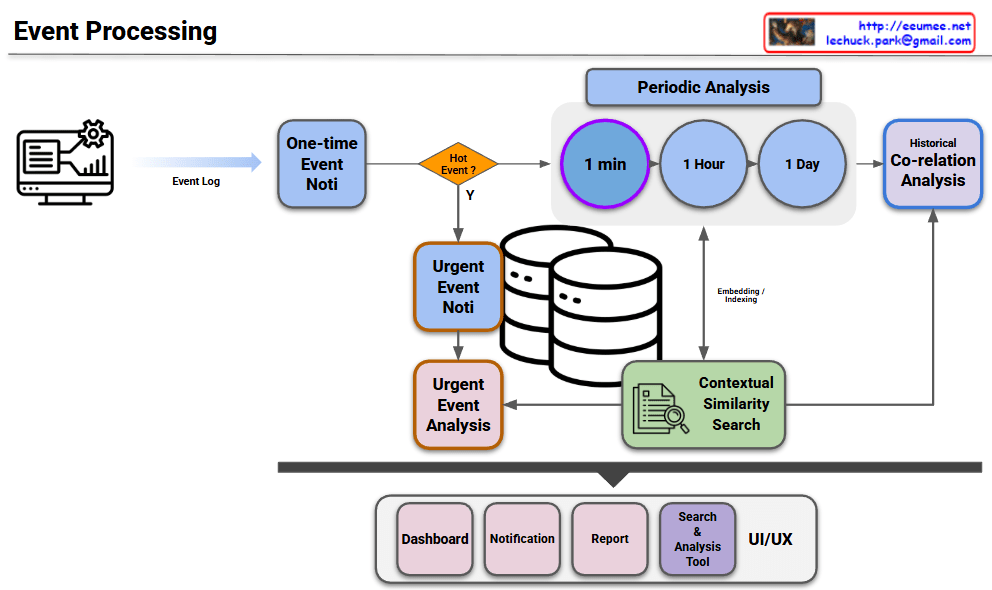

This diagram illustrates a workflow that handles system logs/events by dividing them into real-time urgent responses and periodic deep analysis.

1. Data Ingestion & Filtering

- Event Log → One-time Event Noti: The process begins with incoming event logs triggering an initial, single-instance notification.

- Hot Event Decision: A decision node determines if the event is critical (“Hot Event?”). This splits the workflow into two distinct paths: a Hot Path for emergencies and an Analytical Path for deeper insights.
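
As a rough illustration of the “Hot Event?” decision node, the sketch below routes each event to one of the two paths. The diagram does not say what makes an event hot, so the `Event` fields, the `HOT_SEVERITIES` set, and the path names are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Assumption: severity decides whether an event is "hot".
# The diagram does not define the actual criteria.
HOT_SEVERITIES = {"critical", "fatal"}


@dataclass
class Event:
    source: str
    severity: str
    message: str


def is_hot(event: Event) -> bool:
    """Return True when the event's severity is in the hot set."""
    return event.severity.lower() in HOT_SEVERITIES


def route(event: Event) -> str:
    """Pick the path label for an event (labels are hypothetical)."""
    return "hot_path" if is_hot(event) else "analytical_path"
```

For example, `route(Event("db", "CRITICAL", "disk full"))` selects the hot path, while an `info` event falls through to the analytical path.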

2. Hot Path (Real-time Response)

- Urgent Event Noti & Analysis: If identified as a “Hot Event,” the system immediately issues an urgent notification and performs an urgent analysis while persisting the data to the database. This path appears designed to minimize MTTD (Mean Time To Detect) for critical failures.
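
A minimal sketch of the hot path, assuming an in-memory SQLite table stands in for the database and a plain callback stands in for the notification channel. The table name, the event dictionary keys, and the `handle_hot_event` helper are hypothetical, and the urgent analysis step is left out for brevity. The function returns the detection delay for one event; averaging these delays over many events would give MTTD.

```python
import sqlite3
import time
from typing import Callable, Optional


def open_store() -> sqlite3.Connection:
    """Create an in-memory store (a stand-in for the real database)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE hot_events ("
        "source TEXT, message TEXT, occurred_at REAL, detected_at REAL)"
    )
    return conn


def handle_hot_event(
    conn: sqlite3.Connection,
    event: dict,
    notify: Callable[[str], None],
    now: Optional[float] = None,
) -> float:
    """Notify first, then persist; return the detection delay in seconds."""
    detected_at = time.time() if now is None else now
    # Send the urgent notification before doing any slower work.
    notify(f"[URGENT] {event['source']}: {event['message']}")
    # Persist the event so later analysis can read it back.
    conn.execute(
        "INSERT INTO hot_events VALUES (?, ?, ?, ?)",
        (event["source"], event["message"], event["occurred_at"], detected_at),
    )
    conn.commit()
    return detected_at - event["occurred_at"]
```

Notifying before the database write is a design choice in this sketch: a slow or failing write then cannot hold back the alert.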

3. Periodic & Contextual Analysis (AIOps Layer)

This section indicates a shift from simple monitoring to intelligent AIOps.

- Periodic Analysis: Events are aggregated and analyzed over fixed time windows (1 min, 1 Hour, 1 Day). The purple highlight on “1 min” suggests the current focus is on short-term trend analysis.

- Contextual Similarity Search: The diagram’s explicit “Embedding / Indexing” label suggests vector search, likely backed by a vector database. The implication is that the system matches errors by semantic similarity rather than by keywords alone, so it can surface similar past cases even when the wording differs.

- Historical Correlation Analysis: This module combines the periodic trends with the similarity search results to correlate the current event with historical patterns, aiding Root Cause Analysis (RCA).
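
The periodic windows and the similarity search described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the window lengths mirror the diagram’s 1 min, 1 hour, and 1 day labels, the vectors are hand-written toy embeddings, and `bucket_counts`, `cosine`, and `most_similar` are hypothetical helper names. A real deployment would more likely use an embedding model and a vector index than the linear scan shown here.

```python
import math
from collections import Counter

# Window lengths in seconds, mirroring the labels in the diagram.
WINDOWS = {"1min": 60, "1hour": 3600, "1day": 86400}


def bucket_counts(timestamps, window_seconds):
    """Count events per fixed window, keyed by the window's start time."""
    return Counter(
        int(ts // window_seconds) * window_seconds for ts in timestamps
    )


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query, index, top_k=1):
    """Return the ids of the top_k stored vectors closest to the query."""
    scored = sorted(
        ((cosine(query, vec), case_id) for case_id, vec in index.items()),
        reverse=True,
    )
    return [case_id for _, case_id in scored[:top_k]]
```

Correlating the two outputs, for instance checking whether a spike in a one-minute window coincides with a close match to a past incident, is the kind of step the historical analysis module would then perform.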

4. User Interface (UI/UX)

The processed insights are delivered to the user through four channels:

- Dashboard: High-level status visualization.

- Notification: Alerts for urgent issues.

- Report: Summarized periodic findings.

- Search & Analysis Tool: A tool for granular log investigation.

Summary

- Hybrid Architecture: Separates real-time “Hot Event” handling from batch “Periodic Analysis” to balance speed and insight.

- Semantic Intelligence: Incorporates “Contextual Similarity Search” using Embeddings, enabling the system to identify issues based on meaning rather than just keywords.

- Holistic Observability: Interconnected modules (Urgent, Periodic, Historical) feed into a comprehensive UI/UX to support rapid decision-making and post-mortem analysis.

#EventProcessing #SystemArchitecture #VectorSearch #Observability #RCA