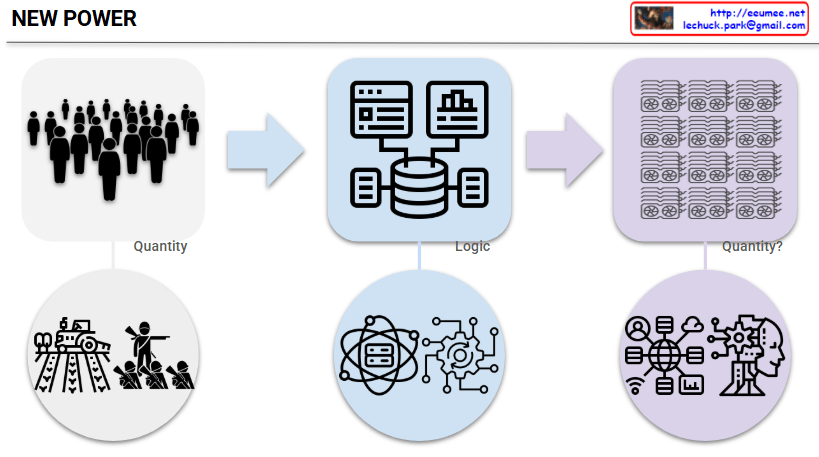

This image, titled “NEW POWER”, illustrates a paradigm shift in the power structures of modern society.

Left Side (Past Power Structure):

- Top: Silhouettes of people representing traditional hierarchical organizational structures

- Bottom: Factories, smokestacks, and workers symbolizing the industrial age

- Characteristic: Power centered on “Quantity” (volume/scale)

Center (Transition Process):

- Top: Icons representing databases and digital interfaces

- Bottom: Technical elements symbolizing networks and connectivity

- Characteristic: “Logic”-based systems

Right Side (New Power Structure):

- Top: Grid-like array representing massive GPU clusters – the core computing resources of the AI era

- Bottom: Icons symbolizing AI, cloud computing, data analytics, and other modern technologies

- Characteristic: “Quantity?” (The return of quantitative competition?) – A new dimension of quantitative competition in the GPU era

This diagram illustrates a fascinating return to an older form of power. During the digital transition period, ‘logical’ elements such as efficiency, innovation, and network effects were what mattered most. With the full advent of the AI era, ‘quantitative competition’ has returned to the center.

In other words, how many GPUs one can secure and operate, rather than smart algorithms or creative ideas, has once again become the decisive competitive advantage. Just as the number of factories and machines determined national power during the Industrial Revolution, the image suggests that we have entered a new era of ‘quantitative warfare’ in which GPU capacity determines dominance in the AI age.

With Claude