With Claude’s Help

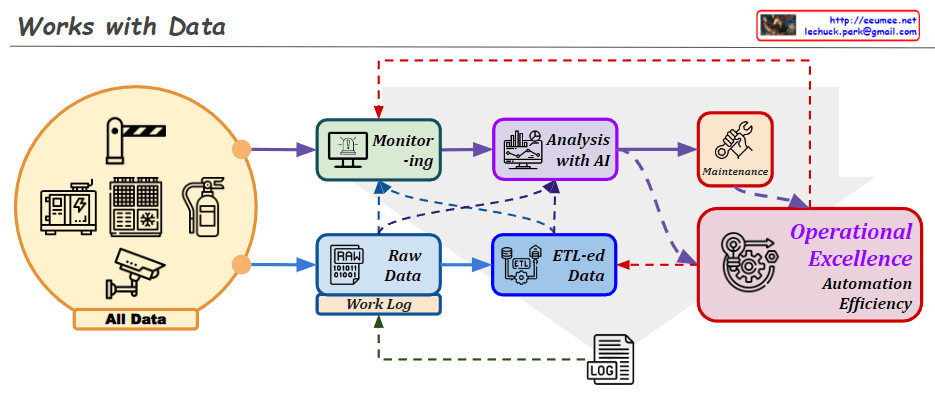

- Normal State:
  - Represented by a gear icon with a green checkmark
  - Indicates system operating under normal conditions
  - Initial state of the monitoring process

- Anomaly Detection:
  - Shown with a magnifying glass and graph patterns
  - The graph patterns are more clearly visualized than before
  - Represents the phase where deviations from normal patterns are detected

- Abnormal State:
  - Depicted by a human figure with warning indicators
  - Represents confirmed abnormal conditions requiring intervention
  - Links directly to action steps

- Analysis and Response Process:
  - Comparison with normal: Shown through A/B document comparison icons
  - Analysis: Data examination phase
  - predictive Action: Now written in lowercase, indicating predicted response measures
  - Recovery Action: Implementation of actual recovery measures

- Learning Feedback:
  - Shows how lessons from recovery actions are fed back into the system
  - Creates a continuous improvement loop
  - Connects recovery actions back to normal operations

The workflow still illustrates the complete operational cycle: monitoring, detection, analysis, response, and continuous learning. It shows a systematic approach to handling operational anomalies and keeping the system stable.
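The cycle described above can be sketched as a small state machine. This is only an illustration of the loop as read off the diagram description, not an implementation of any real monitoring system: the `Stage` names, the `NEXT_STAGE` ordering, and the `deviation_found` branch (returning to normal when no deviation is found) are all assumptions introduced here.

```python
from enum import Enum, auto


class Stage(Enum):
    """Stages named in the diagram description (identifiers are hypothetical)."""
    NORMAL = auto()
    ANOMALY_DETECTION = auto()
    ABNORMAL = auto()
    COMPARISON_WITH_NORMAL = auto()
    ANALYSIS = auto()
    PREDICTIVE_ACTION = auto()
    RECOVERY_ACTION = auto()
    LEARNING_FEEDBACK = auto()


# Assumed ordering of the stages; the feedback stage closes the loop
# by leading back to normal operations.
NEXT_STAGE = {
    Stage.NORMAL: Stage.ANOMALY_DETECTION,
    Stage.ANOMALY_DETECTION: Stage.ABNORMAL,
    Stage.ABNORMAL: Stage.COMPARISON_WITH_NORMAL,
    Stage.COMPARISON_WITH_NORMAL: Stage.ANALYSIS,
    Stage.ANALYSIS: Stage.PREDICTIVE_ACTION,
    Stage.PREDICTIVE_ACTION: Stage.RECOVERY_ACTION,
    Stage.RECOVERY_ACTION: Stage.LEARNING_FEEDBACK,
    Stage.LEARNING_FEEDBACK: Stage.NORMAL,
}


def next_stage(stage, deviation_found=True):
    """Return the stage that follows `stage`.

    Assumption: if detection finds no deviation, the system simply
    returns to the normal state instead of escalating.
    """
    if stage is Stage.ANOMALY_DETECTION and not deviation_found:
        return Stage.NORMAL
    return NEXT_STAGE[stage]


def run_cycle():
    """Walk one full loop starting from NORMAL and list the stages visited."""
    visited = [Stage.NORMAL]
    stage = next_stage(Stage.NORMAL)
    while stage is not Stage.NORMAL:
        visited.append(stage)
        stage = next_stage(stage)
    return visited


if __name__ == "__main__":
    for stage in run_cycle():
        print(stage.name)
```

Keeping the transitions in a single table makes the loop easy to compare against the diagram: each arrow corresponds to one entry, and the last entry is the feedback path back to normal operations.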