AI Data Center Cooling System Architecture Analysis

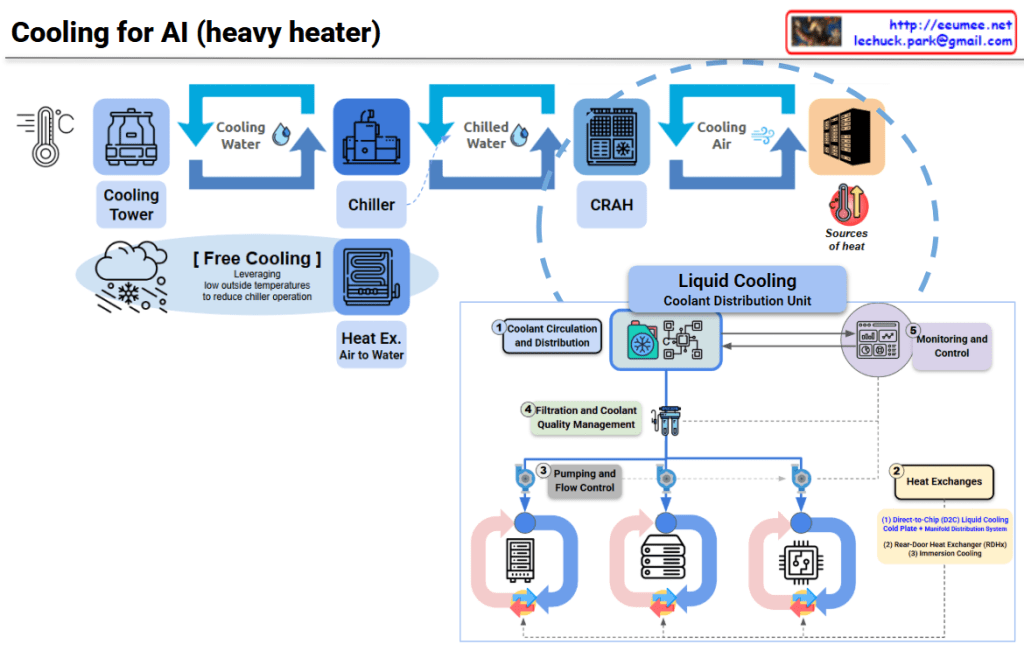

This diagram illustrates the evolution of data center cooling systems designed for high-heat AI workloads.

Traditional Cooling System (Top Section)

Three-Stage Cooling Process:

- Cooling Tower – Uses ambient air to cool water

- Chiller – Further refrigerates the cooled water

- CRAH (Computer Room Air Handler) – Uses the chilled water to cool air, then distributes that cold air to the server room

A Free Cooling option is also shown. When outside temperatures are low enough, it reduces chiller operation and saves energy.
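The free cooling decision can be sketched as a simple mode selector. This is a minimal illustration, not the logic of any real building management system: the `select_mode` function, the chilled-water setpoint, the tower approach, and the partial band are all assumed placeholder values that do not come from the diagram.

```python
from enum import Enum


class CoolingMode(Enum):
    FREE = "free cooling"             # chiller off, outside conditions carry the load
    PARTIAL = "partial free cooling"  # outside conditions pre-cool, chiller trims
    MECHANICAL = "chiller"            # chiller carries the full load


def select_mode(outdoor_wet_bulb_c: float,
                chilled_water_setpoint_c: float = 18.0,  # assumed setpoint
                approach_c: float = 4.0,                 # assumed tower approach
                partial_band_c: float = 6.0) -> CoolingMode:
    """Pick a cooling mode from the outdoor wet-bulb temperature.

    All thresholds are illustrative assumptions.
    """
    # Coldest water the tower side could plausibly deliver under the assumed approach.
    achievable_c = outdoor_wet_bulb_c + approach_c
    if achievable_c <= chilled_water_setpoint_c:
        return CoolingMode.FREE
    if achievable_c <= chilled_water_setpoint_c + partial_band_c:
        return CoolingMode.PARTIAL
    return CoolingMode.MECHANICAL


if __name__ == "__main__":
    for wet_bulb in (5.0, 17.0, 25.0):
        print(wet_bulb, select_mode(wet_bulb).value)
```

With these placeholder thresholds, a cold day selects free cooling, a mild day selects the partial mode, and a hot day falls back to the chiller.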

New Approach for AI DC: Liquid Cooling System (Bottom Section)

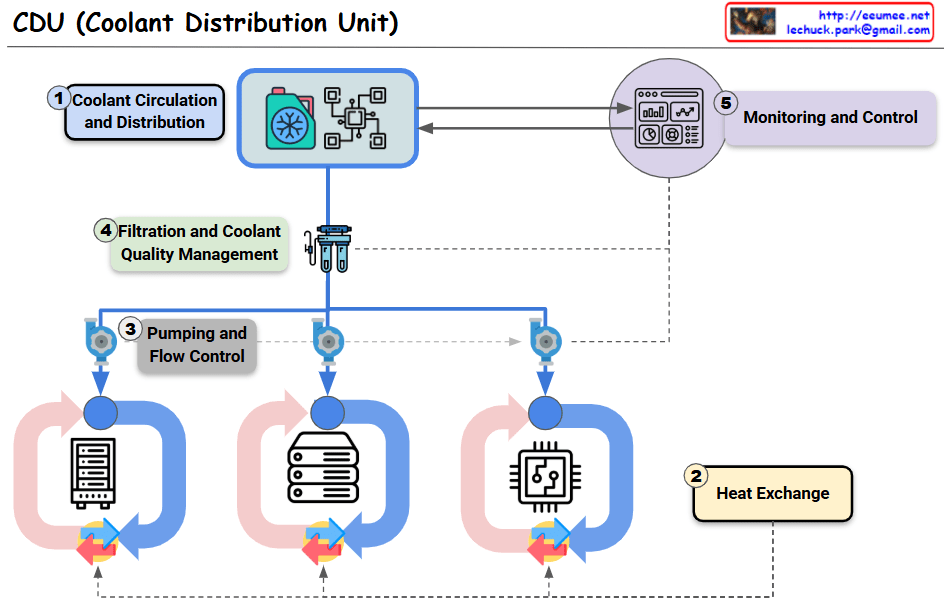

To handle the extreme heat generated by high-density AI chips, the diagram introduces a liquid cooling system built around a CDU (Coolant Distribution Unit).

Key Components:

① Coolant Circulation and Distribution

- Circulates coolant directly to the servers

② Heat Exchange (Two Methods)

- Direct-to-Chip (D2C) Liquid Cooling: Cold plates in direct contact with the chips, fed through a manifold distribution system

- Rear-Door Heat Exchanger (RDHx): Liquid-cooled heat exchanger mounted on the rack's rear door that cools the servers' hot exhaust air

③ Pumping and Flow Control

- Pumps and flow control for coolant circulation

④ Filtration and Coolant Quality Management

- Maintains coolant quality and removes contaminants

⑤ Monitoring and Control

- Real-time monitoring and cooling performance control
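As a rough illustration of how pumping (③) and monitoring (⑤) might work together, the sketch below adjusts pump speed with a simple proportional rule and raises basic alarms. Everything here is hypothetical: `LoopReading`, `next_pump_speed`, `alarms`, and every setpoint and limit are assumptions for illustration, not the interface or values of any real CDU.

```python
from dataclasses import dataclass


@dataclass
class LoopReading:
    supply_temp_c: float  # coolant temperature leaving the CDU
    return_temp_c: float  # coolant temperature coming back from the racks
    flow_lpm: float       # measured flow in litres per minute


def next_pump_speed(reading: LoopReading,
                    current_speed_pct: float,
                    target_delta_t_c: float = 10.0,  # assumed target temperature rise
                    gain_pct_per_c: float = 5.0,     # assumed proportional gain
                    min_speed_pct: float = 30.0,
                    max_speed_pct: float = 100.0) -> float:
    """Proportional control: speed up when the loop warms more than the target."""
    delta_t = reading.return_temp_c - reading.supply_temp_c
    error = delta_t - target_delta_t_c
    speed = current_speed_pct + gain_pct_per_c * error
    # Clamp to the pump's allowed operating range.
    return max(min_speed_pct, min(max_speed_pct, speed))


def alarms(reading: LoopReading,
           max_supply_temp_c: float = 45.0,  # assumed limit
           min_flow_lpm: float = 20.0) -> list[str]:
    """Return human-readable alarms for out-of-range readings."""
    active = []
    if reading.supply_temp_c > max_supply_temp_c:
        active.append("supply temperature high")
    if reading.flow_lpm < min_flow_lpm:
        active.append("flow low")
    return active


if __name__ == "__main__":
    reading = LoopReading(supply_temp_c=30.0, return_temp_c=44.0, flow_lpm=50.0)
    print(next_pump_speed(reading, current_speed_pct=60.0))
    print(alarms(reading))
```

A production controller would more likely use PID control with rate limiting and redundant sensors. The proportional rule is used here only because it is the shortest way to show the feedback idea.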

Critical Differences

Traditional Method: Air cooling → Indirect, suitable for low-density workloads

AI DC Method: Liquid cooling → Direct, high-efficiency, capable of handling high TDP (Thermal Design Power) of AI chips

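Water conducts heat roughly 25x better than air (thermal conductivity of about 0.6 W/m·K versus about 0.026 W/m·K) and carries far more heat per unit volume. That makes liquid effective for cooling AI accelerators (GPUs, TPUs), which generate anywhere from hundreds of watts to kilowatt-level heat.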
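To put the comparison in numbers, the flow needed to carry away a given heat load follows from Q = ṁ · c_p · ΔT. The sketch below is a back-of-the-envelope calculation, assuming approximate textbook properties for water and air near room temperature, a 1 kW heat load, and a 10 K coolant temperature rise. None of these figures come from the diagram, and the function name is made up for illustration.

```python
def volumetric_flow_m3s(heat_w: float,
                        density_kg_m3: float,
                        cp_j_kg_k: float,
                        delta_t_k: float) -> float:
    """Volume flow needed to absorb heat_w with a delta_t_k temperature rise.

    Derived from Q = m_dot * c_p * delta_T with m_dot = density * volume_flow.
    """
    return heat_w / (density_kg_m3 * cp_j_kg_k * delta_t_k)


# Approximate properties near room temperature (assumed values).
WATER = {"density_kg_m3": 997.0, "cp_j_kg_k": 4180.0}
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}

HEAT_W = 1000.0    # assumed load: one kW-class accelerator
DELTA_T_K = 10.0   # assumed coolant temperature rise

water_flow = volumetric_flow_m3s(HEAT_W, delta_t_k=DELTA_T_K, **WATER)
air_flow = volumetric_flow_m3s(HEAT_W, delta_t_k=DELTA_T_K, **AIR)

water_lpm = water_flow * 60_000  # m^3/s to litres per minute
ratio = air_flow / water_flow

if __name__ == "__main__":
    print(f"water: {water_lpm:.2f} L/min")
    print(f"air:   {air_flow:.3f} m^3/s")
    print(f"air needs about {ratio:.0f}x the volume flow of water")
```

Under these assumptions, water needs on the order of 1.4 L/min while air needs roughly 0.08 m³/s, a volume ratio in the thousands. This per-volume heat capacity gap is separate from the conductivity comparison and is a large part of why liquid loops scale to dense racks.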

Summary:

- Traditional data centers use air-based cooling (Cooling Tower → Chiller → CRAH), suitable for standard workloads.

- AI data centers require liquid cooling with CDU systems due to extreme heat from high-density AI chips.

- Liquid cooling removes heat directly at the chip, and liquid conducts heat roughly 25x better than air, supporting kW-level heat dissipation.

#AIDataCenter #LiquidCooling #DataCenterInfrastructure #CDU #ThermalManagement #DirectToChip #AIInfrastructure #GreenDataCenter #HeatDissipation #HyperscaleComputing #AIWorkload #DataCenterCooling #ImmersionCooling #EnergyEfficiency #NextGenDataCenter

With Claude