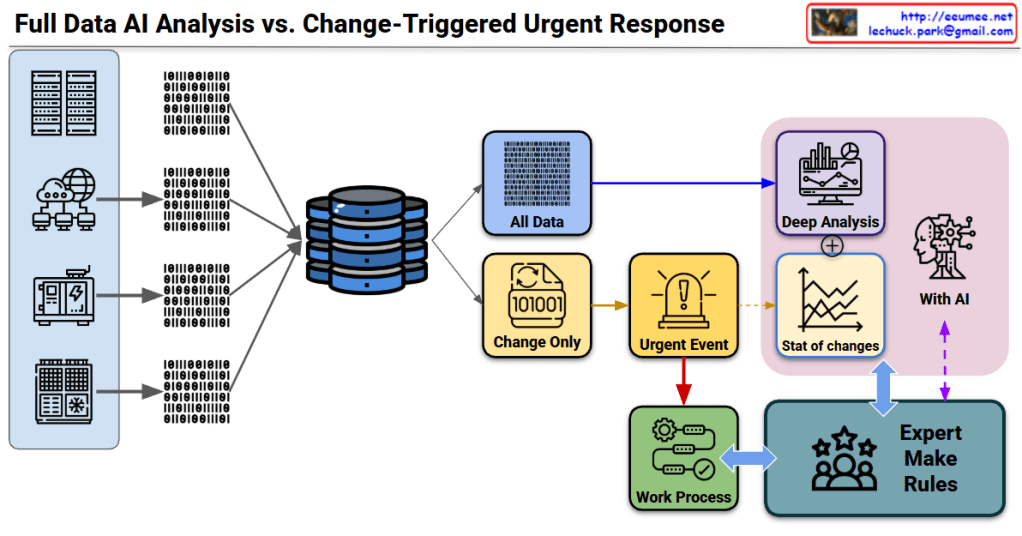

Image Analysis: Full Data AI Analysis vs. Change-Triggered Urgent Response

This diagram illustrates a system architecture that combines two complementary data processing strategies and runs them in parallel.

🎯 Core 1: Two Data Processing Approaches

Approach A: Full Data Processing (Analysis)

- All Data path (blue)

- Collects and comprehensively analyzes all data

- Runs an in-depth pass in the Deep Analysis stage

- Applies AI-powered statistical analysis of changes (Stat of changes)

- Characteristics: Identifies overall patterns, trends, and correlations
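As a rough illustration of the All Data path, the sketch below computes statistics over an entire history of numeric readings. It covers the readings themselves, their step-to-step changes (one possible reading of "Stat of changes"), and a least-squares trend slope. The function name `analyze_all_data` and the single-series input are assumptions made for illustration. A real Deep Analysis stage would likely use richer models.

```python
from statistics import mean, stdev


def analyze_all_data(readings: list[float]) -> dict[str, float]:
    """Hypothetical deep-analysis helper over the full history of one series."""
    if len(readings) < 3:
        raise ValueError("need at least 3 readings")
    # Step-to-step changes: a simple stand-in for "Stat of changes"
    changes = [b - a for a, b in zip(readings, readings[1:])]
    n = len(readings)
    x_bar = (n - 1) / 2  # mean of the time indices 0..n-1
    y_bar = mean(readings)
    # Least-squares slope of readings against time index = overall trend
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(readings))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return {
        "mean": y_bar,
        "change_mean": mean(changes),
        "change_stdev": stdev(changes),
        "trend_slope": num / den,
    }
```

Because every call re-reads the whole history, a pass like this would typically run in batches rather than on each new reading. That fits the path's emphasis on thoroughness over speed.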

Approach B: Separate Change Detection Processing

- Change Only path (yellow)

- Selectively detects only changes

- Extracts and processes only deltas (differences)

- Characteristics: Fast response time, efficient resource utilization
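The Change Only path can be sketched as a diff between two consecutive snapshots. Here a snapshot is assumed to be a dictionary that maps a hypothetical sensor ID to its latest value. Only keys whose value moved by more than `tolerance`, or that appeared or disappeared, are passed on.

```python
from typing import Optional

Delta = tuple[Optional[float], Optional[float]]


def extract_deltas(
    previous: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.0,
) -> dict[str, Delta]:
    """Hypothetical helper: return only what changed, as key -> (old, new).

    None marks a key that was added (old is None) or removed (new is None).
    """
    deltas: dict[str, Delta] = {}
    for key in previous.keys() | current.keys():
        old, new = previous.get(key), current.get(key)
        if old is None or new is None:
            deltas[key] = (old, new)  # key appeared or disappeared
        elif abs(new - old) > tolerance:
            deltas[key] = (old, new)  # value moved beyond the tolerance
    return deltas
```

Unchanged keys never leave this function, which is where the path's speed and resource savings would come from.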

🔥 Core 2: Analysis→Urgent Response→Expert Processing Flow

Stage 1: Analysis

- Full Data Analysis: AI-based Deep Analysis

- Change Detection: Change Only monitoring

Stage 2: Urgent Response (Urgent Event)

- Immediate alert generation when a change is detected (⚠️ Urgent Event)

- Automated execution of a first-line response process

- Direct linkage to the Work Process
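The three Stage 2 steps could be wired together as follows: an immediate alert, an automated first-line response, and a hand-off to the Work Process. Several pieces are assumptions for illustration: the `limit` threshold, the `first_response` callback, and the use of a `deque` as the Work Process queue. The post does not specify how urgency is decided.

```python
import logging
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class UrgentEvent:
    key: str
    old: float
    new: float


def raise_urgent_events(
    deltas: dict[str, tuple[float, float]],
    limit: float,
    work_queue: deque,
    first_response: Callable[[UrgentEvent], None],
) -> list[UrgentEvent]:
    """Hypothetical Stage 2 handler: turn large changes into urgent events."""
    events = []
    for key, (old, new) in deltas.items():
        if abs(new - old) < limit:
            continue  # small change: no urgent event
        event = UrgentEvent(key, old, new)
        logging.warning("URGENT %s: %s -> %s", key, old, new)  # immediate alert
        first_response(event)     # automated first-line response
        work_queue.append(event)  # hand-off to the Work Process
        events.append(event)
    return events
```

In practice the callback might stop a machine or open a ticket. Here it is left abstract so the routing logic stays visible.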

Stage 3: Expert Processing (Expert Make Rules)

- Human expert intervention

- Integrated review of AI analysis results + urgent event information

- Creation and modification of situation-appropriate rules

- Work Process optimization
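One way to picture Expert Make Rules is as a rule book that experts can add to, modify, or prune, and that the Work Process consults for each event. `Rule`, `RuleBook`, and the dictionary-shaped event are hypothetical names chosen for this sketch. Production systems often use a dedicated rules engine instead.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    action: str


class RuleBook:
    """Hypothetical expert-maintained rule set."""

    def __init__(self) -> None:
        self._rules: dict[str, Rule] = {}

    def upsert(self, rule: Rule) -> None:
        """Create a rule, or replace an existing rule with the same name."""
        self._rules[rule.name] = rule

    def remove(self, name: str) -> None:
        self._rules.pop(name, None)

    def decide(self, event: dict) -> Optional[str]:
        """Return the action of the first matching rule, in the order rule
        names were first added; None if no rule matches."""
        for rule in self._rules.values():
            if rule.condition(event):
                return rule.action
        return None
```

Keeping rules as data rather than hard-coded branches is what lets experts revise them without redeploying the Work Process.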

🔄 Integrated Process Flow

[Data Collection] → [Path Bifurcation]

- [All Data] → [Deep Analysis] → [AI Statistical Analysis]

- [Change Only] → [Urgent Event] → [AI Statistical Analysis]

- Both paths → [Work Process] ↔ [Expert Make Rules] (feedback loop with AI)
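The flow above can be sketched as a single class in which every reading feeds both paths. Each reading is appended to the full history (All Data). If the jump from the previous reading reaches a limit, it is also queued as an urgent item (Change Only). A periodic `deep_analysis` call then summarizes the history together with the urgent items, loosely mirroring the point where the two paths merge. The class, its single-series input, and the fixed `urgent_limit` are assumptions.

```python
from statistics import mean, pstdev


class DualPathPipeline:
    """Hypothetical sketch: one numeric stream split into two paths."""

    def __init__(self, urgent_limit: float) -> None:
        self.urgent_limit = urgent_limit
        self.history: list[float] = []                 # All Data path
        self.work_queue: list[tuple[str, float]] = []  # items for the Work Process

    def ingest(self, value: float) -> None:
        """Data Collection -> Path Bifurcation for a single reading."""
        previous = self.history[-1] if self.history else None
        self.history.append(value)  # All Data: keep everything
        if previous is not None:
            delta = value - previous  # Change Only: look at the delta
            if abs(delta) >= self.urgent_limit:
                self.work_queue.append(("urgent", delta))

    def deep_analysis(self) -> dict[str, float]:
        """Batch summary of the full history plus urgent items raised so far."""
        if len(self.history) < 2:
            raise ValueError("need at least 2 readings")
        changes = [b - a for a, b in zip(self.history, self.history[1:])]
        return {
            "mean": mean(self.history),
            "change_stdev": pstdev(changes),
            "urgent_events": len(self.work_queue),
        }
```

The urgent check runs on every reading, while the full summary can run on a slower schedule. This is the stability-plus-agility split the diagram describes.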

💡 Core System Value

- Dual Processing Strategy: Stability (full analysis) + Agility (change detection)

- 3-Stage Response System: Automated analysis → Urgent process → Expert judgment

- AI + Human Collaboration: Combines AI analytical power with human expert judgment

- Continuous Improvement: Virtuous cycle where expert rules feed back into AI learning
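The feedback loop can be illustrated with a hypothetical threshold that both sides adjust. The AI side proposes an urgent-event limit from full-data change statistics (mean plus `k` standard deviations of change magnitudes). Expert labels on past events (real incident or false alarm) then nudge it up or down. The functions, the `k` and `step` parameters, and the multiplicative update are all assumptions. The post does not describe a specific learning mechanism.

```python
from statistics import mean, stdev


def suggest_limit(changes: list[float], k: float = 3.0) -> float:
    """Assumed AI side: propose a limit from change statistics.
    Needs at least 2 changes (stdev raises StatisticsError otherwise)."""
    magnitudes = [abs(c) for c in changes]
    return mean(magnitudes) + k * stdev(magnitudes)


def apply_expert_feedback(
    limit: float, labels: list[tuple[float, bool]], step: float = 0.1
) -> float:
    """Assumed expert side: each label is (delta, was_real_incident)."""
    for delta, real in labels:
        if abs(delta) >= limit and not real:
            limit *= 1 + step  # false alarm: make the limit less sensitive
        elif abs(delta) < limit and real:
            limit *= 1 - step  # missed incident: make it more sensitive
    return limit
```

Each round of expert labels leaves a slightly better-calibrated limit for the next round of automated detection, which is one concrete form the virtuous cycle could take.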

This architecture suits environments where real-time response is essential but expert judgment remains critical, such as manufacturing, infrastructure operations, and security monitoring.

Summary

- Dual-path system: Comprehensive full data analysis (stability) + selective change detection (speed) working in parallel

- Three-tier response: detected changes trigger urgent events alongside AI automated analysis, followed by work processes and expert rule refinement

- Human-AI synergy: Continuous improvement loop where expert knowledge enhances AI capabilities while AI insights inform expert decisions

#DataArchitecture #AIAnalysis #EventDrivenArchitecture #RealTimeMonitoring #HybridProcessing #ExpertSystems #ChangeDetection #UrgentResponse #IndustrialAI #SmartMonitoring #DataProcessing #AIHumanCollaboration #PredictiveMaintenance #IoTArchitecture #EnterpriseAI