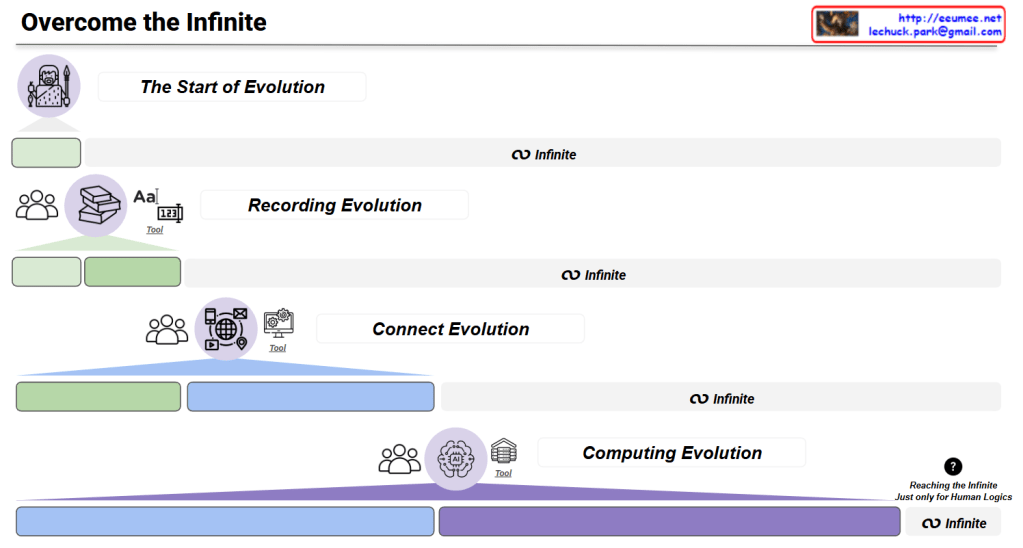

This image charts how data center power consumption, daily data volume, facility count, and energy efficiency (PUE) have changed from the Internet era to the AI/LLM era.

Key Development Stages

1. Internet Era

- 10 TWh (terawatt-hours) power consumption

- 2 PB/day (petabytes/day) data processing

- 1K DC (1,000 data centers)

- PUE 3.0 (Power Usage Effectiveness: total facility energy divided by IT equipment energy; lower is better)

2. Mobile & Cloud Era

- 200 TWh (20x increase)

- 20,000 PB/day (10,000x increase)

- 4K DC (4x increase)

- PUE 1.8 (improved efficiency)

3. AI/LLM (Transformer) Era – marked “Now Here?” on the chart

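- 400+ TWh (2x the Mobile & Cloud era, 40x the Internet era)

- 1,000,000,000 PB/day = 1 billion PB/day (50,000x the Mobile & Cloud era, 500,000,000x the Internet era)

- 12K DC (3x the Mobile & Cloud era, 12x the Internet era)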

- PUE 1.4 (further improved efficiency)
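
The era-to-era multipliers can be recomputed directly from the figures listed above. The snippet below is a minimal sketch: the dictionary keys and the `growth` helper are illustrative names, and "400+ TWh" is treated as exactly 400, so that ratio is a lower bound.

```python
# Figures as listed for each era; "400+" TWh is taken as 400 (a lower bound).
ERAS = {
    "Internet": {"twh": 10, "pb_per_day": 2, "data_centers": 1_000},
    "Mobile & Cloud": {"twh": 200, "pb_per_day": 20_000, "data_centers": 4_000},
    "AI/LLM": {"twh": 400, "pb_per_day": 1_000_000_000, "data_centers": 12_000},
}


def growth(metric: str, later: str, earlier: str) -> float:
    """Return how many times larger `metric` is in `later` than in `earlier`."""
    return ERAS[later][metric] / ERAS[earlier][metric]


if __name__ == "__main__":
    names = list(ERAS)
    for metric in ("twh", "pb_per_day", "data_centers"):
        # Compare each era with the one before it, then with the first era.
        for prev, cur in zip(names, names[1:]):
            print(f"{metric}: {cur} vs {prev}: {growth(metric, cur, prev):,.0f}x")
        print(f"{metric}: {names[-1]} vs {names[0]}: "
              f"{growth(metric, names[-1], names[0]):,.0f}x")
```

Stating the baseline era next to each multiplier avoids mixing "versus the previous era" with "versus the Internet era" in the same list.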

Summary

Between the Internet era and the AI/LLM era, daily data processing grew from 2 PB to 1 billion PB and power consumption from 10 TWh to more than 400 TWh, growth the chart attributes most recently to AI and Large Language Models. Data center efficiency improved over the same span, with PUE falling from 3.0 to 1.4, but that gain is small next to the growth in demand. The chart underscores how much infrastructure modern AI systems require.
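
PUE is total facility energy divided by the energy delivered to IT equipment, so it also indicates how much of each era's power actually reached the computing hardware. The following is a rough sketch, assuming the TWh figures are total facility energy and that each era's PUE applies uniformly across all data centers; neither assumption is stated in the chart, and the function names are illustrative.

```python
def it_energy_twh(total_twh: float, pue: float) -> float:
    """Energy reaching IT equipment, given total facility energy and PUE."""
    return total_twh / pue


def overhead_share(pue: float) -> float:
    """Fraction of facility energy spent on cooling, power delivery, etc."""
    return 1 - 1 / pue


if __name__ == "__main__":
    # (era, total TWh, PUE) as listed; "400+" TWh is taken as 400.
    for era, total, pue in [
        ("Internet", 10, 3.0),
        ("Mobile & Cloud", 200, 1.8),
        ("AI/LLM", 400, 1.4),
    ]:
        print(f"{era}: IT energy ~{it_energy_twh(total, pue):.1f} TWh, "
              f"overhead ~{overhead_share(pue):.0%}")
```

Under these assumptions, the energy reaching IT equipment grows faster than the total, because a smaller share is lost to overhead in each successive era.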

#AI #LLM #DataCenter #CloudComputing #MachineLearning #ArtificialIntelligence #BigData #Transformer #DeepLearning #AIInfrastructure #TechTrends #DigitalTransformation #ComputingPower #DataProcessing #EnergyEfficiency