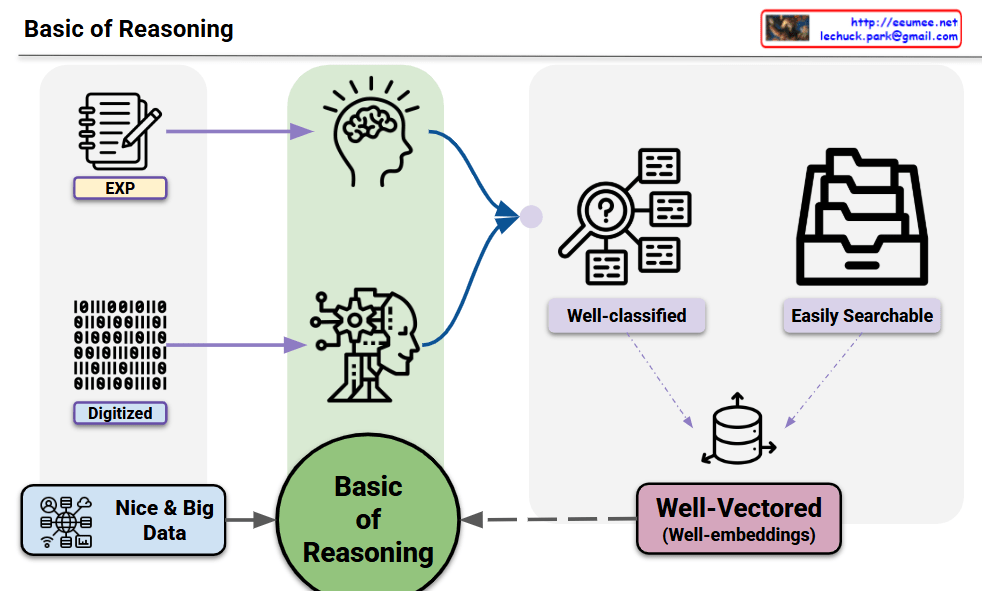

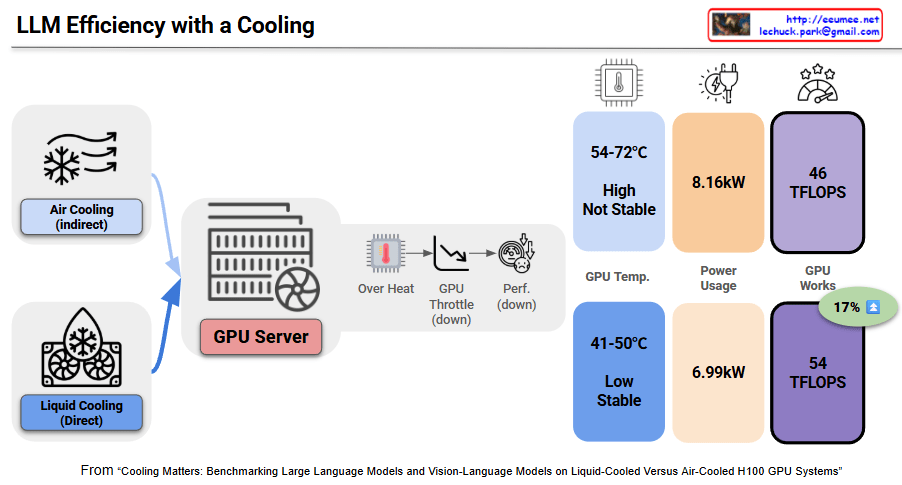

This image uses benchmark results to show how cooling stability affects both LLM performance and energy efficiency in GPU servers.

Cascading Effects of Unstable Cooling

Problems with Unstable Air Cooling:

- GPU Temperature: 54-72°C (high and unstable)

- Thermal throttling occurs: GPUs automatically reduce clock speeds to prevent overheating, which significantly degrades performance

- Result: a double penalty of reduced performance and increased power consumption

Energy Efficiency Impact:

- Power Consumption: 8.16 kW (high)

- Performance: 46 TFLOPS (degraded)

- Energy Efficiency: 5.6 TFLOPS/kW (poor performance-to-power ratio)

Benefits of Stable Liquid Cooling

Temperature Stability Achievement:

- GPU Temperature: 41-50°C (low and stable)

- No thermal throttling → sustained optimal performance

Energy Efficiency Improvement:

- Power Consumption: 6.99 kW (14% reduction)

- Performance: 54 TFLOPS (17% improvement)

- Energy Efficiency: 7.7 TFLOPS/kW (38% improvement)
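
The percentages above follow directly from the raw power and throughput figures. As a rough check, the Python sketch below recomputes them from the two benchmark data points; the function and variable names are illustrative and not part of the benchmark itself.

```python
def efficiency(tflops: float, power_kw: float) -> float:
    """Performance per unit of power, in TFLOPS/kW."""
    return tflops / power_kw


def pct_change(old: float, new: float) -> float:
    """Signed percent change going from old to new."""
    return (new - old) / old * 100.0


# Figures taken from the benchmark described above.
air = {"power_kw": 8.16, "tflops": 46.0}
liquid = {"power_kw": 6.99, "tflops": 54.0}

air_eff = efficiency(air["tflops"], air["power_kw"])
liquid_eff = efficiency(liquid["tflops"], liquid["power_kw"])

power_delta = pct_change(air["power_kw"], liquid["power_kw"])
perf_delta = pct_change(air["tflops"], liquid["tflops"])
eff_delta = pct_change(air_eff, liquid_eff)

print(f"air efficiency:    {air_eff:.1f} TFLOPS/kW")
print(f"liquid efficiency: {liquid_eff:.1f} TFLOPS/kW")
print(f"power change:      {power_delta:+.1f}%")
print(f"performance change:{perf_delta:+.1f}%")
print(f"efficiency change: {eff_delta:+.1f}%")
```

Computed from the unrounded ratios, the efficiency gain comes out at roughly 37%; the 38% figure quoted above appears to come from dividing the rounded values 7.7 and 5.6.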

Core Mechanisms: How Cooling Affects Energy Efficiency

- Thermal Throttling Prevention: Stable cooling allows GPUs to maintain peak performance continuously

- Power Efficiency Optimization: Eliminates inefficient power consumption caused by overheating

- Performance Consistency: Unstable cooling can cause GPUs to draw 50% of their power budget while delivering only 25% of their performance
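
To put the last point in efficiency terms: a GPU that draws half of its power budget but delivers only a quarter of its performance is running at half of its normal performance per watt. A minimal sketch of that arithmetic follows, assuming the baseline is 100% performance at 100% of the power budget; the helper name is hypothetical.

```python
def relative_efficiency(perf_fraction: float, power_fraction: float) -> float:
    """Performance per watt relative to the unthrottled baseline (1.0 = no loss).

    Assumes the baseline is full performance at the full power budget.
    """
    if power_fraction <= 0:
        raise ValueError("power_fraction must be positive")
    return perf_fraction / power_fraction


# The throttled case from the list above: 25% performance at 50% power.
throttled = relative_efficiency(perf_fraction=0.25, power_fraction=0.50)
print(f"relative performance per watt: {throttled:.2f}")
```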

Advanced cooling systems can achieve energy savings of 17% to 23% compared to traditional methods. This benchmark shows that, counterintuitively, investing in proper cooling substantially improves overall energy efficiency.

Final Summary

Unstable cooling triggers thermal throttling that degrades LLM performance while increasing power consumption, a dual efficiency loss. Stable liquid cooling delivers a 17% performance gain and a 14% power saving at the same time, improving energy efficiency by 38%. In AI infrastructure, adequate cooling investment is essential for optimizing both performance and energy efficiency.

With Claude