LLM Operations System Analysis

This diagram illustrates the architecture of an LLM Operations (LLMOps) system, showing how large language models (LLMs) are deployed and operated in industrial settings.

Key Components and Data Flow

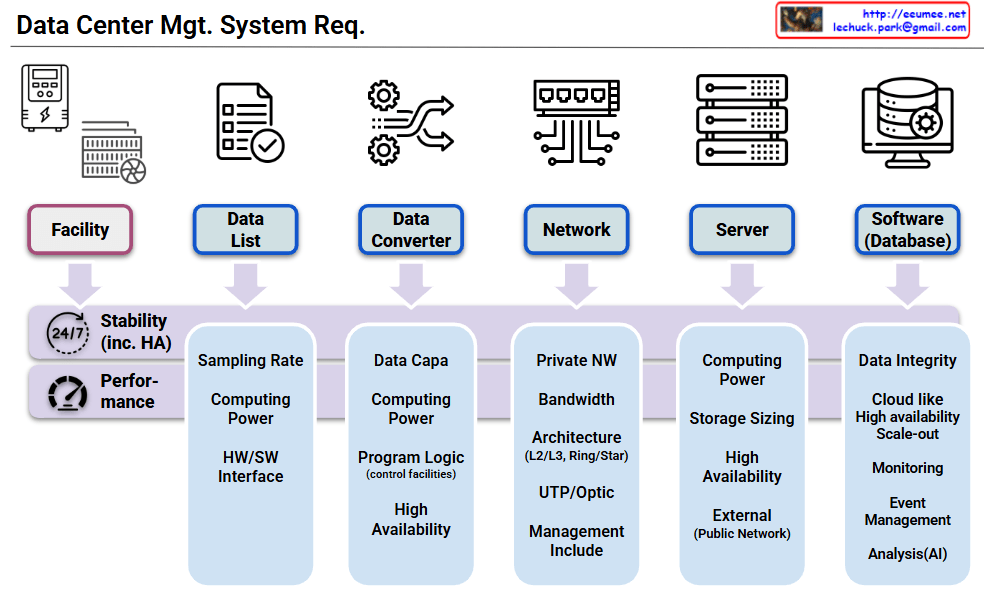

1. Data Input Sources (3 Categories)

- Facility: Digitized sensor data from equipment; detected anomalies generate alert/event logs

- Manual: Equipment manuals and technical documentation

- Experience: Operating procedures that capture field experience, including SOPs, MOPs, and EOPs (Standard, Maintenance, and Emergency Operating Procedures)
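To make the three input categories concrete, here is a minimal Python sketch of how they might be represented and how facility sensor readings could be turned into alert/event logs. The names `SourceCategory`, `Document`, `SensorReading`, and `detect_alerts`, as well as the simple upper-threshold rule, are hypothetical assumptions for illustration; the diagram does not specify how detection works.

```python
from dataclasses import dataclass
from enum import Enum


class SourceCategory(Enum):
    """The three input categories shown in the diagram."""
    FACILITY = "facility"      # alert/event logs derived from sensor data
    MANUAL = "manual"          # equipment manuals and technical documentation
    EXPERIENCE = "experience"  # SOP/MOP/EOP procedures


@dataclass
class Document:
    """A unit of text that will later be indexed for retrieval."""
    source: SourceCategory
    title: str
    text: str


@dataclass
class SensorReading:
    sensor_id: str
    value: float


def detect_alerts(readings, thresholds):
    """Turn digitized sensor readings into alert-log documents.

    Assumption: a reading becomes an alert when it exceeds a per-sensor
    upper threshold; sensors without a configured threshold are ignored.
    """
    alerts = []
    for r in readings:
        limit = thresholds.get(r.sensor_id)
        if limit is not None and r.value > limit:
            alerts.append(Document(
                source=SourceCategory.FACILITY,
                title=f"ALERT {r.sensor_id}",
                text=f"{r.sensor_id} reading {r.value} exceeds limit {limit}",
            ))
    return alerts
```

Representing alerts as the same `Document` type used for manuals and procedures lets all three categories flow into one retrieval index.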

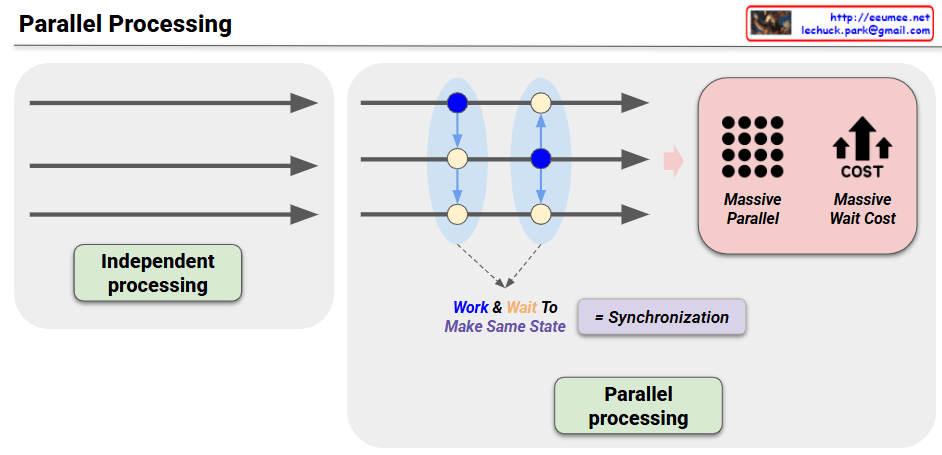

2. Central Processing System

- RAG (Retrieval-Augmented Generation): The central hub that integrates all incoming data and retrieves the relevant pieces to ground the LLM's responses

- Facility data is visualized through metrics and charts for monitoring purposes
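The retrieval step of RAG can be sketched as follows, assuming all three source categories have already been converted into text documents. This is a simplified stand-in rather than the system's actual method: it ranks documents by how often they contain the query's terms, whereas real RAG deployments usually rely on embedding models and a vector store. `KeywordIndex` and `tokenize` are hypothetical names.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # "facility", "manual", or "experience"
    title: str
    text: str


def tokenize(text):
    """Lowercase alphanumeric tokens; a stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


class KeywordIndex:
    """Toy retriever that scores documents by query-term occurrences."""

    def __init__(self, documents):
        self.documents = list(documents)
        # Precompute term frequencies for each document's title and body.
        self.term_counts = [
            Counter(tokenize(d.title + " " + d.text)) for d in self.documents
        ]

    def search(self, query, k=3):
        """Return up to k documents with a nonzero score, best first."""
        q_terms = set(tokenize(query))
        scored = []
        for doc, counts in zip(self.documents, self.term_counts):
            score = sum(counts[t] for t in q_terms)
            if score > 0:
                scored.append((score, doc))
        # Sort on the score only; ties keep their original order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in scored[:k]]
```

Because the index mixes manuals, procedures, and alert logs, one query can surface an emergency procedure alongside the relevant equipment manual.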

3. LLM Operations

- The central LLM synthesizes the information supplied through RAG to provide operational support

- An interactive interface lets users communicate with the system and submit queries
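One plausible way the LLM receives the retrieved information is as a grounded prompt that combines the documents with the user's query. The sketch below assumes hypothetical names (`build_prompt`, `SYSTEM_INSTRUCTIONS`) and an arbitrary character budget, and only assembles the prompt text; the call to an actual model is left out because the diagram does not name one.

```python
from dataclasses import dataclass


@dataclass
class Document:
    source: str
    title: str
    text: str


# Hypothetical instructions; they tell the model to recommend, not act.
SYSTEM_INSTRUCTIONS = (
    "You are an operations assistant for an industrial facility. "
    "Answer using only the context below and cite sources by their [n] tag. "
    "Recommend actions; do not execute them. A human operator must approve."
)


def build_prompt(query, context_docs, max_chars=2000):
    """Assemble a grounded prompt from retrieved documents and a query.

    Each document is tagged with a number and its source category so the
    answer can be traced back. Documents are added in order until the next
    one would push the context past max_chars.
    """
    lines = []
    used = 0
    for i, doc in enumerate(context_docs, start=1):
        entry = f"[{i}] ({doc.source}) {doc.title}: {doc.text}"
        if used + len(entry) > max_chars:
            break
        lines.append(entry)
        used += len(entry)
    context = "\n".join(lines) if lines else "(no relevant context found)"
    return f"{SYSTEM_INSTRUCTIONS}\n\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"
```

Tagging each snippet with its source category lets the chatbot's answer point back to the specific manual, procedure, or alert it relied on.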

4. Final Output and Control

- Dashboard for data visualization and monitoring

- AI chatbot for real-time operational assistance

- Operator Control: The bottom section shows checkmark (✓) and X-mark (✗) buttons along with an operator icon, indicating that final decision-making authority remains with human operators
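The operator-control principle can be expressed as an approval gate: AI recommendations are held until an operator accepts (✓) or rejects (✗) them, and only approved actions are passed on for execution. This is a minimal sketch under assumed names (`ApprovalGate`, `Recommendation`, `Decision`) and an assumed audit-log format, not the system's documented design.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Callable


class Decision(Enum):
    APPROVED = "approved"  # operator pressed the checkmark (✓)
    REJECTED = "rejected"  # operator pressed the X-mark (✗)


@dataclass
class Recommendation:
    action: str
    rationale: str


@dataclass
class ApprovalGate:
    """Holds AI recommendations until a human operator decides on each one.

    Nothing runs automatically: execute() is called only for approved
    recommendations, and every decision is appended to the audit log.
    """
    execute: Callable[[str], str]
    audit_log: list = field(default_factory=list)

    def review(self, rec, operator, decision):
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "operator": operator,
            "action": rec.action,
            "decision": decision.value,
        })
        if decision is Decision.APPROVED:
            return self.execute(rec.action)
        return None  # rejected: the action is never executed
```

Logging rejections as well as approvals supports accountability, since the audit log records which operator decided what and when.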

System Characteristics

This system represents a smart factory solution that integrates AI into traditional industrial operations, covering everything from real-time data monitoring to the use of operational manuals.

The key principle is that while AI provides comprehensive analysis and recommendations, final operational decisions and approvals still rest with human operators. This is represented by the operator icon and the approval/rejection buttons at the bottom of the diagram.

This demonstrates a realistic and desirable AI operational model that emphasizes safety, accountability, and the importance of human judgment in unpredictable situations.

With Claude