Technical Analysis: AI Load & Weak Grid Interaction

The integration of massive AI workloads into a Weak Grid (Short-Circuit Ratio, SCR < 3) creates a high-risk environment in which electrical Transients can escalate into system-wide failures.
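SCR is commonly computed as the short-circuit capacity (MVA) at the point of interconnection divided by the rated power (MW) of the connected load. The sketch below illustrates that ratio. The "very weak" band below 2 is a common convention assumed here, and the example campus size and bus strength are hypothetical.

```python
def short_circuit_ratio(s_sc_mva: float, p_load_mw: float) -> float:
    """SCR = short-circuit capacity at the interconnection / rated load power."""
    if p_load_mw <= 0:
        raise ValueError("rated load power must be positive")
    return s_sc_mva / p_load_mw


def classify_grid(scr: float) -> str:
    # SCR < 3 is the weak-grid cut-off used in this post;
    # the < 2 "very weak" band is an assumed, commonly used convention.
    if scr < 2.0:
        return "very weak"
    if scr < 3.0:
        return "weak"
    return "strong"


# Hypothetical: a 500 MW AI campus on a bus with 1,200 MVA of short-circuit capacity.
scr = short_circuit_ratio(1200.0, 500.0)
print(f"SCR = {scr:.1f} -> {classify_grid(scr)}")  # SCR = 2.4 -> weak
```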

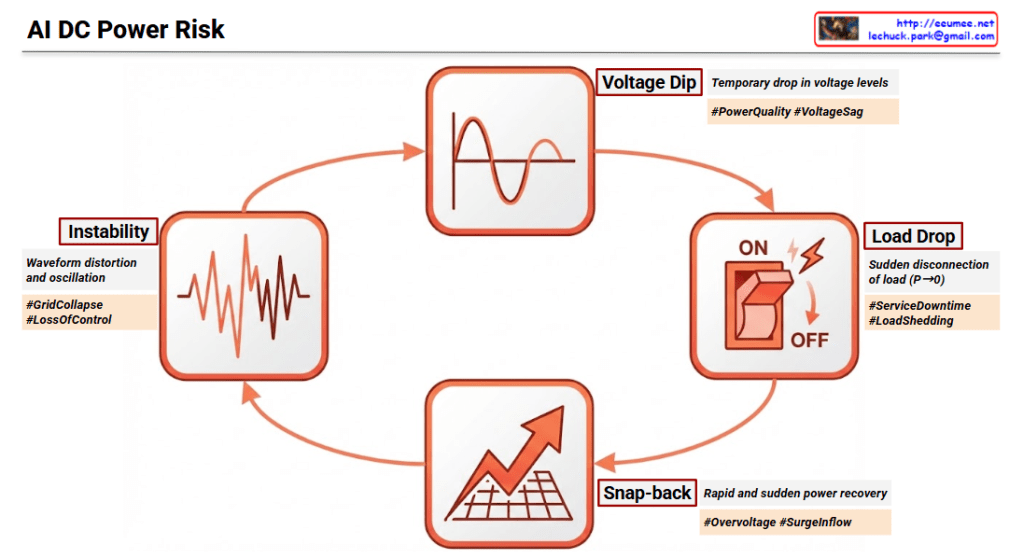

1. Voltage Dip (Transient Voltage Sag)

- Mechanism: AI workloads are characterized by Step Power Changes and Pulse-type Profiles. When these massive loads ramp up simultaneously, the high impedance of a weak grid turns the sudden jump in current into an immediate Transient Voltage Sag.

- Impact: This compromises Power Quality, leading to potential malfunctions in voltage-sensitive AI hardware.
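A quasi-static sketch shows why high impedance matters. It models the grid as a 1.0 pu source behind a purely inductive reactance X = 1/SCR (per unit on the load's rated base) and solves for the voltage at the point of common coupling (PCC) when the load draws P + jQ. It ignores resistance and converter control dynamics, so it understates a real transient sag. The function name and parameters are hypothetical.

```python
import math
from typing import Optional


def pcc_voltage_pu(p_pu: float, scr: float, q_pu: float = 0.0, e_pu: float = 1.0) -> Optional[float]:
    """Quasi-static PCC voltage (pu) for a load P + jQ fed through X = 1/SCR.

    Illustrative assumptions: lossless grid (R = 0), stiff source E, all values
    per unit on the load's rated base. Negative q_pu = reactive injection.
    """
    x = 1.0 / scr
    # From E = V + jX * conj(S) / V with V as the angle reference:
    # V^4 - (E^2 - 2XQ) V^2 + X^2 (P^2 + Q^2) = 0, a quadratic in u = V^2.
    b = e_pu**2 - 2.0 * x * q_pu
    disc = b**2 - 4.0 * x**2 * (p_pu**2 + q_pu**2)
    if disc < 0:
        return None  # no operating point: beyond the nose of the PV curve
    u = (b + math.sqrt(disc)) / 2.0  # upper (stable) branch
    return math.sqrt(u)


for scr in (10.0, 3.0, 1.5):
    v_loaded = pcc_voltage_pu(1.0, scr)
    if v_loaded is None:
        print(f"SCR={scr}: no solution (voltage collapse)")
    else:
        print(f"SCR={scr}: V={v_loaded:.3f} pu, sag={100 * (1 - v_loaded):.1f}%")
```

Under these assumptions, the same 1 pu step barely moves the voltage at SCR = 10 and sags it noticeably at SCR = 3. At SCR = 1.5 it has no solution at all, because unity-power-factor transfer is limited to SCR/2 pu.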

2. Load Drop (Transient Load Rejection)

- Mechanism: If the voltage sag is deeper or lasts longer than the protection settings allow, protection systems trigger Load Rejection and the power drawn from the grid plummets to zero (P → 0).

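- Impact: This results in Service Downtime and creates a sudden surplus of generation over load in the grid. Unlike deliberate Load Shedding by the grid operator, this loss of load is uncontrolled, and the surplus pushes frequency and voltage upward.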
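The Load Rejection step can be illustrated with a definite-time undervoltage element. Once the voltage stays below a pickup level for a set delay, the element trips and the load is disconnected. This is a minimal sketch. The 0.8 pu pickup and 50 ms delay are hypothetical placeholders, not settings from any real facility or standard.

```python
from typing import List, Optional, Sequence


def undervoltage_trip_index(
    voltage_pu: Sequence[float],
    dt_s: float,
    v_min_pu: float = 0.8,   # hypothetical pickup level
    delay_s: float = 0.05,   # hypothetical definite-time delay
) -> Optional[int]:
    """Return the sample index at which the element trips, or None if it never does."""
    needed = max(1, round(delay_s / dt_s))  # consecutive low samples required
    count = 0
    for k, v in enumerate(voltage_pu):
        if v < v_min_pu:
            count += 1
            if count >= needed:
                return k
        else:
            count = 0  # voltage recovered before the delay expired: reset the timer
    return None


def grid_side_load(p_mw: float, voltage_pu: Sequence[float], dt_s: float) -> List[float]:
    """Load seen by the grid: p_mw until the trip, then 0 (the trip latches, P -> 0)."""
    trip = undervoltage_trip_index(voltage_pu, dt_s)
    return [p_mw if trip is None or k < trip else 0.0 for k in range(len(voltage_pu))]


# Hypothetical 10 ms samples: a 100 ms sag to 0.7 pu, then recovery.
sag = [1.0] * 5 + [0.7] * 10 + [1.0] * 5
load = grid_side_load(300.0, sag, dt_s=0.01)
print(undervoltage_trip_index(sag, 0.01), load[-1])  # prints: 9 0.0
```

The 300 MW that disappears at the trip is the generation surplus described above. The load stays at zero after the voltage recovers, which is the downtime.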

3. Snap-back (Transient Recovery & Inrush)

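- Mechanism: When the load trips off, the voltage that the load current had been pulling down snaps back, and in a weak grid this recovery transient can overshoot. When the load is later re-engaged, it draws a fresh surge of current.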

- Impact: This phase often sees Overvoltage (Overshoot) and a large Inrush Current, which place extreme electrical stress on power components and can damage sensitive circuitry.
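The overshoot can be illustrated by treating the voltage recovery after the trip as the step response of an underdamped second-order system. That model is an assumption made for illustration, since real recovery depends on network resonances and converter controls. `zeta` (damping ratio) and `wn` (natural frequency, rad/s) are hypothetical parameters. The peak follows the standard overshoot factor exp(-ζπ/√(1-ζ²)).

```python
import math


def overshoot_fraction(zeta: float) -> float:
    """Fractional overshoot of an underdamped second-order step response."""
    if not 0.0 < zeta < 1.0:
        raise ValueError("zeta must lie in (0, 1) for an underdamped response")
    return math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta**2))


def recovery_voltage(t: float, v_sag: float, v_final: float, zeta: float, wn: float) -> float:
    """Voltage t seconds after the trip, modelled as a second-order step from v_sag to v_final."""
    root = math.sqrt(1.0 - zeta**2)
    wd = wn * root  # damped oscillation frequency (rad/s)
    envelope = math.exp(-zeta * wn * t)
    return v_final - (v_final - v_sag) * envelope * (math.cos(wd * t) + zeta / root * math.sin(wd * t))


# Hypothetical: recovery from a 0.93 pu sag toward 1.0 pu, lightly damped, 5 Hz natural frequency.
v_sag, v_final, zeta, wn = 0.93, 1.0, 0.2, 2 * math.pi * 5
v_peak = v_final + (v_final - v_sag) * overshoot_fraction(zeta)
t_peak = math.pi / (wn * math.sqrt(1.0 - zeta**2))  # first peak of the damped response
print(f"peak {v_peak:.3f} pu at {1000 * t_peak:.0f} ms")
```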

4. Instability (Dynamic & Harmonic Oscillation)

- Mechanism: Repeated sags and surges excite Dynamic Oscillation. The phase-locked loops (PLLs) that grid-following power converters use to track the grid can lose synchronization with the grid voltage and frequency.

- Impact: The result is severe Waveform Distortion, Loss of Control, and, in the worst case, a total Grid Collapse (Blackout).
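Whether such an oscillation dies out can be judged from its damping ratio. A common way to estimate it from a recorded waveform is the logarithmic decrement of successive peaks. The sketch below assumes the peak amplitudes (measured from the steady-state value, one per cycle) have already been extracted. The 5% minimum-damping threshold is an assumed criterion, not one taken from this post.

```python
import math
from typing import Sequence


def damping_ratio_from_peaks(peaks: Sequence[float]) -> float:
    """Estimate the damping ratio from successive same-sign peak amplitudes (one per cycle)."""
    if len(peaks) < 2 or any(p <= 0 for p in peaks):
        raise ValueError("need at least two positive peak amplitudes")
    cycles = len(peaks) - 1
    delta = math.log(peaks[0] / peaks[-1]) / cycles  # average log decrement per cycle
    return delta / math.sqrt(4.0 * math.pi**2 + delta**2)


def classify_damping(zeta: float, min_zeta: float = 0.05) -> str:
    # min_zeta is an assumed acceptance threshold, for illustration only.
    if zeta <= 0.0:
        return "undamped (sustained or growing)"
    if zeta < min_zeta:
        return "poorly damped"
    return "adequately damped"


# Hypothetical peak amplitudes (pu deviation) read off two recordings.
decaying = [0.10, 0.06, 0.036]
growing = [0.05, 0.06, 0.072]
for name, peaks in (("decaying", decaying), ("growing", growing)):
    zeta = damping_ratio_from_peaks(peaks)
    print(f"{name}: zeta={zeta:.3f} -> {classify_damping(zeta)}")
```

A negative estimate means each swing is larger than the last. That is the non-damped case that ends in loss of control.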

Key Insight (NERC 2025 Warning)

The North American Electric Reliability Corporation (NERC) warns that the simultaneous reduction (sudden disconnection) of voltage-sensitive loads, together with the rise of periodic, pulse-like AI workloads, is a primary driver of modern grid instability.

Summary

- AI Load Dynamics: Rapid step-load changes in AI data centers act as a “shock” to weak grids, triggering a self-reinforcing cycle of electrical failure.

- Transient Progression: The cycle moves from a Voltage Sag to a Load Trip, followed by a damaging Power Surge, and eventually to undamped Oscillations.

- Strategic Necessity: To break this cycle, data centers must implement advanced solutions such as Grid-forming Inverters or fast-acting Battery Energy Storage Systems (BESS) that provide synthetic inertia and voltage support.
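The benefit of synthetic inertia can be sketched with a single-area swing equation, (2H/f0)·dΔf/dt = ΔP - (K/f0)·Δf. Here ΔP is the per-unit power imbalance (positive for a generation surplus such as a sudden AI load trip). A fast BESS adds virtual inertia H_v to H and a droop gain to K. This first-order model treats all primary response as instantaneous and proportional, with no governor or converter lags. Every number in the example is hypothetical.

```python
from typing import NamedTuple


class FrequencyResponse(NamedTuple):
    rocof_hz_per_s: float  # initial rate of change of frequency
    steady_dev_hz: float   # settled frequency deviation
    tau_s: float           # time constant of the exponential approach


def frequency_response(
    delta_p_pu: float,
    h_sys_s: float,
    k_sys_pu: float,
    h_virtual_s: float = 0.0,
    k_bess_pu: float = 0.0,
    f0_hz: float = 60.0,
) -> FrequencyResponse:
    """Closed-form step response of (2H/f0) d(df)/dt = dP - (K/f0) df.

    H = h_sys_s + h_virtual_s and K = k_sys_pu + k_bess_pu (illustrative model).
    A positive delta_p_pu is a generation surplus, so frequency rises.
    """
    h = h_sys_s + h_virtual_s
    k = k_sys_pu + k_bess_pu
    return FrequencyResponse(
        rocof_hz_per_s=f0_hz * delta_p_pu / (2.0 * h),
        steady_dev_hz=f0_hz * delta_p_pu / k,
        tau_s=2.0 * h / k,
    )


# Hypothetical low-inertia 60 Hz system that suddenly loses 5% of its load.
without_bess = frequency_response(0.05, h_sys_s=2.0, k_sys_pu=15.0)
with_bess = frequency_response(0.05, h_sys_s=2.0, k_sys_pu=15.0, h_virtual_s=2.0, k_bess_pu=10.0)
print(without_bess)
print(with_bess)
```

In this model, doubling the effective inertia halves the initial RoCoF, and the extra droop gain shrinks the settled deviation. Voltage support is a separate function, typically delivered through reactive-power injection.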

#PowerTransients #WeakGrid #AIDataCenter #GridStability #NERC2025 #VoltageSag #LoadShedding #ElectricalEngineering #AIInfrastructure #SmartGrid #PowerQuality

With Gemini