This image illustrates a data monitoring system for an AI data center server room. Titled “Data in AI DC Server Room,” it depicts the relationships between key elements being monitored in the data center.

The system consists of four main components, each with detailed metrics:

- GPU Workload – Right center

- Computing Load: GPU utilization rate (%) and type of computational tasks (training vs. inference)

- Power Consumption: Real-time power consumption of each GPU

– Example: NVIDIA H100 GPU consumes up to 700W

- Workload Pattern: Periodicity of workload (peak/off-peak times) and predictability

- Memory Usage: GPU memory usage patterns (e.g., HBM3 memory bandwidth usage)

- Power Infrastructure – Left

- Power Usage: Real-time power output and efficiency of UPS, PDU, and transformers

- Power Quality: Voltage, frequency stability, and power loss rate

- Power Capacity: Types and proportions of supplied energy, ensuring sufficient power availability for current workload operations

- Cooling System – Right

- Cooling Device Status: Air-cooling fan speed (RPM), liquid cooling pump flow rate (LPM), and coolant temperature (°C)

- Environmental Conditions: Data center internal temperature, humidity, air pressure, and hot/cold zone temperatures – critical for server operations

- Cooling Efficiency: Power Usage Effectiveness (PUE) and proportion of power consumed by the cooling system

- Server/Rack – Top center

- Rack Power Density: Power consumption per rack (kW) – Example: GPU server racks range from 30 to 120 kW

- Temperature Profile: Temperature (°C) of GPUs, CPUs, memory modules, and heat distribution

- Server Status: Operational state of servers (active/standby) and workload distribution status

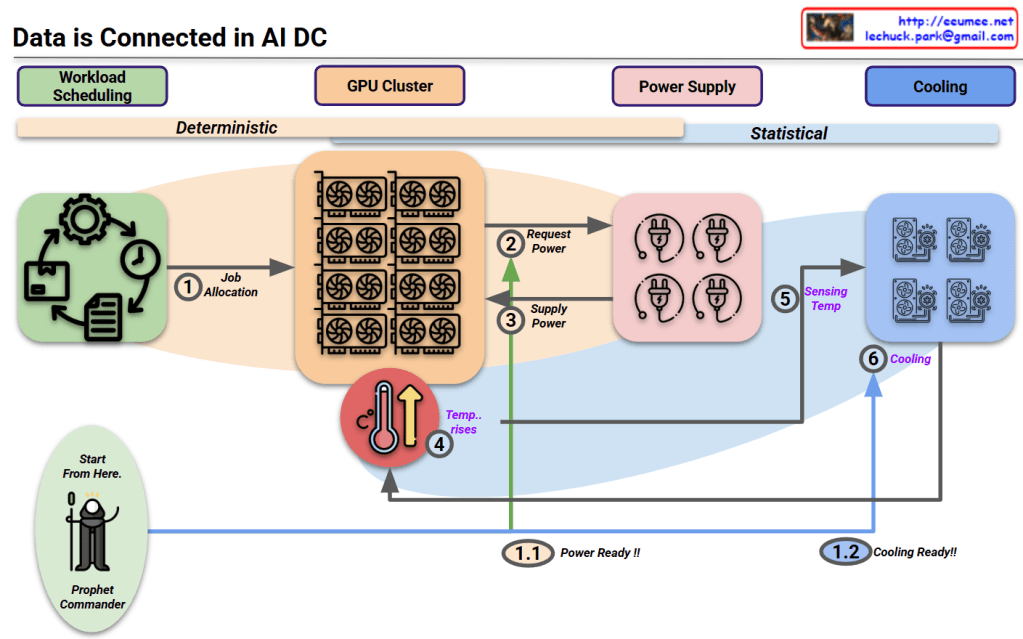

The workflow sequence indicated at the bottom of the diagram represents:

- ① GPU WORK: Initial execution of AI workloads – GPU computational tasks begin, generating system load

- ② with POWER USE: Increased power supply for GPU operations – Power demand increases with GPU workload, and power infrastructure responds accordingly

- ③ COOLING WORK: Cooling processes activated in response to heat generation

- Sensing: Temperature sensors detect server and rack thermal conditions, monitoring hot/cold zone temperature differentials

- Analysis: Analysis of collected temperature data, determining cooling requirements

- Action: Adjustment of cooling equipment (fan speed, coolant flow rate, etc. automatically regulated)

- ④ SERVER OK: Maintenance of normal server operation through proper power supply and cooling – Temperature and power remain stable, allowing GPU workloads to continue running under optimal conditions

The arrows indicate data flow and interrelationships between systems, showing connections from power infrastructure to servers and from cooling systems to servers. This integrated system enables efficient and stable data center operation by detecting increased power demand and heat generation from GPU workloads, and adjusting cooling systems in real-time accordingly.

With Claude