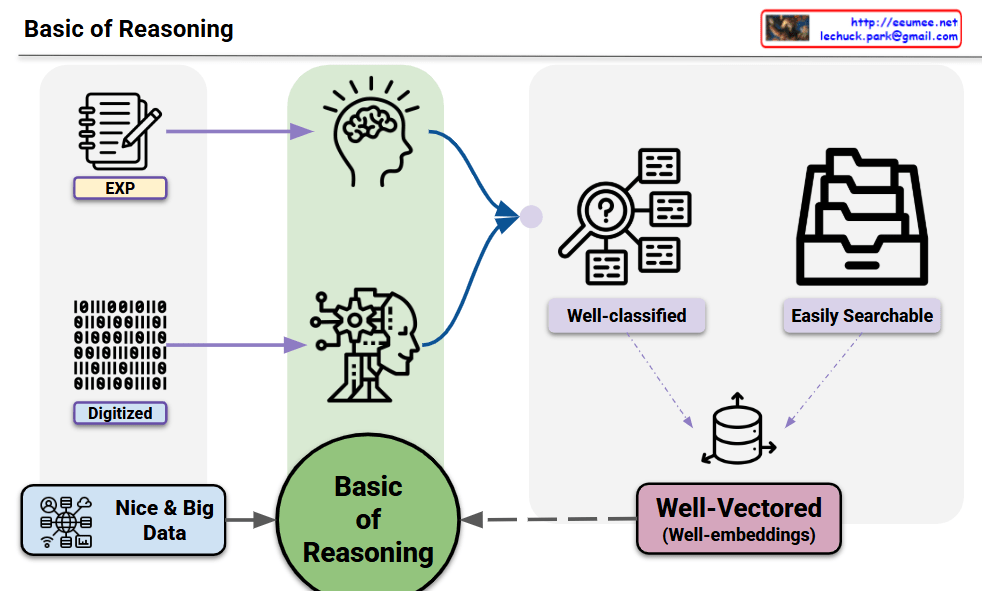

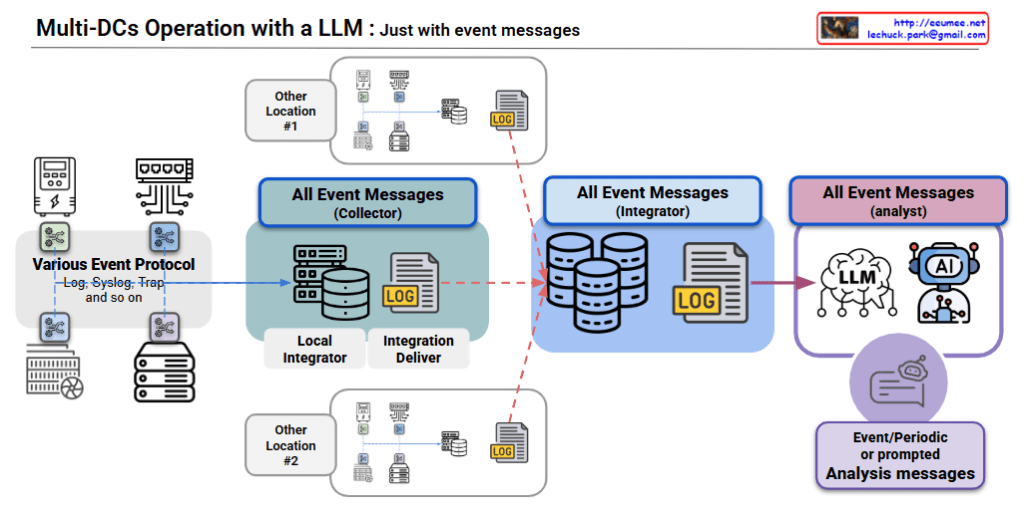

This diagram illustrates a multi-data center operations architecture that uses an LLM (Large Language Model) to analyze event messages.

Key Components

1. Data Collection Layer (Left Side)

- Collects data from various sources through multiple event protocols (Log, Syslog, Trap, etc.)

- Gathers event data from diverse servers and network equipment
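As a rough illustration of what this layer does, the sketch below normalizes events from three protocols into one common record. The `EventMessage` type, the function names, the "LEVEL: message" log convention, and the trap-to-severity mapping are all assumptions made for the example and do not come from the diagram. Only the syslog rule (PRI = facility * 8 + severity, per RFC 5424) is a standard.

```python
from dataclasses import dataclass

# Syslog severity names indexed by code 0-7 (RFC 5424).
SYSLOG_SEVERITIES = ("emergency", "alert", "critical", "error",
                     "warning", "notice", "info", "debug")


@dataclass(frozen=True)
class EventMessage:
    """Hypothetical common shape for every collected event."""
    source: str      # server or network device that emitted the event
    protocol: str    # "log", "syslog", or "trap"
    severity: str    # one of SYSLOG_SEVERITIES
    text: str


def from_syslog(source: str, pri: int, text: str) -> EventMessage:
    # PRI encodes facility * 8 + severity, so the remainder is the severity code.
    return EventMessage(source, "syslog", SYSLOG_SEVERITIES[pri % 8], text)


def from_log_line(source: str, line: str) -> EventMessage:
    # Assumed convention: application log lines look like "LEVEL: message".
    level, sep, rest = line.partition(":")
    if sep and level.strip().lower() in SYSLOG_SEVERITIES:
        return EventMessage(source, "log", level.strip().lower(), rest.strip())
    # Lines without a recognizable level default to "info".
    return EventMessage(source, "log", "info", line.strip())


def from_trap(source: str, trap_name: str) -> EventMessage:
    # Assumed mapping from trap name to severity; a real deployment defines its own.
    severity = {"linkDown": "error", "linkUp": "notice"}.get(trap_name, "warning")
    return EventMessage(source, "trap", severity, trap_name)
```

Normalizing at the edge means the later stages only ever deal with one record shape, whatever protocol the device speaks.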

2. Event Message Processing (Center)

- Collector: Consists of the Local Integrator and Integration Deliver components, which process incoming event messages

- Integrator: Manages and consolidates event messages in a multi-database environment

- Analyst: Utilizes AI/LLM to analyze collected event messages
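The hand-off between Collector and Integrator might look something like the following minimal sketch. It assumes the Collector buffers events and delivers them in batches, and that the Integrator drops exact duplicates before storing them. The class names echo the diagram, but the methods, the `batch_size` parameter, and the dictionary fields are hypothetical.

```python
class Integrator:
    """Consolidates event messages delivered by collectors (in-memory stand-in)."""

    def __init__(self):
        self._seen = set()
        self.events = []

    def receive(self, batch):
        """Store events not seen before; return how many were accepted."""
        accepted = 0
        for event in batch:
            key = (event["location"], event["source"],
                   event["timestamp"], event["text"])
            if key in self._seen:
                continue  # exact duplicate, already consolidated
            self._seen.add(key)
            self.events.append(event)
            accepted += 1
        return accepted


class Collector:
    """Buffers events at one location and delivers them in batches."""

    def __init__(self, location, integrator, batch_size=100):
        self.location = location
        self.integrator = integrator
        self.batch_size = batch_size
        self._buffer = []

    def collect(self, source, text, timestamp):
        self._buffer.append({"location": self.location, "source": source,
                             "text": text, "timestamp": timestamp})
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        """Deliver whatever is buffered, then start a fresh buffer."""
        if self._buffer:
            self.integrator.receive(self._buffer)
            self._buffer = []
```

The Analyst would then read from `Integrator.events` (or whatever store replaces it) and never talk to collectors directly.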

3. Multi-Location Support

- Other Location #1 and #2 maintain identical structures for event data collection and processing

- All location data is consolidated for centralized analysis
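One possible way to consolidate the per-location streams into a single timeline is a k-way merge, sketched below with the standard library's `heapq.merge`. The example assumes each location's events are already sorted by a numeric `timestamp` field (for instance epoch seconds). The `consolidate` function and the field names are illustrative, not part of the described system.

```python
import heapq


def consolidate(streams):
    """Merge per-location event lists into one timeline ordered by timestamp.

    `streams` maps a location name to a list of event dicts that is
    already sorted by "timestamp" (an assumption of this sketch).
    """
    tagged = (
        [{**event, "location": location} for event in events]
        for location, events in streams.items()
    )
    # heapq.merge lazily combines several sorted inputs into one sorted output.
    return list(heapq.merge(*tagged, key=lambda event: event["timestamp"]))


timeline = consolidate({
    "main": [{"timestamp": 1, "text": "fan failure"},
             {"timestamp": 5, "text": "temperature high"}],
    "other-1": [{"timestamp": 3, "text": "link down"}],
})
```

Tagging each event with its location before merging keeps the origin visible once everything sits in one timeline.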

4. AI-Powered Analysis (Right Side)

- LLM: Intelligently analyzes all collected event messages

- Event/Periodic or Prompted Analysis Messages: Analysis results produced when an event occurs, on a schedule, or on request, and delivered as automated alerts and reports
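The analysis modes above could be wired up roughly as follows. This is a sketch under stated assumptions: the model is passed in as a plain callable that takes a prompt string and returns text, so no particular LLM API is implied. The prompt wording, the function names, and the severity threshold are all hypothetical.

```python
from typing import Callable, Optional

# Assumed set of severities that trigger an immediate analysis.
SEVERE = {"emergency", "alert", "critical"}


def build_prompt(events, question: Optional[str] = None) -> str:
    """Render events as text and append either the operator's question or a default task."""
    lines = [
        f"[{e['location']}] {e['source']} {e['severity']}: {e['text']}"
        for e in events
    ]
    task = question or "Summarize notable patterns and likely root causes."
    return "Event messages:\n" + "\n".join(lines) + "\n\nTask: " + task


def analyze(events, llm: Callable[[str], str], question: Optional[str] = None) -> str:
    """Periodic analysis (no question) or prompted analysis (with a question)."""
    return llm(build_prompt(events, question))


def on_event(event, recent, llm: Callable[[str], str]) -> Optional[str]:
    """Event-triggered analysis: only severe events invoke the model."""
    if event["severity"] in SEVERE:
        return analyze(recent + [event], llm)
    return None
```

A scheduler would call `analyze(events, llm)` for the periodic case, while an operator's question goes through the same function via the `question` argument.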

System Characteristics

This architecture is an IT operations management approach that monitors and manages multiple data centers through their event messages. An LLM analyzes large volumes of log and event data and turns them into operational insights for data center management.

The key advantage is a single, unified way of handling diverse event streams across multiple locations, with the LLM used for pattern recognition and automated response generation.

With Claude