Comprehensive Interpretation: Large Scale Network Driven Design

This document outlines the technical blueprint for “Network Co-Design,” explaining how network architecture must evolve to support massive AI workloads (specifically LLMs) by balancing Bandwidth and Latency.

Here is the breakdown from an architect’s perspective:

1. Structural Efficiency: MPFT & MRFT (The “Green” Section)

This section answers the question: “How do we efficiently cluster thousands of GPUs?”

- Massive Scalability: It proposes a Multi-Plane Fat-Tree (MPFT) structure using 400G InfiniBand (IB) switches, theoretically capable of scaling up to 16,384 GPUs. This mirrors the scale of NVIDIA SuperPods.

- Multi-Rail Architecture (MRFT): MRFT utilizes two distinct network planes. Think of this as adding a second level to a highway to double the lanes. It achieves higher bandwidth efficiency compared to traditional 2-tier Fat-Tree designs.

- Software Optimization (NCCL): The hardware (MRFT) is fully utilized by NCCL (NVIDIA Collective Communications Library). NCCL acts as the traffic controller, ensuring the expanded physical bandwidth is saturated efficiently.

- Latency Reduction (Packet Striping): The

QP & Prioritysection highlights a critical mechanism where a single Queue Pair (QP) stripes packets across multiple ports simultaneously. This parallel transmission significantly reduces latency. - Current Bottlenecks: The design acknowledges limitations, such as InfiniBand’s lack of native support for out-of-order packet placement and the time overhead incurred during inter-plane communication (requires internal forwarding).

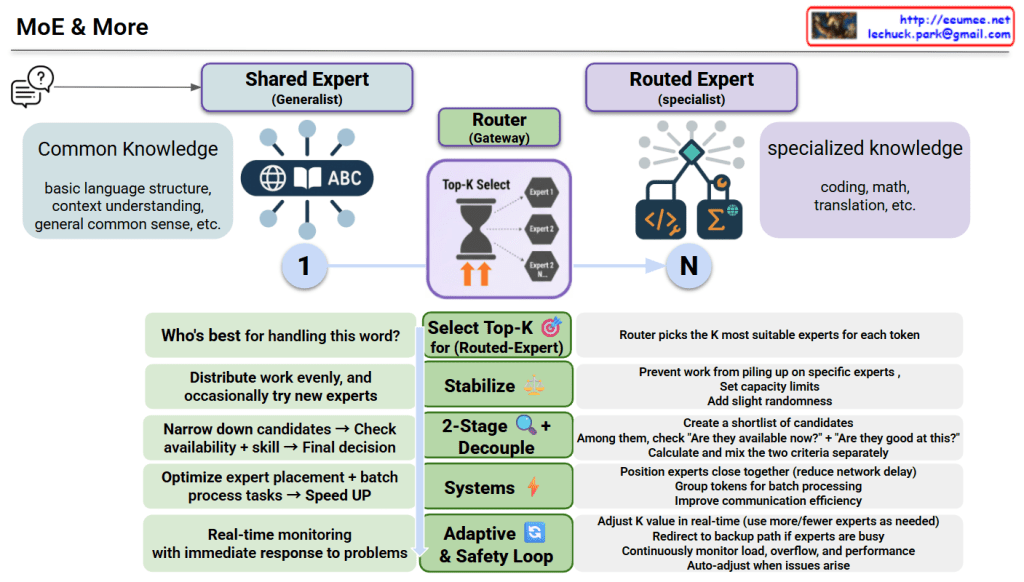

2. The Core of Performance: Low Latency & MoE (The “Blue” Section)

This section answers the question: “Why is low latency (and InfiniBand) non-negotiable?”

- Sensitivity of MoE Models: Modern Mixture of Experts (MoE) models rely heavily on “Expert Parallelism,” which triggers frequent All-to-all communication. If the network lags even by hundreds of microseconds, the entire system performance degrades fataly.

- RoCE vs. InfiniBand: The document draws a clear line. While RoCE (RDMA over Converged Ethernet) is cost-effective, InfiniBand (IB) is the superior choice for low-latency environments essential for AI training/inference.

- Surprising Latency Metrics: It highlights a specific scenario: for small data transfers (e.g., 64 Bytes), InfiniBand can be faster than intra-node NVLink (specifically during Cross Leaf communication), proving its dominance in minimizing end-to-end latency.

Summary

- Scalable Architecture: The Multi-Plane (MPFT) and Multi-Rail (MRFT) Fat-Tree designs, optimized with NCCL, maximize bandwidth efficiency to support massive clusters of up to 16k GPUs.

- Latency Criticality: Modern AI workloads like Mixture of Experts (MoE) are hypersensitive to delay, making InfiniBand the preferred choice over RoCE due to its superior handling of All-to-all communication.

- Co-Design Strategy: Achieving peak AI performance requires a “Network Co-Design” approach where high-speed hardware (400G IB) and software protocols (Packet Striping) are tightly integrated to minimize end-to-end latency.

#AINetworking #DataCenterArchitecture #InfiniBand #NCCL #LowLatency #HPC #GPUScaling #MoE #NetworkDesign #AIInfrastructure #DeepseekV3

WIth Gemini