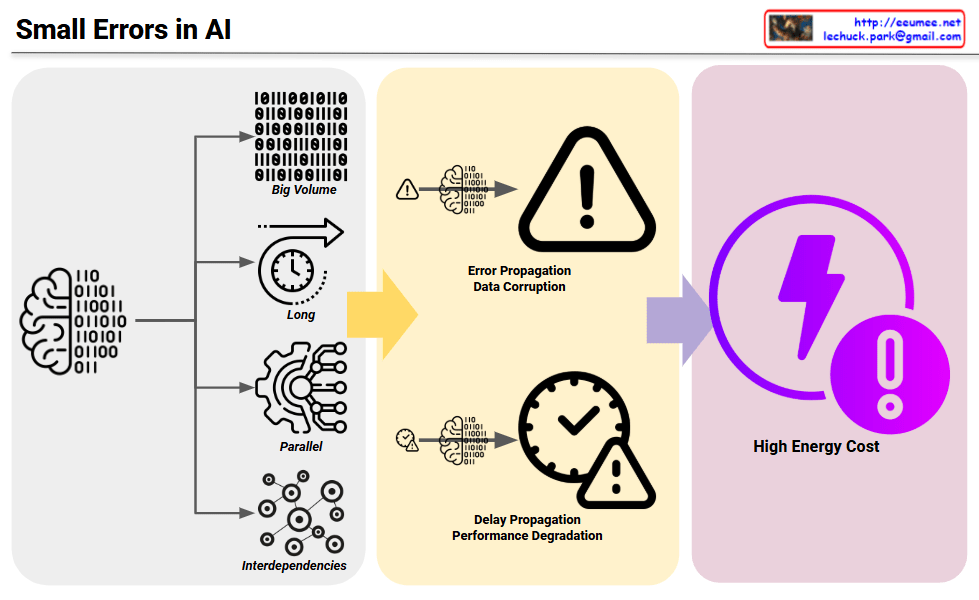

This diagram presents a framework that defines the essence of AI LLMs as “Massive Simple Parallel Computing” and outlines the issues and challenges that follow from that definition.

Core Definition of AI LLM: “Massive Simple Parallel Computing”

- Massive: Enormous scale with billions of parameters

- Simple: Fundamentally simple computational operations (matrix multiplications, etc.)

- Parallel: Architecture capable of simultaneous parallel processing

- Computing: All of this implemented through computational processes
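To make the definition concrete, here is a minimal sketch in plain Python of one matrix-vector multiplication, the kind of simple operation the definition refers to. The function names `dense_layer` and `multiply_add_count` and the tiny example values are illustrative assumptions, not something taken from the diagram.

```python
def dense_layer(weights, inputs):
    """Multiply a weight matrix by an input vector.

    Each output value is an independent dot product, so every row could be
    computed at the same time on parallel hardware.
    """
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def multiply_add_count(rows, cols):
    """Number of multiply-add steps in one rows x cols matrix-vector product."""
    return rows * cols


# Hypothetical toy values, chosen only to keep the arithmetic readable.
weights = [[1, 2, 3],
           [4, 5, 6]]
inputs = [1, 0, -1]

outputs = dense_layer(weights, inputs)     # [-2, -2]
small = multiply_add_count(2, 3)           # 6
large = multiply_add_count(4096, 4096)     # 16777216
```

The operation itself never gets more complicated than multiply and add. What changes is the count: the same loop that takes 6 steps for the toy matrix takes about 16.8 million for a single 4096 x 4096 matrix.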

Core Issues Arising from This Essential Nature

Big Issues:

- Black-box and unexplainable: Incomprehensibility due to massive and complex interactions

- Energy-intensive: Enormous energy consumption inevitably arising from massive parallel computing
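The link between scale and energy can be sketched with back-of-the-envelope arithmetic. The snippet below assumes the common rule of thumb that a dense model performs roughly two operations (one multiply, one add) per parameter for each token in a forward pass. The parameter count, token count, and energy-per-operation figure are hypothetical placeholders, not measurements, and the estimate ignores memory movement, cooling, and training.

```python
def forward_ops_per_token(num_parameters):
    # Rule-of-thumb assumption: one multiply and one add per parameter.
    return 2 * num_parameters


def energy_joules(num_parameters, num_tokens, joules_per_op):
    # Total compute energy = operations per token * tokens * energy per operation.
    return forward_ops_per_token(num_parameters) * num_tokens * joules_per_op


# Hypothetical values, chosen only to show how the estimate scales.
params = 1_000_000_000      # a one-billion-parameter model
tokens = 1_000              # tokens processed
joules_per_op = 1e-12       # assumed energy per operation, not a measured figure

baseline = energy_joules(params, tokens, joules_per_op)
doubled = energy_joules(2 * params, tokens, joules_per_op)
ratio = doubled / baseline  # doubling the parameters doubles the estimate
```

Under these assumptions the energy estimate grows linearly with both model size and the number of tokens processed, which is why the issue follows directly from the "massive" part of the definition.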

Essential Requirements Therefore Needed

Very Required:

- Verification: Methods to ensure reliability of results given the black-box characteristics

- Optimization: Approaches to simultaneously improve energy efficiency and performance

The Ultimate Question: “By What?”

How can we solve all these requirements?

In other words, the framework asks which specific solutions and approaches can overcome the problems inherent in the essential characteristics of current LLMs. It is a compressed view of the core challenges for next-generation AI technology development.

The diagram shows how the defining characteristics of LLMs lead directly to significant challenges, which in turn demand specific capabilities and finally raise the question of how to implement them.

With Claude