Numbers about Cooling – System Analysis

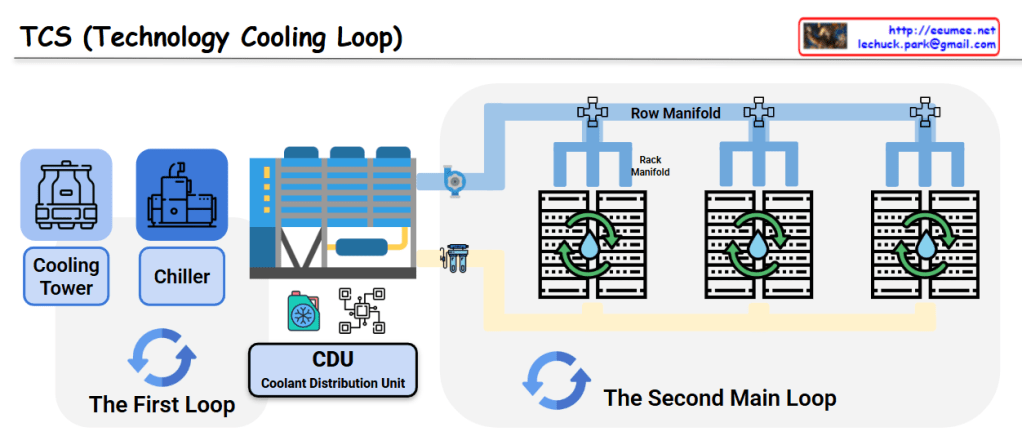

This diagram illustrates the thermodynamic principles and calculation methods for cooling systems, particularly relevant for data center and server room thermal management.

System Components

Left Side (Heat Generation)

- Power consumption device (Power kW)

- Time element (Energy in kWh: power in kW sustained over time in hours)

- Heat-generating source (appears to be server/computer systems)
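The link between the power and time labels can be made concrete with a small calculation. This is a minimal sketch that assumes a constant load and that all electrical power ends up as heat; the 10 kW, 24-hour figures and the function names are hypothetical.

```python
# Sketch: turning a constant electrical load into heat energy over a period.
# Assumption: the load is constant and all electrical power ends up as heat.


def energy_kwh(power_kw: float, hours: float) -> float:
    """Energy in kWh drawn by a constant load of power_kw over `hours` hours."""
    return power_kw * hours


def kwh_to_mj(kwh: float) -> float:
    """Convert kWh to MJ: 1 kWh = 1000 J/s x 3600 s = 3.6 MJ."""
    return kwh * 3.6


if __name__ == "__main__":
    # Hypothetical 10 kW rack running for 24 hours.
    daily_kwh = energy_kwh(10.0, 24.0)
    print(f"{daily_kwh:.0f} kWh = {kwh_to_mj(daily_kwh):.0f} MJ of heat to remove")
```

Note that the cooling system is sized in kW (a rate), which matches the equipment's power draw; the kWh figure is the total heat removed over the period.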

Right Side (Cooling)

- Cooling system (Cooling kW – Remove ‘Heat’)

- Cooling control system

- Coolant circulation system

Core Formula: Q = m×Cp×ΔT

Heat Generation Side (Red Box)

- Q: Heat flow rate in W (J/s), equal to the equipment's power consumption (1 kW = 1000 J/s)

- V: Volumetric air flow rate (m³/s); the mass flow rate in the core formula is m = ρ×V, so on the air side Q = ρ×V×Cp×ΔT

- ρ: Air density (approximately 1.2 kg/m³)

- Cp: Specific heat capacity of air at constant pressure (approximately 1005 J/(kg·K))

- ΔT: Air temperature rise across the equipment (K)
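Solving the air-side relationship Q = ρ×V×Cp×ΔT for V gives the airflow a given load requires. The sketch below uses the approximate ρ and Cp values listed above; the 10 kW load, the 10 K temperature rise, and the function name are illustrative assumptions.

```python
# Air side: Q = rho * V * Cp * dT, solved for the volumetric flow V.
RHO_AIR = 1.2      # kg/m^3, approximate value from the text
CP_AIR = 1005.0    # J/(kg*K), approximate value from the text


def required_airflow_m3s(heat_kw: float, delta_t_k: float) -> float:
    """Volumetric airflow (m^3/s) that carries heat_kw with an air temperature rise of delta_t_k."""
    q_watts = heat_kw * 1000.0                  # kW -> W (J/s)
    mass_flow = q_watts / (CP_AIR * delta_t_k)  # kg/s, from Q = m * Cp * dT
    return mass_flow / RHO_AIR                  # m = rho * V, so V = m / rho


if __name__ == "__main__":
    # Hypothetical 10 kW load with a 10 K air temperature rise.
    v = required_airflow_m3s(10.0, 10.0)
    print(f"{v:.3f} m^3/s ({v * 3600:.0f} m^3/h)")
```

For these inputs the result is roughly 0.83 m³/s; halving the allowed temperature rise doubles the required airflow.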

Cooling Side (Blue Box)

- Q: Cooling capacity (kW)

- m: Coolant mass flow rate (kg/s)

- Cp: Specific heat capacity of coolant (for water, approximately 4.2 kJ/(kg·K))

- ΔT: Coolant temperature rise between supply and return (K)
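On the coolant side the same formula, solved for m, gives the circulation rate needed to absorb a load. This sketch assumes water with Cp ≈ 4.2 kJ/(kg·K) and a density of about 1000 kg/m³; the 10 kW load, 5 K rise, and function names are hypothetical.

```python
# Sketch: coolant mass flow needed to absorb a heat load, from Q = m * Cp * dT.
CP_WATER_KJ = 4.2      # kJ/(kg*K), approximate value for water from the text
RHO_WATER = 1000.0     # kg/m^3, assumed (close to true for water near room temperature)


def required_coolant_flow_kgs(cooling_kw: float, delta_t_k: float,
                              cp_kj_per_kg_k: float = CP_WATER_KJ) -> float:
    """Mass flow (kg/s) that absorbs cooling_kw with a coolant temperature rise of delta_t_k.

    Units: kW / (kJ/(kg*K) * K) = (kJ/s) / (kJ/kg) = kg/s, so no conversion is needed.
    """
    return cooling_kw / (cp_kj_per_kg_k * delta_t_k)


def kgs_to_litres_per_min(mass_flow_kgs: float, density: float = RHO_WATER) -> float:
    """Convert a mass flow (kg/s) to a volumetric flow in litres per minute."""
    return mass_flow_kgs / density * 1000.0 * 60.0


if __name__ == "__main__":
    # Hypothetical 10 kW load with a 5 K supply-to-return temperature rise.
    m = required_coolant_flow_kgs(10.0, 5.0)
    print(f"{m:.3f} kg/s, about {kgs_to_litres_per_min(m):.1f} L/min")
```

For these inputs the result is roughly 0.48 kg/s (about 28.6 L/min), a far smaller volume than the air flow needed for the same 10 kW, because water carries much more heat per unit volume.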

System Operation Principle

- Heat generated by electronic equipment heats the air

- Heated air moves to the cooling system

- Circulating coolant absorbs the heat

- Cooling control system regulates flow rate or temperature

- Cooled air recirculates back to the equipment
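One pass around this loop can be traced numerically. This is a minimal sketch assuming steady state and no losses, so the coolant absorbs exactly the heat the air picked up; it does not model the heat exchanger itself. The operating point (10 kW, 0.83 m³/s of air at 24 °C, 0.48 kg/s of water at 15 °C) and the function name are hypothetical.

```python
# Sketch of one pass around the cooling loop, assuming steady state and no losses:
# every watt the equipment adds to the air is absorbed by the coolant.
RHO_AIR = 1.2        # kg/m^3
CP_AIR = 1005.0      # J/(kg*K)
CP_WATER = 4200.0    # J/(kg*K), i.e. 4.2 kJ/(kg*K)


def loop_temperatures(load_kw: float, air_flow_m3s: float, supply_air_c: float,
                      water_flow_kgs: float, supply_water_c: float) -> tuple[float, float]:
    """Return (hot exhaust air temperature, coolant return temperature) in degrees C."""
    q_watts = load_kw * 1000.0
    # Equipment heats the air: dT_air = Q / (rho * V * Cp_air).
    hot_air_c = supply_air_c + q_watts / (RHO_AIR * air_flow_m3s * CP_AIR)
    # Coolant absorbs the same Q: dT_water = Q / (m * Cp_water).
    return_water_c = supply_water_c + q_watts / (water_flow_kgs * CP_WATER)
    return hot_air_c, return_water_c


if __name__ == "__main__":
    # Hypothetical operating point: 10 kW load, 24 C supply air, 15 C supply water.
    hot_air, return_water = loop_temperatures(10.0, 0.83, 24.0, 0.48, 15.0)
    print(f"exhaust air {hot_air:.1f} C, coolant return {return_water:.1f} C")
    # Heat only flows from the air into the coolant if the air is the warmer of the two.
    print("air warmer than coolant:", hot_air > return_water)
```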

Key Design Considerations

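The cooling control system manages trade-offs such as:

- High flow rate vs. high temperature differential: for a fixed heat load Q, m and ΔT are inversely proportional, so halving the flow doubles the temperature rise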

- Optimal balance between energy efficiency and cooling effectiveness

- Heat load matching between generation and removal capacity
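Both considerations can be checked with a few lines of arithmetic. This sketch assumes a water coolant (Cp ≈ 4.2 kJ/(kg·K)); the 100 kW load, the 120 kW installed capacity, and the function names are hypothetical.

```python
# Sketch of two design checks: the flow-rate vs. temperature-difference trade-off
# and matching cooling capacity to the heat load.
CP_WATER_KJ = 4.2  # kJ/(kg*K)


def flow_for_delta_t(load_kw: float, delta_t_k: float, cp_kj: float = CP_WATER_KJ) -> float:
    """Coolant mass flow (kg/s) needed to carry load_kw at a temperature rise of delta_t_k."""
    return load_kw / (cp_kj * delta_t_k)


def capacity_margin(heat_load_kw: float, cooling_capacity_kw: float) -> float:
    """Spare cooling capacity as a fraction of the load; negative means under-sized."""
    return (cooling_capacity_kw - heat_load_kw) / heat_load_kw


if __name__ == "__main__":
    load_kw = 100.0  # hypothetical heat load
    # For a fixed Q, flow and dT are inversely proportional: doubling dT halves the flow.
    for dt in (4.0, 6.0, 8.0, 10.0):
        print(f"dT = {dt:4.1f} K -> {flow_for_delta_t(load_kw, dt):.2f} kg/s")
    # Hypothetical installed cooling capacity of 120 kW.
    print(f"capacity margin: {capacity_margin(load_kw, 120.0):.0%}")
```

Lower flow generally means less pumping or fan energy, while a larger ΔT requires a colder supply or a warmer return; the control system's task is to meet the load at the least overall energy.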

Summary

This diagram demonstrates the fundamental thermodynamic principles of cooling system design: electrical power consumption translates directly into heat that the cooling system must remove. The same relationship, Q = m×Cp×ΔT, describes heat transport on the air side (with m = ρ×V) and heat removal on the coolant side, so engineers can calculate the required air and coolant flow rates for a chosen temperature differential, or the temperature differential for a given flow. These heat balance calculations are essential for thermal management in data centers and server rooms: they keep equipment within safe temperatures without circulating more air or coolant than necessary.