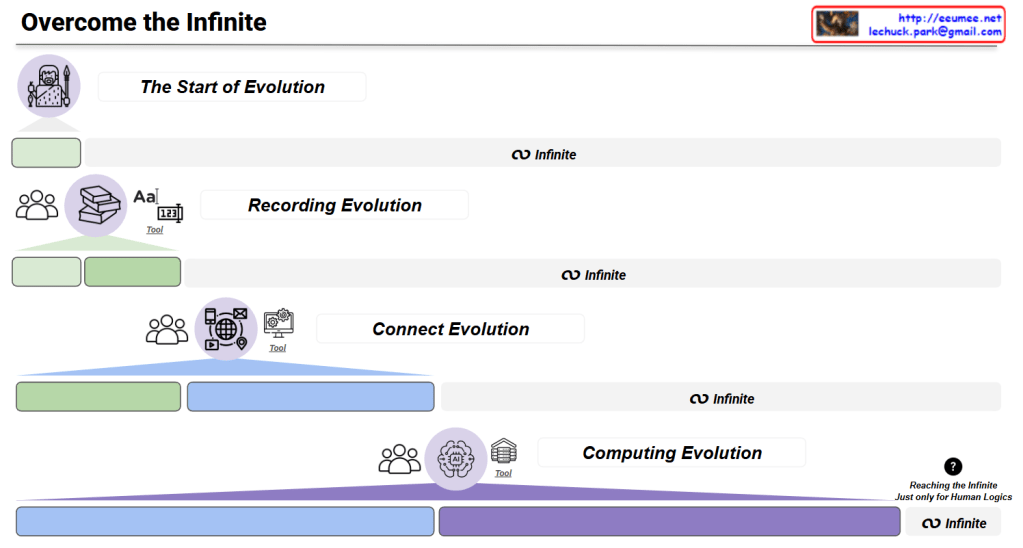

This updated image, titled “Data?”, offers a more philosophical perspective on data and AI.

Core Concept:

Human Perception is Limited

- The range of what humans can perceive and define is narrow compared with the effectively infinite complexity of the real world

- The gray area labeled “Human perception is limited” visualizes this boundary of recognition

Two Dimensions of AI Application:

- Deterministic Data
  - Data domains that humans have already defined and structured
  - Contains clear rules and patterns that AI can process in predictable ways
  - Represents traditional AI problem-solving approaches

- Non-deterministic Data
  - Data from domains that humans haven’t fully defined
  - Raw data from the real world with high uncertainty and complexity
  - Areas where AI must discover and use patterns without prior human definitions

Key Insight: This diagram illustrates that AI’s true potential extends beyond solving problems humans have already defined. AI can open new possibilities by reaching past human cognitive boundaries and discovering complex patterns in real-world data that we have not yet defined or understood. Even so, a crucial human element remains. As AI ventures into territory beyond human-defined problem spaces, humans still decide how to interpret, validate, and responsibly apply the insights it uncovers. The diagram suggests a collaborative relationship in which AI expands our perceptual reach while human judgment and decision-making continue to guide how those expanded possibilities are understood and used.

With Claude