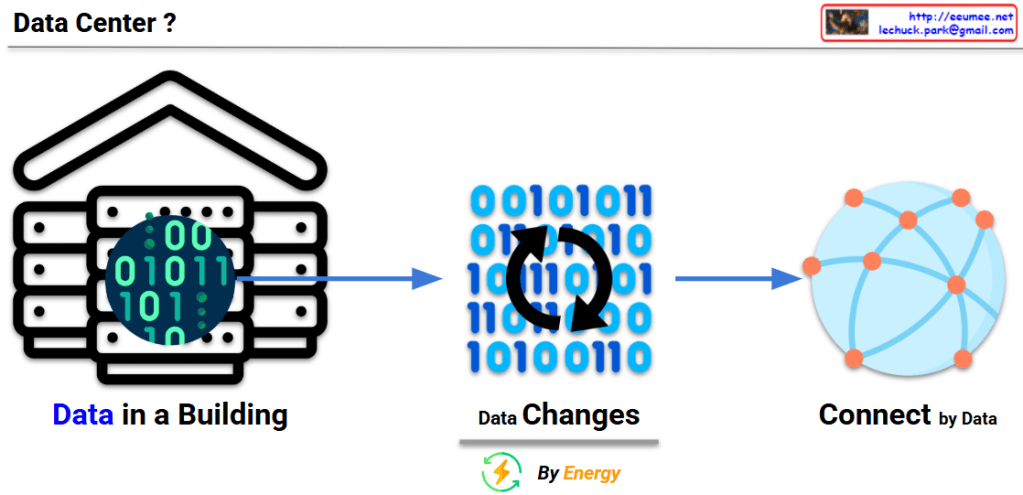

This image explains the fundamental concept and function of a data center:

- Left: “Data in a Building” – Illustrates a data center as a physical building that houses digital data (represented by binary code of 0s and 1s).

- Center: “Data Changes” – Captioned “By Energy,” this panel shows that processing and transforming data consumes energy.

- Right: “Connect by Data” – Shows how processed data leaves the data center and reaches the outside world, chiefly over the internet, forming networks.

In short, the diagram defines a data center as a physical building that stores data, consumes energy to process it, and connects it to the outside world through the internet.

With Claude