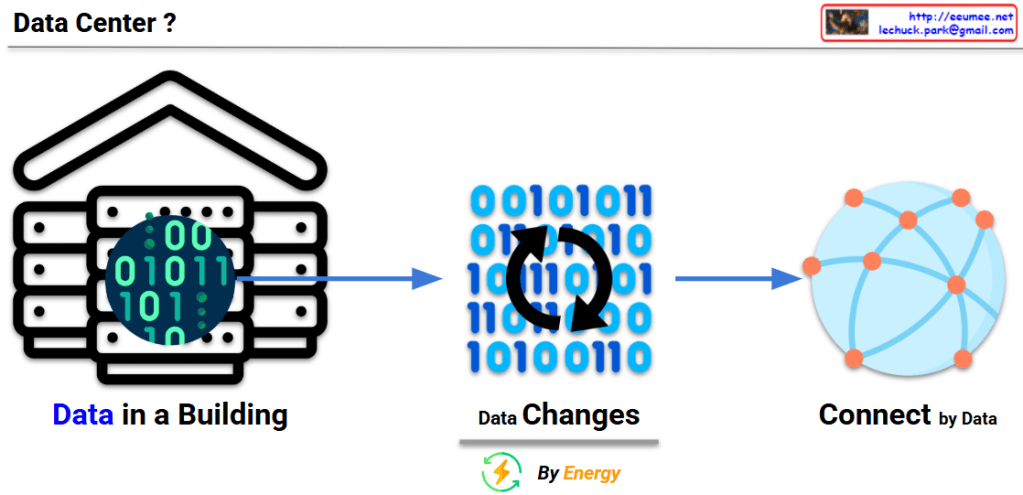

This image illustrates a comparison between two main data center architecture approaches: “Rack Cluster DC” and “Modular DC.”

The left side of the image depicts the basic infrastructure elements: power supply components (transformers, generators), cooling systems, and network equipment. The right side presents the two data center configurations side by side.

Rack Cluster Data Center (Left)

- Features: “Dense Computing, High Power and Cooling, Scaling Unit”

- Organized at the rack level within a cluster

- Shows structure connected by red solid and dotted lines

- Multiple server racks arranged in a regular pattern

Modular Data Center (Right)

- Features: “Modular Design, Flexible Scaling, Rapid Deployment”

- Organized at the module level, including power, cooling, and racks as integrated units

- Shows structure connected by blue solid and dotted lines

- Functional elements (power, cooling, servers) integrated into single modules

Both configurations show expansion units labeled “NEW” at the bottom, illustrating how each one scales: the rack cluster grows by adding racks, and the modular design grows by adding whole modules.

This diagram visually compares the structural differences, scalability, and component arrangements between the traditional rack cluster approach and the modular approach to data center design.
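The difference in scaling units can be made concrete with a minimal Python sketch. Everything in it is a hypothetical illustration, not something taken from the diagram: the class names (`RackClusterDC`, `ModularDC`, `Module`, `Rack`), the kW figures, and in particular the assumption that a rack cluster draws on one shared facility power and cooling budget while each module must cover its own racks.

```python
from dataclasses import dataclass, field


@dataclass
class Rack:
    power_kw: float  # assumed IT load of one rack (illustrative value)


@dataclass
class RackClusterDC:
    """Scaling unit is the rack; power and cooling are assumed to be shared."""
    facility_power_kw: float
    facility_cooling_kw: float
    racks: list[Rack] = field(default_factory=list)

    def add_rack(self, rack: Rack) -> bool:
        # A new rack fits only if the shared facility budget can absorb it.
        load = sum(r.power_kw for r in self.racks) + rack.power_kw
        if load > min(self.facility_power_kw, self.facility_cooling_kw):
            return False
        self.racks.append(rack)
        return True


@dataclass
class Module:
    """Scaling unit that bundles its own power, cooling, and racks."""
    power_kw: float
    cooling_kw: float
    racks: list[Rack]

    def is_self_sufficient(self) -> bool:
        # The module's own power and cooling must cover its own racks.
        load = sum(r.power_kw for r in self.racks)
        return load <= min(self.power_kw, self.cooling_kw)


@dataclass
class ModularDC:
    modules: list[Module] = field(default_factory=list)

    def add_module(self, module: Module) -> bool:
        # Expansion does not touch existing modules; it only checks the new one.
        if not module.is_self_sufficient():
            return False
        self.modules.append(module)
        return True
```

Under these assumptions, growth in the rack cluster is limited by the shared facility budget, whereas each new module is validated on its own and leaves the existing modules untouched.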

With Claude