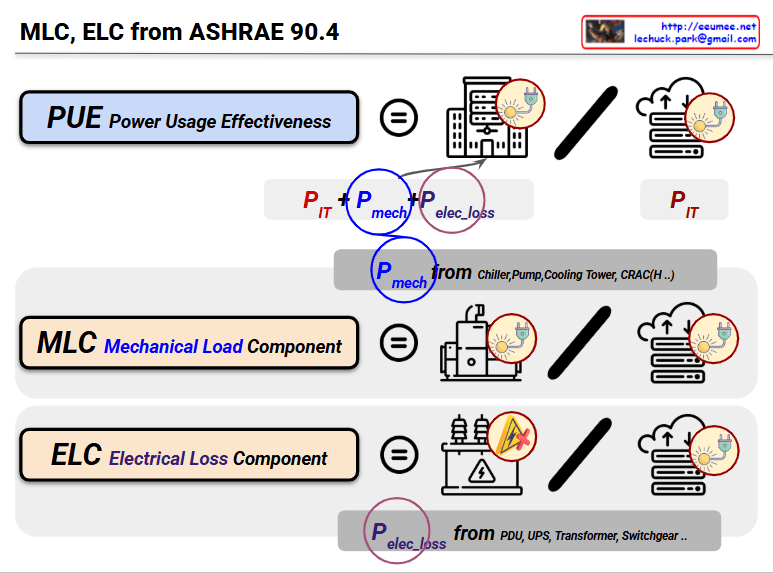

This image illustrates the concepts of PUE (Power Usage Effectiveness), MLC (Mechanical Load Component), and ELC (Electrical Loss Component), the latter two as defined in the ASHRAE 90.4 standard.

Key Component Analysis:

1. PUE (Power Usage Effectiveness)

- A metric for how efficiently a data center uses power; the closer the value is to 1.0, the less power goes to non-IT overhead

- Formula: PUE = (P_IT + P_mech + P_elec_loss) / P_IT

- Total power consumption divided by IT equipment power, where total power is taken here as IT power plus mechanical power plus electrical losses

2. MLC (Mechanical Load Component)

- Ratio of mechanical (cooling) system power to IT power

- Formula: MLC = P_mech / P_IT

- Represents how much power the cooling systems (chiller, pump, cooling tower, CRAC, etc.) consume relative to IT power

3. ELC (Electrical Loss Component)

- Ratio of electrical distribution losses to IT power

- Formula: ELC = P_elec_loss / P_IT

- Represents how much power is lost in electrical infrastructure (PDU, UPS, transformer, switchgear, etc.) relative to IT power
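Dividing the PUE formula through by P_IT shows how the three metrics fit together: PUE = 1 + MLC + ELC. The snippet below is a minimal sketch of that arithmetic. The class name, field names, and power figures are illustrative assumptions and are not taken from the image or from ASHRAE 90.4.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerBreakdown:
    """Average power draw in kW. Names and units are assumptions for this sketch."""
    p_it: float          # IT equipment power
    p_mech: float        # mechanical (cooling) system power
    p_elec_loss: float   # losses in the electrical distribution path

    def __post_init__(self) -> None:
        # Every ratio below divides by IT power, so it must be positive.
        if self.p_it <= 0:
            raise ValueError("p_it must be positive")

    def mlc(self) -> float:
        """MLC = P_mech / P_IT"""
        return self.p_mech / self.p_it

    def elc(self) -> float:
        """ELC = P_elec_loss / P_IT"""
        return self.p_elec_loss / self.p_it

    def pue(self) -> float:
        """PUE = (P_IT + P_mech + P_elec_loss) / P_IT"""
        return (self.p_it + self.p_mech + self.p_elec_loss) / self.p_it


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
site = PowerBreakdown(p_it=1000.0, p_mech=300.0, p_elec_loss=100.0)
print(f"MLC = {site.mlc():.2f}")  # 0.30
print(f"ELC = {site.elc():.2f}")  # 0.10
print(f"PUE = {site.pue():.2f}")  # 1.40
```

With these example numbers, every 1 kW delivered to IT equipment costs an extra 0.30 kW for cooling and 0.10 kW in electrical losses, which is what a PUE of 1.40 expresses.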

Diagram Structure:

Each component's row in the diagram is laid out as follows:

- Left: Component definition

- Center: Equipment icons (cooling systems, power systems, etc.)

- Right: IT equipment (server racks)

Necessity and Management Benefits:

Power costs make up a significant portion of data center operating expenses, and these metrics are essential for optimizing them. Separating mechanical load from electrical loss makes it possible to identify which cooling or power system segments are inefficient, reduce power costs there, and set investment priorities.
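As a rough illustration of how such a breakdown can point to investment priorities, the sketch below ranks subsystems by their power draw relative to IT power. The subsystem figures and the `overhead_ranking` helper are hypothetical, invented for this example rather than drawn from the image or from measured data.

```python
# Hypothetical average power per subsystem in kW; not measured data.
P_IT = 1000.0
mechanical = {"chiller": 180.0, "pump": 40.0, "cooling tower": 30.0, "CRAC": 50.0}
electrical = {"UPS": 60.0, "transformer": 20.0, "PDU": 15.0, "switchgear": 5.0}


def overhead_ranking(p_it, *groups):
    """Return (name, ratio to IT power) pairs, largest overhead first."""
    merged = {name: kw for group in groups for name, kw in group.items()}
    ratios = {name: kw / p_it for name, kw in merged.items()}
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


ranking = overhead_ranking(P_IT, mechanical, electrical)
for name, ratio in ranking:
    print(f"{name:14s} {ratio:.3f}")

# The group totals reproduce the two top-level ratios.
mlc = sum(mechanical.values()) / P_IT
elc = sum(electrical.values()) / P_IT
```

In this made-up breakdown the chiller dominates the overhead, so it would be the first candidate for an efficiency review, followed by the UPS.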

Together, these metrics provide a systematic way, in line with the ASHRAE standard, to analyze data center power efficiency and to create economic and environmental value through continuous improvement.

With Claude