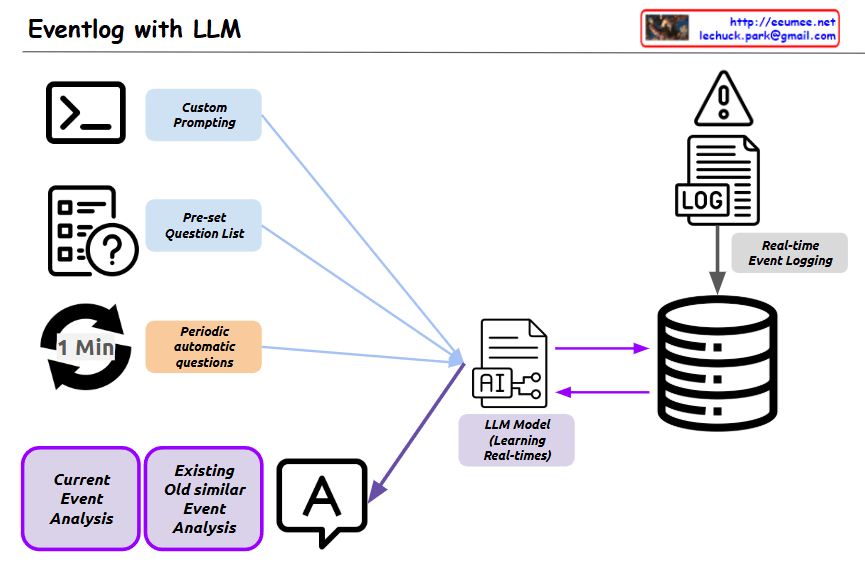

- Input methods (left side):
  - A command line/terminal icon with “Custom Prompting”
  - A questionnaire icon with “Pre-set Question List”
  - A timer icon (1 Min) with “Periodic automatic questions”
- Processing (center):
  - An “LLM Model” component labeled as “Learning Real-times”
  - Database/storage components for “Real-time Event Logging”
- Output/Analysis (bottom):
  - Two purple boxes for “Current Event Analysis” and “Existing Old similar Event Analysis”
  - A text/chat bubble showing output

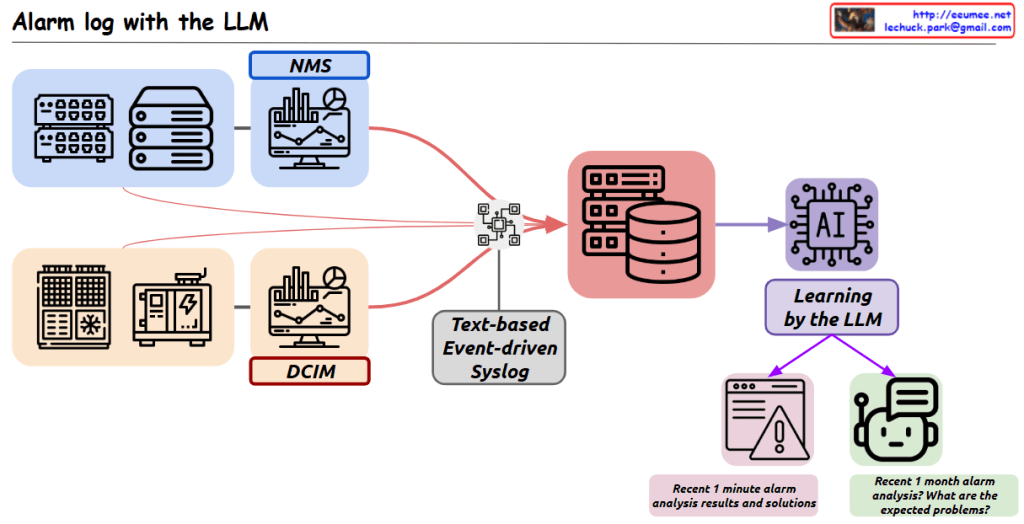

This system collects and updates unstructured, text-based event logs in real time, and the LLM learns from them as they arrive. Questions reach the LLM in three ways: custom prompts typed by a user, a preset question list, or questions issued automatically at a fixed interval (one minute in the diagram). For each question, the system analyzes the current event and compares it with similar past events, then returns the combined analysis as text.
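The flow described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the system's actual implementation: the names `Event`, `EventLog`, `PRESET_QUESTIONS`, `pick_questions`, and `build_prompt` are hypothetical, the sample log lines are made up, and the standard library's `difflib.SequenceMatcher` stands in for whatever similarity search the real system uses. The call to the LLM itself is left out, since the diagram does not specify a model or API.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Event:
    timestamp: str
    text: str  # unstructured log text


class EventLog:
    """In-memory stand-in for the "Real-time Event Logging" store (assumption)."""

    def __init__(self):
        self.events = []

    def append(self, event):
        self.events.append(event)

    def similar_past_events(self, current, top_k=2):
        """Rank stored events by rough textual similarity to `current`."""
        scored = [
            (SequenceMatcher(None, current.text, past.text).ratio(), past)
            for past in self.events
            if past is not current
        ]
        # Sort on the score only, so ties never try to compare Event objects.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [past for _, past in scored[:top_k]]


# Hypothetical preset list; a periodic job could also reuse it every minute.
PRESET_QUESTIONS = [
    "What is the most likely cause of this event?",
    "What action should the operator take first?",
]


def pick_questions(custom=None):
    """A custom prompt takes priority; otherwise fall back to the preset list."""
    return [custom] if custom else list(PRESET_QUESTIONS)


def build_prompt(question, current, similar):
    """Combine the question, the current event, and similar past events."""
    lines = [
        f"Question: {question}",
        f"Current event: [{current.timestamp}] {current.text}",
        "Similar past events:",
    ]
    lines += [f"- [{e.timestamp}] {e.text}" for e in similar]
    return "\n".join(lines)


# Made-up sample data, for illustration only.
log = EventLog()
log.append(Event("2024-01-01T10:00", "disk usage at 91% on db-01"))
log.append(Event("2024-01-02T11:00", "user login failed for admin from 10.0.0.5"))
current = Event("2024-01-03T09:30", "disk usage at 97% on db-02")
log.append(current)

similar = log.similar_past_events(current, top_k=1)
for question in pick_questions():
    prompt = build_prompt(question, current, similar)
    print(prompt, end="\n\n")  # in a real system, this text would go to the LLM
```

Keeping prompt assembly separate from retrieval means the same `build_prompt` step can serve all three input methods; only the source of the question changes.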

The primary purpose of this system is to process the large volumes of event logs produced by increasingly large and complex IT infrastructure and business systems. It helps operators identify important events, make quick judgments, and take appropriate action. By using the natural language processing capabilities of LLMs, the system turns raw log data into readable insights, which simplifies system monitoring and troubleshooting.

With Claude