With Claude

“A Framework for Value Analysis: From Single Value to Comprehensive Insights”

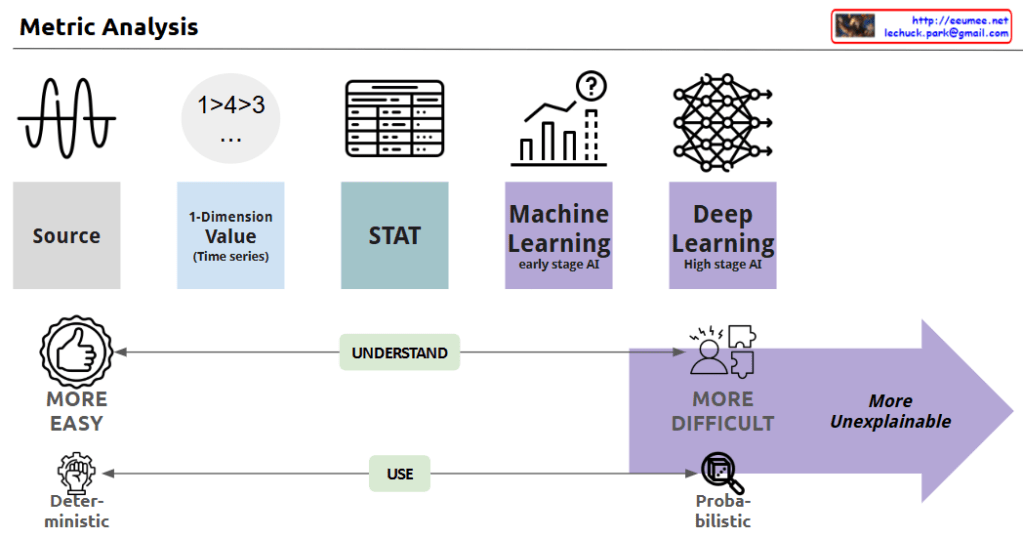

This diagram illustrates an analytical framework that follows a single value through two complementary analysis paths and then combines their results with machine learning:

- Time Series Analysis Path:
  - A single value evolves over time
  - Changes occur through two mechanisms:
    - Self-generated changes (By oneself)
    - Changes driven by external influence (By influence)
  - These changes are quantified through a mathematical function f(x)
  - Statistical measures (average, minimum, maximum, standard deviation) summarize the characteristics of these changes
- Correlation Analysis Path:
  - The same value is analyzed for relationships with other relevant data
  - Weighted correlations indicate the strength and significance of each relationship
  - These relationships are also expressed through a mathematical function f(x)
- Integration and Machine Learning Stage:
  - The results of both analyses (time series and correlation) feed into advanced analytics
  - Machine Learning and Deep Learning algorithms process this dual-perspective data
  - The final output is either a single generalized value or multiple meaningful values

Core Purpose: The framework aims to take a single value and:

- Track its temporal evolution within a network of influences

- Analyze its statistical behavior through mathematical functions

- Identify weighted correlational relationships with other variables

- Ultimately synthesize these insights through ML/DL algorithms to generate either a unified understanding or multiple meaningful outputs

By considering both its temporal dynamics and its relational context, this approach turns a single value into a richer description than either analysis provides on its own.

The framework's strength lies in combining temporal patterns, weighted relationships, and ML/DL-based synthesis into one cohesive pipeline, giving a more complete understanding of how a value evolves and relates to other variables within a complex system.