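DLSS (Deep Learning Super Sampling) is an NVIDIA graphics technology that renders frames at a lower resolution and then uses AI to upscale them. The process consists of several key steps: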

- Initial 3D Data
  - The process begins with 3D model and scene data as input.
- Rendering Process
  - The GPU renders the 3D data into a 2D image for the screen.
  - Rendering at a higher resolution requires more computing power, because more pixels must be shaded.
- Low Resolution Stage
  - The frame is first rendered at a lower resolution than the target display resolution.
  - This conserves computing resources.
- DLSS Processing
  - A trained AI model runs on specialized hardware (Tensor Cores on NVIDIA RTX GPUs).
  - Deep learning is used to reconstruct detail and improve image quality.
  - The cheaper low-resolution render is combined with AI processing.
- Final Output
  - The low-resolution image is upscaled to the target high resolution.
  - The result aims to look comparable to native high-resolution rendering.
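
The steps above can be sketched as a toy pipeline. This is a minimal illustration, not how DLSS is implemented: `render_scene` is a hypothetical stand-in for the GPU render step that simply produces a grayscale gradient, and `upscale_bilinear` substitutes plain bilinear interpolation for the trained neural network that DLSS actually runs. The names `render_scene`, `upscale_bilinear`, `dlss_like_pipeline`, and the `render_scale` parameter are illustrative assumptions.

```python
def render_scene(width: int, height: int) -> list[list[float]]:
    """Hypothetical stand-in for the GPU render step.

    Returns a width x height grayscale image (values in [0, 1]) holding a
    diagonal gradient instead of a real rasterized 3D scene.
    """
    denom = max(1, width + height - 2)  # avoid division by zero for 1x1 images
    return [[(x + y) / denom for x in range(width)] for y in range(height)]


def upscale_bilinear(image: list[list[float]], out_width: int, out_height: int) -> list[list[float]]:
    """Bilinear upscaling: a simple, non-AI placeholder for the DLSS network."""
    in_height, in_width = len(image), len(image[0])
    result = []
    for oy in range(out_height):
        # Map the output row to a fractional input row, aligning the corner pixels.
        fy = oy * (in_height - 1) / (out_height - 1) if out_height > 1 else 0.0
        y0 = int(fy)
        y1 = min(y0 + 1, in_height - 1)
        ty = fy - y0
        row = []
        for ox in range(out_width):
            fx = ox * (in_width - 1) / (out_width - 1) if out_width > 1 else 0.0
            x0 = int(fx)
            x1 = min(x0 + 1, in_width - 1)
            tx = fx - x0
            # Blend horizontally on the two neighbouring rows, then vertically.
            top = image[y0][x0] * (1 - tx) + image[y0][x1] * tx
            bottom = image[y1][x0] * (1 - tx) + image[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        result.append(row)
    return result


def dlss_like_pipeline(out_width: int, out_height: int, render_scale: float):
    """Render at a reduced resolution, then upscale to the target output size."""
    in_width = max(1, round(out_width * render_scale))
    in_height = max(1, round(out_height * render_scale))
    low_res = render_scene(in_width, in_height)  # cheaper: fewer pixels rendered
    high_res = upscale_bilinear(low_res, out_width, out_height)
    return low_res, high_res


low, high = dlss_like_pipeline(8, 6, render_scale=0.5)
print(len(low[0]), len(low), len(high[0]), len(high))  # 4 3 8 6
```

Bilinear interpolation can only blend neighbouring low-resolution pixels, so it cannot recover detail that was never rendered; a learned model is used in its place to infer that missing detail.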

The key advantage of DLSS is its ability to produce high-quality graphics while using less computing power. This is particularly valuable in applications that require real-time rendering, such as gaming, where it can maintain visual quality while raising frame rates.
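
To make the compute saving concrete, the arithmetic below estimates how much of the output resolution is actually rendered. It is a rough sketch under two stated assumptions: rendering cost is taken to scale with pixel count, and the internal resolution is the output resolution multiplied by a single per-axis `render_scale` (a hypothetical parameter here, with 0.5 chosen only for illustration). Real savings also depend on resolution-independent work and on the cost of the upscaling pass itself.

```python
def render_cost_ratio(out_width: int, out_height: int, render_scale: float) -> float:
    """Fraction of output pixels that are actually rendered.

    Assumes (as a simplification) that rendering cost scales with pixel count
    and that render_scale shrinks width and height by the same factor.
    The cost of running the upscaler itself is ignored.
    """
    in_width = round(out_width * render_scale)
    in_height = round(out_height * render_scale)
    return (in_width * in_height) / (out_width * out_height)


# Example: a 3840x2160 (4K) output rendered internally at half width and height.
ratio = render_cost_ratio(3840, 2160, 0.5)
print(f"{3840 * 2160:,} output pixels, {ratio:.0%} of them rendered")
# prints "8,294,400 output pixels, 25% of them rendered"
```

Halving each axis leaves only a quarter of the pixels to render, which is why a modest per-axis reduction can free up a large share of the GPU's per-pixel work.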

This approach balances the trade-off between visual quality and computational cost, making high-quality graphics practical on a wider range of hardware.

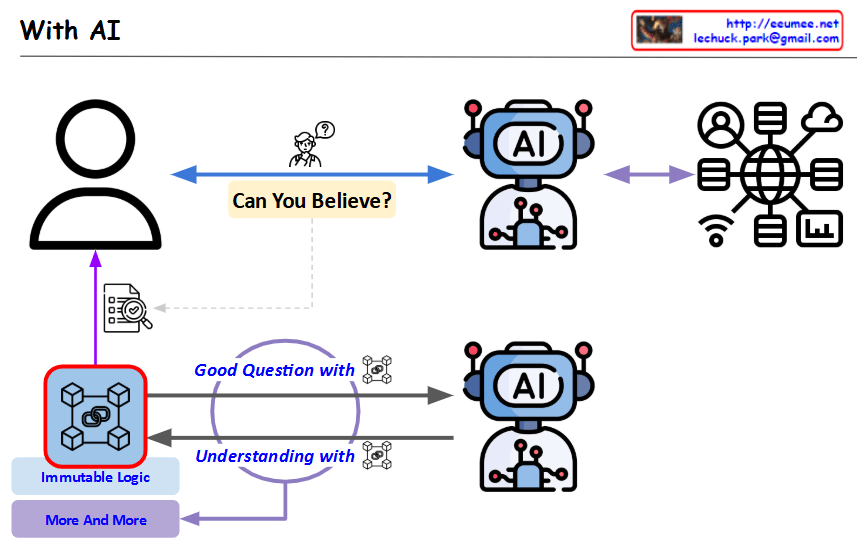

With Claude