Traditional CPU-GPU (PCIe) vs. CXL: Key Comparison

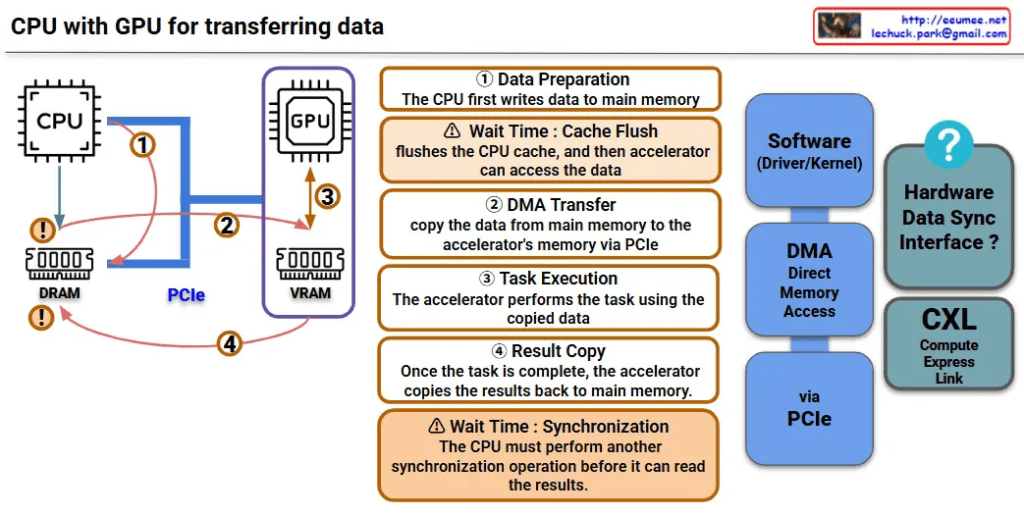

🔴 PCIe System Inefficiencies

Separated Memory Architecture

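- Isolated Memory: CPU memory (DDR4) and GPU memory (VRAM) are physically separate pools

- Mandatory Data Copying: CPU memory → PCIe → GPU memory → computation → result copied back over PCIe

- PCIe Bandwidth Bottleneck: A PCIe 4.0 x16 link tops out at about 64 GB/s bidirectional (about 32 GB/s per direction)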
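The 64 GB/s figure is the raw bidirectional rate of a PCIe 4.0 x16 link. A minimal sketch of that arithmetic, assuming 128b/130b line encoding and ignoring packet and flow-control overhead (so achievable throughput is lower); `link_bandwidth_gbps` is a hypothetical helper:

```python
# Per-lane signaling rates in GT/s (one bit per lane per transfer).
SIGNALING_RATE_GT_S = {
    "PCIe 3.0": 8.0,
    "PCIe 4.0": 16.0,
    "PCIe 5.0": 32.0,  # physical layer also used by CXL 1.x/2.0
}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding, PCIe 3.0 and later


def link_bandwidth_gbps(rate_gt_s: float, lanes: int = 16) -> tuple[float, float]:
    """Return (per-direction, bidirectional) raw bandwidth in GB/s.

    Ignores packet headers and flow control, so real throughput is lower.
    """
    per_direction = rate_gt_s * lanes * ENCODING_EFFICIENCY / 8  # bits -> bytes
    return per_direction, 2 * per_direction


for generation, rate in SIGNALING_RATE_GT_S.items():
    one_way, both_ways = link_bandwidth_gbps(rate)
    print(f"{generation} x16: {one_way:.1f} GB/s per direction, "
          f"{both_ways:.1f} GB/s bidirectional")
```

Rounded, this gives roughly 32/64 GB/s for PCIe 4.0 and 64/128 GB/s for PCIe 5.0, the same figures used in the comparison table.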

Major Overheads

- Memory Copy Latency: Tens of milliseconds to seconds for large transfers (for example, 1 GB at an effective 25 GB/s takes about 40 ms)

- Synchronization Wait: CPU cache flush + GPU synchronization

- Memory Duplication: Same data stored in both CPU and GPU memory
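These overheads add up serially when each offload copies data in, computes, and copies results back. A rough cost model, where the `OffloadJob` fields, the 25 GB/s effective link rate, and the fixed synchronization cost are all illustrative assumptions rather than measurements:

```python
from dataclasses import dataclass


@dataclass
class OffloadJob:
    """Hypothetical GPU offload. Sizes in GB, times in seconds."""
    input_gb: float
    output_gb: float
    compute_s: float
    sync_s: float = 0.0  # assumed cost of one cache-flush + synchronization step


def copy_based_time(job: OffloadJob, link_gb_s: float) -> float:
    """End-to-end time for copy-in -> compute -> copy-out with no overlap."""
    copy_in = job.input_gb / link_gb_s
    copy_out = job.output_gb / link_gb_s
    # One sync before the kernel reads its input, one before the CPU reads results.
    return copy_in + job.sync_s + job.compute_s + job.sync_s + copy_out


def overhead_fraction(job: OffloadJob, link_gb_s: float) -> float:
    """Share of end-to-end time spent moving data and synchronizing."""
    total = copy_based_time(job, link_gb_s)
    return (total - job.compute_s) / total


job = OffloadJob(input_gb=4.0, output_gb=1.0, compute_s=0.2, sync_s=0.001)
print(f"total: {copy_based_time(job, 25.0) * 1000:.0f} ms")  # 402 ms
print(f"overhead: {overhead_fraction(job, 25.0):.0%}")       # 50%
```

With these assumed numbers, about half of the end-to-end time goes to copying and synchronization, which is the part a shared-memory model aims to remove.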

🟢 CXL Core Improvements

1. Unified Memory Architecture

Before: CPU [Memory] ←PCIe→ [Memory] GPU (Separated)

After: CPU ←CXL→ GPU, both accessing one Shared Memory Pool (Unified)
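The difference between the two layouts can be illustrated in plain Python; this is an analogy, not a CXL API. In the separated model the consumer receives its own copy, while in the unified model it receives a view of the same bytes. `memoryview` stands in for a shared mapping, and both handoff functions are hypothetical:

```python
def separated_handoff(host_buf: bytearray) -> bytearray:
    """PCIe-style model: the device works on its own copy of the data."""
    return bytearray(host_buf)  # explicit copy, like a host-to-device transfer


def unified_handoff(pool_buf: bytearray) -> memoryview:
    """Shared-pool model: the device gets a view of the same bytes, no copy."""
    return memoryview(pool_buf)


host_data = bytearray(8)  # eight zero bytes
device_copy = separated_handoff(host_data)
device_view = unified_handoff(host_data)

host_data[0] = 42  # the CPU updates the data after the handoff

print(device_copy[0])  # 0: the copy is stale
print(device_view[0])  # 42: both sides reference the same memory
```

The copy also doubles the memory footprint, which is the duplication overhead described for the PCIe design.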

2. Zero-Copy & Hardware Cache Coherency

- Eliminates Memory Copying: Data is accessed in place by sharing pointers instead of copying buffers

- Automatic Synchronization: The CXL protocol keeps CPU and device caches coherent in hardware

- Real-time Sharing: The GPU sees CPU-modified data on its next access, without an explicit transfer
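A toy write-invalidate (MSI-style) model of what hardware coherency does on the software's behalf. It is a simplified illustration, not the CXL.cache protocol, which defines more states and message types and is coordinated by the host; the `Fabric` and `Cache` classes are hypothetical:

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"  # this cache holds the only, dirty copy
    SHARED = "S"    # clean copy; other caches may also hold it
    INVALID = "I"   # no usable copy


class Fabric:
    """Hypothetical stand-in for the coherence fabric and backing memory."""

    def __init__(self) -> None:
        self.memory: dict[int, int] = {}
        self.caches: list["Cache"] = []

    def fetch(self, addr: int, requester: "Cache") -> int:
        # A read miss forces any dirty owner to write back and downgrade to SHARED.
        for cache in self.caches:
            if cache is not requester:
                state, value = cache.lines.get(addr, (State.INVALID, 0))
                if state is State.MODIFIED:
                    self.memory[addr] = value
                    cache.lines[addr] = (State.SHARED, value)
        return self.memory.get(addr, 0)

    def invalidate_others(self, addr: int, requester: "Cache") -> None:
        # A write invalidates every other copy (writing back a dirty one first).
        for cache in self.caches:
            if cache is not requester and addr in cache.lines:
                state, value = cache.lines[addr]
                if state is State.MODIFIED:
                    self.memory[addr] = value
                cache.lines[addr] = (State.INVALID, value)


class Cache:
    """One agent's private cache (CPU or GPU) in a toy MSI protocol."""

    def __init__(self, name: str, fabric: Fabric) -> None:
        self.name = name
        self.fabric = fabric
        self.lines: dict[int, tuple[State, int]] = {}
        fabric.caches.append(self)

    def read(self, addr: int) -> int:
        state, value = self.lines.get(addr, (State.INVALID, 0))
        if state is State.INVALID:
            value = self.fabric.fetch(addr, requester=self)
            self.lines[addr] = (State.SHARED, value)
        return value

    def write(self, addr: int, value: int) -> None:
        self.fabric.invalidate_others(addr, requester=self)
        self.lines[addr] = (State.MODIFIED, value)


fabric = Fabric()
cpu = Cache("CPU", fabric)
gpu = Cache("GPU", fabric)

gpu.read(0x100)         # GPU caches the line (value 0)
cpu.write(0x100, 7)     # CPU write invalidates the GPU's copy
print(gpu.read(0x100))  # 7: the GPU misses and gets the CPU's update
```

The CPU-side code never flushes a cache or signals the GPU; the `write` path alone keeps the two views consistent, which is the property the bullets above describe.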

3. Performance Improvements

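| Metric (x16 link) | PCIe 4.0 | CXL 2.0 | Improvement |
|---|---|---|---|
| Bandwidth (bidirectional) | 64 GB/s | 128 GB/s | 2x |
| Latency | 1-2 μs | 200-400 ns | ~2.5-10x |
| Memory Copy | Required | Eliminated | Complete removal |

CXL 2.0 runs over the PCIe 5.0 physical layer (32 GT/s), so the 2x bandwidth gain comes from the faster link generation rather than from the CXL protocol itself.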
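The improvement column follows from dividing the ranges. A small check using the table's own figures; `improvement_range` is a hypothetical helper that pairs the best and worst cases of each range:

```python
def improvement_range(old: tuple[float, float],
                      new: tuple[float, float]) -> tuple[float, float]:
    """Speedup range for a lower-is-better metric, given (min, max) for each side."""
    smallest = old[0] / new[1]  # fastest old case vs. slowest new case
    largest = old[1] / new[0]   # slowest old case vs. fastest new case
    return smallest, largest


pcie_latency_ns = (1000.0, 2000.0)  # 1-2 us, from the table
cxl_latency_ns = (200.0, 400.0)     # 200-400 ns, from the table

low, high = improvement_range(pcie_latency_ns, cxl_latency_ns)
print(f"Latency improvement: {low:.1f}x to {high:.1f}x")  # 2.5x to 10.0x

bandwidth_gain = 128 / 64
print(f"Bandwidth improvement: {bandwidth_gain:.0f}x")  # 2x
```

Depending on which ends of the two latency ranges are compared, the gain spans roughly 2.5x to 10x.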

🚀 Practical Benefits

AI/ML: Up to 90% shorter training-data loading time, and the ability to process larger models

HPC: Real-time exchange of large datasets and relief from per-node memory limits

Cloud: Higher server resource utilization through memory pooling
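Pooling helps because statically provisioned servers must each be sized for their largest workload, leaving unused (stranded) DRAM on lightly loaded machines. A snapshot model with hypothetical demand figures and an assumed 10% pool headroom; it ignores how demand shifts over time and treats all memory as pooled, which real deployments typically do not:

```python
def stranded_gb(demands_gb: list[float], per_server_gb: float) -> float:
    """DRAM installed but unused when every server has a fixed local allocation."""
    if any(d > per_server_gb for d in demands_gb):
        raise ValueError("a workload does not fit in one server's local DRAM")
    return sum(per_server_gb - d for d in demands_gb)


def pool_size_gb(demands_gb: list[float], headroom: float = 0.10) -> float:
    """Capacity a shared memory pool needs for the same demand plus headroom."""
    return sum(demands_gb) * (1 + headroom)


demands = [120.0, 480.0, 200.0, 150.0]  # hypothetical per-server needs, GB
static_total = 512.0 * len(demands)     # every server sized for the 480 GB case

print(f"static: {static_total:.0f} GB installed, "
      f"{stranded_gb(demands, 512.0):.0f} GB stranded")  # 2048 GB, 1098 GB
print(f"pooled: {pool_size_gb(demands):.0f} GB")         # 1045 GB
```

The saving comes entirely from aggregate demand being smaller than the sum of each server's worst case; if every server ran near its peak at once, pooling would save little.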

💡 CXL Core Innovations

- Zero-Copy Sharing – Eliminates physical data movement

- HW-based Coherency – Removes software-managed cache flushes and copy synchronization

- Memory Virtualization – Memory pools that scale beyond a single server's installed DRAM

- Heterogeneous Optimization – Seamless integration of CPU, GPU, FPGA, etc.

Of these, zero-copy sharing and hardware-based cache coherency matter most: together they remove the explicit data copies and software-managed synchronization that cause the traditional PCIe bottleneck.

With Claude