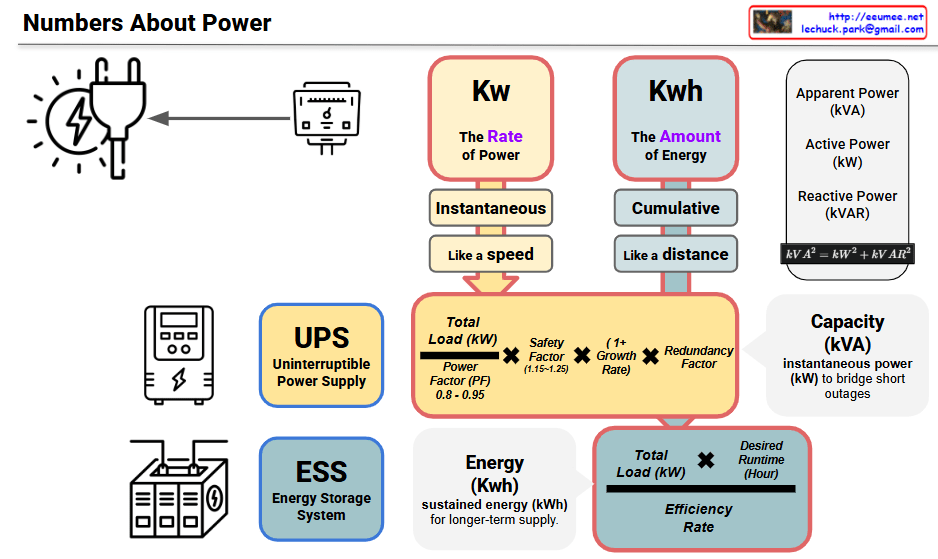

kW (Instantaneous Power) ↔ UPS (Uninterruptible Power Supply)

UPS Core Objective: Instantaneous Power Supply Capability

- kW measures power: the rate at which energy is needed “right now at this moment”

- A UPS's priority is supplying power immediately when an outage occurs

- Like the “speed” concept in the image, a UPS is characterized by how much power it can deliver at an instant

- Size the UPS for the real load in kW, accounting for a Power Factor (PF) of 0.8-0.95 (UPS units are typically rated in kVA, and kW = kVA × PF)

- Calculate the total load (kW) with a safety factor, expected load growth, and redundancy included
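
The UPS sizing steps above can be sketched in a few lines of Python. This is a minimal illustration, not a design standard: the function names, the 1.2 safety factor, the 5% annual growth over 5 years, the 0.9 power factor, and the N+1 redundancy scheme are all assumptions chosen for the example.

```python
import math


def ups_design_kw(load_kw, safety_factor=1.2, annual_growth=0.05, years=5):
    """Design load in kW after a safety margin and compound load growth.

    All default values are illustrative assumptions, not recommendations.
    """
    return load_kw * safety_factor * (1 + annual_growth) ** years


def ups_rating_kva(design_kw, power_factor=0.9):
    """Apparent-power rating (kVA) needed to deliver design_kw, using kW = kVA x PF."""
    if not 0 < power_factor <= 1:
        raise ValueError("power factor must be in (0, 1]")
    return design_kw / power_factor


def modules_needed(design_kw, module_kw, redundant=1):
    """Module count for an N+redundant layout (N modules carry the load)."""
    return math.ceil(design_kw / module_kw) + redundant


if __name__ == "__main__":
    design = ups_design_kw(100)          # hypothetical 100 kW load today
    print(f"design load: {design:.1f} kW")
    print(f"UPS rating:  {ups_rating_kva(design):.1f} kVA")
    print(f"modules:     {modules_needed(design, module_kw=50)}")
```

Keeping the kW design load separate from the kVA rating makes it clear that the power factor affects only the equipment rating, not the energy the load actually consumes.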

kWh (Energy Capacity) ↔ ESS (Energy Storage System)

ESS Core Objective: Sustained Energy Supply Capability

- kWh measures energy: it determines “how long” a given load (kW) can be supplied

- An ESS's priority is stable power supply over a long period

- Like the “distance” concept in the image, an ESS is characterized by how long it can keep supplying power

- Required ESS capacity (kWh) = Total Load (kW) × Desired Runtime (h)

- Size the installed storage capacity above the required figure to cover conversion losses (system efficiency)
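
As a worked example of the capacity formula above, the following Python sketch computes the required energy and then divides by an efficiency figure to get the capacity to install. The function names and the 0.9 default efficiency are assumptions for illustration; a real design would use the measured efficiency of the specific system.

```python
def ess_required_kwh(load_kw, runtime_h):
    """Energy the load consumes: total load (kW) x desired runtime (h)."""
    return load_kw * runtime_h


def ess_installed_kwh(load_kw, runtime_h, efficiency=0.9):
    """Storage capacity to install so the required energy survives conversion losses.

    efficiency is an assumed overall system efficiency in (0, 1].
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return ess_required_kwh(load_kw, runtime_h) / efficiency


if __name__ == "__main__":
    # Hypothetical case: 100 kW load, 4 hours of runtime
    print(f"required:  {ess_required_kwh(100, 4):.0f} kWh")
    print(f"installed: {ess_installed_kwh(100, 4):.0f} kWh")
```

Dividing by the efficiency, rather than multiplying, is the important detail: losses mean more energy must be stored than the load will finally receive.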

Complementary Operation Strategy

Phase 1: UPS Immediate Response

- Power outage → the UPS immediately supplies the required power (kW)

- Covers the short term: minutes to tens of minutes

Phase 2: ESS Long-term Support

- Extended outage → the ESS sustains the load from its stored energy (kWh)

- Covers the long term: hours to days
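
The two-phase handoff can be illustrated with a small Python sketch that splits an outage between the UPS and the ESS. It assumes a simplified model that is not stated in the text above: a constant load, the UPS discharging first and the ESS taking over afterwards, and no losses. The function names and the sample figures are hypothetical.

```python
def runtime_hours(energy_kwh, load_kw):
    """How long stored energy lasts at a constant load: kWh / kW = h."""
    if load_kw <= 0:
        raise ValueError("load must be positive")
    return energy_kwh / load_kw


def backup_timeline(load_kw, ups_kwh, ess_kwh, outage_h):
    """Split an outage into UPS hours, ESS hours, and any uncovered hours.

    Assumed model: the UPS carries the load first (Phase 1), then the ESS
    takes over (Phase 2); whatever remains is a shortfall.
    """
    ups_h = min(outage_h, runtime_hours(ups_kwh, load_kw))
    ess_h = min(outage_h - ups_h, runtime_hours(ess_kwh, load_kw))
    shortfall_h = outage_h - ups_h - ess_h
    return {"ups_h": ups_h, "ess_h": ess_h, "shortfall_h": shortfall_h}


if __name__ == "__main__":
    # Hypothetical system: 100 kW load, 25 kWh of UPS battery, 800 kWh of ESS
    for outage in (0.1, 6, 10):
        print(outage, backup_timeline(100, 25, 800, outage))
```

A non-zero `shortfall_h` indicates that the outage outlasts both stages, which is the signal to add ESS capacity or another source.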

Summary: This structure pairs kW (instantaneous power) with the strengths of a UPS, and kWh (sustained energy) with the capabilities of an ESS. The UPS handles the immediate power need while the ESS ensures long-duration supply; together they form a comprehensive backup power solution.

With Claude