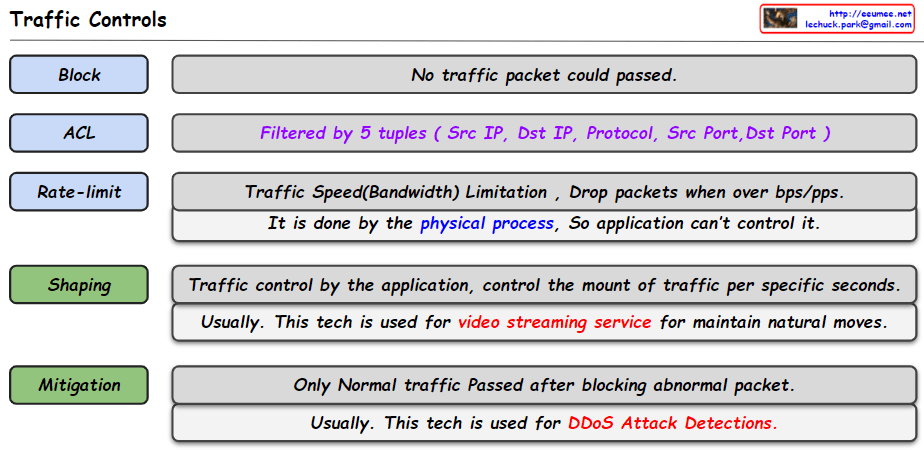

This image shows a network traffic control system architecture. Here’s a detailed breakdown:

- At the top, several key technologies are listed:
  - P4 (Programming Protocol-Independent Packet Processors)
  - eBPF (Extended Berkeley Packet Filter)
  - SDN (Software-Defined Networking)
  - DPI (Deep Packet Inspection)
  - NetFlow/sFlow/IPFIX
  - AI/ML-Based Traffic Analysis

- The system architecture is divided into main sections:
  - Traffic flow through IN PORT and OUT PORT
  - Routing based on Destination IP address
  - Inside TCP/IP and over TCP/IP sections
  - Security-Related Conditions
  - Analysis
  - AI/ML-Based Traffic Analysis
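The path from IN PORT to OUT PORT by destination IP address can be illustrated with a small lookup. This is a minimal sketch, not something taken from the diagram: it assumes longest-prefix matching (the usual convention for IP forwarding), and the table contents, port numbers, and the function name `select_out_port` are all hypothetical.

```python
import ipaddress

# Hypothetical forwarding table: destination prefix -> OUT PORT number.
FORWARDING_TABLE = {
    ipaddress.ip_network("10.0.0.0/8"): 1,
    ipaddress.ip_network("10.1.0.0/16"): 2,
    ipaddress.ip_network("0.0.0.0/0"): 3,  # default route
}


def select_out_port(dst_ip: str, table=FORWARDING_TABLE) -> int:
    """Return the OUT PORT of the most specific prefix that contains dst_ip."""
    addr = ipaddress.ip_address(dst_ip)
    matches = [net for net in table if addr in net]
    if not matches:
        raise LookupError(f"no route for {dst_ip}")
    # Longest-prefix match: the prefix with the most network bits wins.
    best = max(matches, key=lambda net: net.prefixlen)
    return table[best]


if __name__ == "__main__":
    for ip in ("10.1.2.3", "10.2.0.1", "8.8.8.8"):
        print(ip, "->", select_out_port(ip))
```

A linear scan keeps the sketch short; real data planes typically use a trie or TCAM for the same lookup.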

- Detailed features:
  - Inside TCP/IP: TCP/UDP Flags, IP TOS (Type of Service), VLAN Tags, MPLS Labels
  - Over TCP/IP: HTTP/HTTPS Headers, DNS Queries, TLS/SSL Information, API Endpoints
  - Security-Related: Malicious Traffic Patterns, Encryption Status
  - Analysis: Time-Based Conditions, Traffic Patterns, Network State Information
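One way to picture how these feature groups feed traffic control is as fields of a packet record that rules are matched against. The sketch below is an illustration under assumptions: the `PacketInfo` fields, the `Rule` type, the example rules, and the action names are all hypothetical and cover only a few of the features listed above.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PacketInfo:
    # Inside TCP/IP
    tcp_flags: frozenset = frozenset()
    ip_tos: int = 0
    vlan_id: Optional[int] = None
    mpls_label: Optional[int] = None
    # Over TCP/IP
    http_host: Optional[str] = None
    dns_query: Optional[str] = None
    tls_sni: Optional[str] = None
    # Security-related
    encrypted: bool = False
    # Analysis (time-based condition)
    hour_of_day: int = 12


@dataclass
class Rule:
    name: str
    condition: Callable[[PacketInfo], bool]
    action: str


# Hypothetical rules, evaluated in order; the first match decides the action.
RULES = [
    Rule("drop-syn-fin", lambda p: {"SYN", "FIN"} <= p.tcp_flags, "drop"),
    Rule("inspect-cleartext-at-night",
         lambda p: not p.encrypted and p.hour_of_day < 6, "inspect"),
    Rule("prioritize-vlan-100", lambda p: p.vlan_id == 100, "prioritize"),
]


def first_matching_action(pkt: PacketInfo, rules=RULES, default="forward") -> str:
    """Return the action of the first rule whose condition holds for pkt."""
    for rule in rules:
        if rule.condition(pkt):
            return rule.action
    return default
```

For example, `first_matching_action(PacketInfo(vlan_id=100, encrypted=True))` selects the `prioritize` action, while a packet that matches no rule falls through to `forward`.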

- The AI/ML-Based Traffic Analysis section shows:
  - AI/ML technologies learn traffic patterns
  - Detection of anomalies
  - Traffic control based on specific conditions
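The learn, detect, control loop can be sketched with a very simple statistical baseline. The diagram does not say which model is used, so this example substitutes a z-score check on packet rate as a stand-in for the AI/ML component; the class name `RateBaseline`, the threshold of 3 standard deviations, and the action names are assumptions.

```python
import statistics


class RateBaseline:
    """Learns a packets-per-second baseline and flags large deviations."""

    def __init__(self, threshold: float = 3.0):
        self.samples = []
        self.threshold = threshold  # allowed deviation, in standard deviations

    def learn(self, packets_per_second: float) -> None:
        # "Learn traffic patterns": record an observation of normal traffic.
        self.samples.append(packets_per_second)

    def is_anomaly(self, packets_per_second: float) -> bool:
        # "Detection of anomalies": compare against the learned baseline.
        if len(self.samples) < 2:
            return False  # not enough data to judge
        mean = statistics.fmean(self.samples)
        stdev = statistics.stdev(self.samples)
        if stdev == 0:
            return packets_per_second != mean
        return abs(packets_per_second - mean) / stdev > self.threshold


def control_action(baseline: RateBaseline, packets_per_second: float) -> str:
    # "Traffic control based on specific conditions": hypothetical actions.
    return "rate-limit" if baseline.is_anomaly(packets_per_second) else "forward"


if __name__ == "__main__":
    baseline = RateBaseline()
    for rate in (100, 102, 98, 101, 99):
        baseline.learn(rate)
    print(control_action(baseline, 101))
    print(control_action(baseline, 500))
```

A production system would likely use richer features and a trained model, but the control flow (observe, score, act) stays the same.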

Taken together, the diagram describes network monitoring and control that combines established technologies (P4, eBPF, SDN, DPI, and NetFlow/sFlow/IPFIX flow export) with AI/ML-based traffic analysis. It follows a packet from ingress at the IN PORT, through inspection inside and over TCP/IP and the security-related checks, to analysis and the resulting control decision at the OUT PORT.

with Claude