From Claude with some prompting

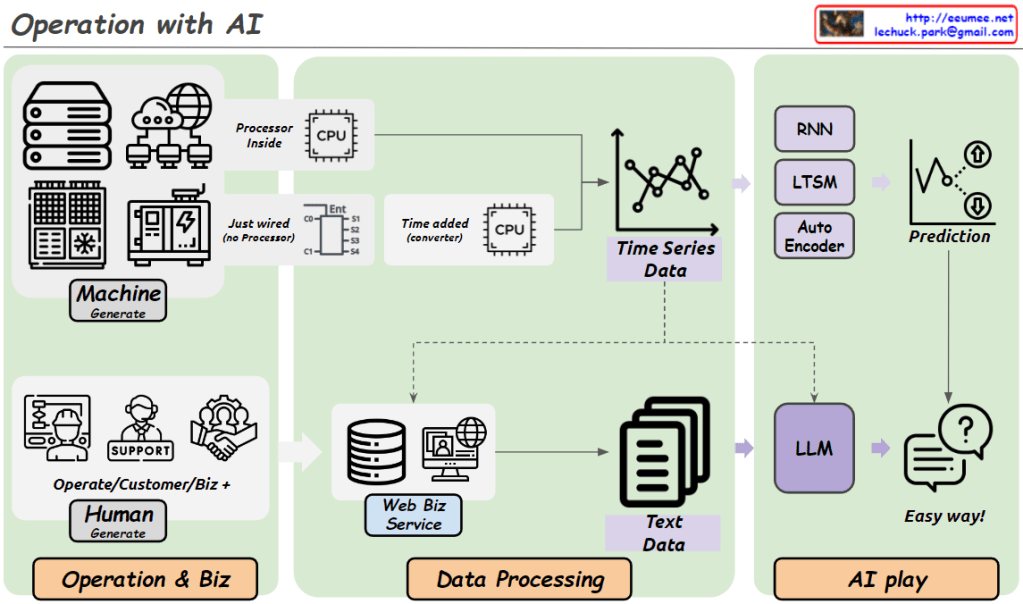

- Raw Time Series Data:
  - Data Source: Sensors or meters operating 24/7, 365 days a year
  - Components:
    - Point: The point being measured
    - Metric: The measured value (Value) for each point
    - Time: When the value was recorded
  - Format: (Point, Value, Time)
  - Additional Information:
    - Config Data: Device name, location, and other setup information
    - Tag Info: Additional metadata or classification information for the data
  - Characteristics:
    - Continuously updated as the status of each point changes
    - Changes automatically over time
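The raw record format above can be sketched as a small Python data model. This is a minimal illustration under stated assumptions: the class names `Sample` and `PointConfig`, the helpers `enrich` and `append_on_change`, and the change-of-value storage rule are all hypothetical, not taken from any specific product.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class Sample:
    """One raw reading in (Point, Value, Time) form."""
    point: str       # which point was measured (hypothetical ID scheme, e.g. "sensor-01")
    value: float     # the metric reading for that point
    time: datetime   # when the reading was recorded


@dataclass
class PointConfig:
    """Hypothetical config record: setup information kept apart from readings."""
    device_name: str
    location: str
    tags: dict[str, str] = field(default_factory=dict)  # classification metadata


def enrich(sample: Sample, configs: dict[str, PointConfig]) -> dict:
    """Attach config and tag metadata to a raw sample for downstream use."""
    cfg = configs[sample.point]
    return {
        "point": sample.point,
        "value": sample.value,
        "time": sample.time.isoformat(),
        "device": cfg.device_name,
        "location": cfg.location,
        **cfg.tags,
    }


def append_on_change(series: list[Sample], new: Sample) -> bool:
    """Append `new` only if its value differs from the point's last stored value.

    This change-of-value policy is an assumption used to illustrate
    "continuously updated as status changes"; returns True if the sample was stored.
    """
    last = next((s for s in reversed(series) if s.point == new.point), None)
    if last is not None and last.value == new.value:
        return False
    series.append(new)
    return True
```

Keeping config and tag data in a separate lookup, rather than repeating it in every reading, keeps the (Point, Value, Time) stream compact while still letting each reading be labeled when it is needed.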

- Processed Time Series Data (2nd logical data):
  - Processing Steps:
    - ETL (Extract, Transform, Load) operations
    - Analysis of correlations between data points (e.g. Point A and Point B)
    - Data processing through an f(x) function
  - Formulas are created from these correlations using domain experience and AI learning
  - Result:
    - New data points are generated
    - Each new point includes the original point, the related metric, and time information
  - Characteristics:
    - Provides more meaningful, correlated information than raw data
    - Reflects relationships and influences between data points
    - Supports more complex analysis and prediction
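The processing steps above can be sketched in Python under several assumptions that are not in the source: the ETL transform simply pairs Point A and Point B readings that share a timestamp, correlation is measured with the Pearson coefficient, and f(x) is an ordinary least-squares line standing in for a formula learned from experience or AI. The derived value (B minus what f predicts from A) and the names `align`, `pearson`, `fit_linear`, `derive_point`, and `min_r` are hypothetical.

```python
from datetime import datetime
from math import sqrt

# A raw record in (Point, Value, Time) form.
Record = tuple[str, float, datetime]


def align(records: list[Record], point_a: str, point_b: str) -> list[tuple[datetime, float, float]]:
    """Assumed ETL transform: pair A and B readings that share a timestamp."""
    a = {t: v for p, v, t in records if p == point_a}
    b = {t: v for p, v, t in records if p == point_b}
    return [(t, a[t], b[t]) for t in sorted(a.keys() & b.keys())]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def fit_linear(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept (stand-in for f(x))."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values are constant; slope is undefined")
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx


def derive_point(records: list[Record], point_a: str, point_b: str,
                 new_point: str, min_r: float = 0.8) -> list[Record]:
    """Generate a new point from A and B when they are strongly correlated.

    The derived value is B minus f(A): how far B deviates from what A predicts.
    """
    rows = align(records, point_a, point_b)
    if len(rows) < 2:
        return []
    xs = [x for _, x, _ in rows]
    ys = [y for _, _, y in rows]
    if abs(pearson(xs, ys)) < min_r:
        return []  # relationship too weak to justify a formula
    slope, intercept = fit_linear(xs, ys)

    def f(x: float) -> float:
        # The learned formula f(x), here a fitted straight line.
        return slope * x + intercept

    # Each new record keeps the (Point, Value, Time) shape of the raw data.
    return [(new_point, y - f(x), t) for t, x, y in rows]
```

A residual like this is one way a derived point can reflect the relationship between two points: it stays near zero while A and B move together and departs from zero when they diverge. A real system would likely swap the linear fit for whatever model domain experience or AI training produces.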


Through this process, Raw Time Series Data is transformed into Processed Time Series Data that captures the relationships between points, making it easier to understand data patterns and predict future trends.