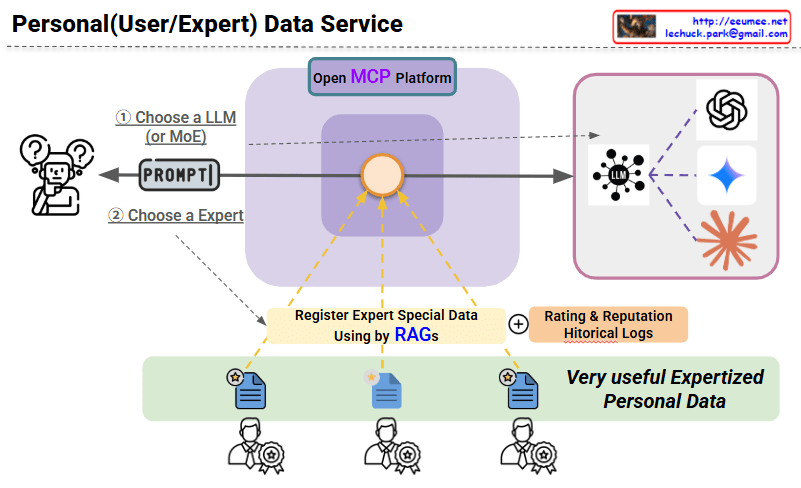

System Overview

The Personal Data Service is an open expert RAG (Retrieval-Augmented Generation) service platform built on MCP (Model Context Protocol). It creates a bidirectional ecosystem in which users and experts benefit from each other: users gain easier access to specialized knowledge, and expert-curated data improves the quality of the AI service.

Core Components

1. User Interface (Left Side)

- LLM Selection: Users choose their preferred language model or a Mixture of Experts (MoE) model

- Expert Selection: Select domain-specific experts for customized responses

- Prompt Input: Enter specific questions or requests
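The three inputs above could travel to the platform as one request record. This is a minimal sketch assuming a hypothetical `UserRequest` type with a model identifier, the prompt, and an optional tuple of user-chosen expert IDs; the field names are illustrative and not part of any published API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserRequest:
    """Hypothetical payload sent from the user interface to the MCP platform."""

    model_id: str  # chosen LLM or MoE model (illustrative identifier)
    prompt: str  # the user's question or request
    expert_ids: tuple[str, ...] = ()  # experts picked by the user; empty means "let the platform choose"

    def __post_init__(self) -> None:
        # Reject requests the platform could not route.
        if not self.model_id.strip():
            raise ValueError("model_id must not be empty")
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if len(set(self.expert_ids)) != len(self.expert_ids):
            raise ValueError("expert_ids must not contain duplicates")


req = UserRequest(
    model_id="moe-default",
    prompt="How is capital gains tax calculated?",
    expert_ids=("tax-advisor",),
)
print(req.expert_ids)
```

Making the record immutable (`frozen=True`) keeps the user's selections from being altered as the request passes through the platform's processing steps.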

2. Open MCP Platform (Center)

- Integrated Management Hub: Connects and coordinates all system components

- Request Processing: Matches user requests with appropriate expert RAG systems

- Service Orchestration: Manages and optimizes the entire workflow
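The request-processing step, matching a prompt to suitable expert RAG systems, might look like the sketch below. It assumes each expert advertises a set of domain keywords and ranks experts by keyword overlap with the prompt; a production router would more likely use embeddings. `ExpertProfile`, `tokenize`, and `route` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpertProfile:
    """Hypothetical registry entry for one expert RAG system."""

    expert_id: str
    keywords: frozenset[str]


def tokenize(text: str) -> set[str]:
    # Lowercase word tokens; a stand-in for a real embedding model.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def route(prompt: str, experts: list[ExpertProfile],
          selected: tuple[str, ...] = (), top_k: int = 1) -> list[str]:
    """Return expert IDs to query: an explicit user choice wins, else the best keyword matches."""
    if selected:
        known = {e.expert_id for e in experts}
        return [eid for eid in selected if eid in known]
    words = tokenize(prompt)
    scored = [(len(words & e.keywords), e.expert_id) for e in experts]
    # Highest overlap first; ties broken alphabetically so routing is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [eid for score, eid in scored[:top_k] if score > 0]


experts = [
    ExpertProfile("tax-advisor", frozenset({"tax", "deduction", "capital", "gains"})),
    ExpertProfile("cardiologist", frozenset({"heart", "blood", "pressure"})),
]
print(route("How is capital gains tax calculated?", experts))
```

Honoring the user's explicit expert selection before falling back to automatic matching mirrors the Expert Selection option in the user interface.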

3. LLM Service Layer (Right Side)

- Multi-LLM Support: Integration with various AI model services

- OAuth Authentication: Users sign in via OAuth to the paid or free LLM services they choose

- Vendor Neutrality: Open architecture independent of specific AI services
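Vendor neutrality is commonly achieved by coding the platform against a single interface and plugging in one adapter per vendor. A minimal sketch, assuming a hypothetical `LLMProvider` protocol and an `EchoProvider` stand-in in place of any real vendor SDK. The user's OAuth access token is stored per user and provider and passed through; obtaining it (the vendor's OAuth authorization flow) is outside this sketch.

```python
from typing import Protocol


class LLMProvider(Protocol):
    """Hypothetical interface that every vendor adapter implements."""

    name: str

    def complete(self, prompt: str, access_token: str) -> str: ...


class EchoProvider:
    """Stand-in adapter; a real one would call the vendor's API with the user's token."""

    name = "echo"

    def complete(self, prompt: str, access_token: str) -> str:
        if not access_token:
            raise PermissionError("user has not authorized this provider")
        return f"[echo] {prompt}"


class ProviderRegistry:
    def __init__(self) -> None:
        self._providers: dict[str, LLMProvider] = {}
        # (user_id, provider name) -> OAuth access token
        self._tokens: dict[tuple[str, str], str] = {}

    def register(self, provider: LLMProvider) -> None:
        self._providers[provider.name] = provider

    def store_token(self, user_id: str, provider: str, token: str) -> None:
        # In practice the token comes from the vendor's OAuth authorization flow.
        self._tokens[(user_id, provider)] = token

    def complete(self, user_id: str, provider: str, prompt: str) -> str:
        token = self._tokens.get((user_id, provider), "")
        return self._providers[provider].complete(prompt, token)


registry = ProviderRegistry()
registry.register(EchoProvider())
registry.store_token("alice", "echo", "token-123")
print(registry.complete("alice", "echo", "Hello"))
```

Adding a new vendor then means writing one more adapter class and registering it; the rest of the platform is unchanged.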

4. Expert RAG Ecosystem (Bottom)

- Specialized Data Registration: Experts register their own data, which is indexed into expert-specific knowledge bases for RAG retrieval

- Quality Management System: Ensuring reliability through evaluation and reputation management

- Historical Logs: Service usage records that support continuous quality improvement
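The registration, quality-management, and logging pieces could fit together as below. This sketch rests on loudly stated assumptions: retrieval is naive word-overlap ranking rather than vector search, reputation is a Bayesian average of 1 to 5 ratings (a common choice that the source does not specify) with a prior mean of 3.0 and prior weight of 5, and the usage log is an in-memory list. `ExpertKnowledgeBase` and its methods are hypothetical.

```python
import re
from dataclasses import dataclass, field


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class ExpertKnowledgeBase:
    """Hypothetical per-expert store: documents, ratings, and a usage log."""

    expert_id: str
    documents: list[str] = field(default_factory=list)
    ratings: list[int] = field(default_factory=list)
    history: list[dict] = field(default_factory=list)

    def register(self, text: str) -> None:
        # Experts add their own data directly.
        self.documents.append(text)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Rank documents by words shared with the query (stand-in for vector search).
        q = words(query)
        ranked = sorted(self.documents, key=lambda d: len(q & words(d)), reverse=True)
        hits = [d for d in ranked[:k] if q & words(d)]
        # Record every lookup so usage can later inform quality review.
        self.history.append({"query": query, "hits": len(hits)})
        return hits

    def rate(self, score: int) -> None:
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings.append(score)

    def reputation(self, prior_mean: float = 3.0, prior_weight: int = 5) -> float:
        # Bayesian average: with few ratings the score stays near the prior.
        total = prior_mean * prior_weight + sum(self.ratings)
        return total / (prior_weight + len(self.ratings))


kb = ExpertKnowledgeBase("tax-advisor")
kb.register("Capital gains tax applies to profit from selling assets.")
kb.register("Charitable donations may qualify as a tax deduction.")
print(kb.retrieve("capital gains"))
for s in (5, 5, 5, 4, 4):
    kb.rate(s)
print(kb.reputation())
```

The prior keeps a new expert with one perfect rating from outranking an established expert with many good ones. Here five ratings summing to 23 give (3.0 × 5 + 23) / (5 + 5) = 3.8.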

Key Features

- Bidirectional Ecosystem: Users obtain expert answers while experts monetize their knowledge

- Open Architecture: Scalable platform built on the MCP standard

- Quality Assurance: Expert and answer quality management through evaluation systems

- Flexible Integration: Compatibility with various LLM services

- Autonomous Operation: Direct data management and updates by experts
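Because the platform is built on MCP, an expert's RAG system could be exposed to clients as an MCP tool. MCP messages are JSON-RPC 2.0, and servers answer `tools/list` and `tools/call` requests. The standard-library sketch below handles only those two methods for one hypothetical tool, `ask_tax_expert`, whose handler is a stand-in for real retrieval. It omits the `initialize` handshake, transports, and capability negotiation that a real server, normally built with an official MCP SDK, must implement.

```python
import json

# Hypothetical tool backed by an expert's knowledge base.
TOOLS = {
    "ask_tax_expert": {
        "description": "Answer tax questions from the tax expert's registered documents.",
        "inputSchema": {
            "type": "object",
            "properties": {"question": {"type": "string"}},
            "required": ["question"],
        },
        # Stand-in for RAG retrieval plus generation.
        "handler": lambda args: f"Retrieved context for: {args.get('question', '')}",
    }
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request string with a JSON-RPC response string."""
    msg = json.loads(raw)
    method, params, msg_id = msg.get("method"), msg.get("params") or {}, msg.get("id")

    def reply(key: str, value: dict) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": msg_id, key: value})

    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return reply("result", {"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is the JSON-RPC 2.0 "Invalid params" code.
            return reply("error", {"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
        text = tool["handler"](params.get("arguments") or {})
        return reply("result", {"content": [{"type": "text", "text": text}], "isError": False})
    # -32601 is the JSON-RPC 2.0 "Method not found" code.
    return reply("error", {"code": -32601, "message": f"Method not found: {method}"})


request = {
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "ask_tax_expert", "arguments": {"question": "What is a deduction?"}},
}
print(handle(json.dumps(request)))
```

Keeping each expert behind a standard tool interface is what lets any MCP-capable client use the expert's data without vendor-specific integration.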

With Claude