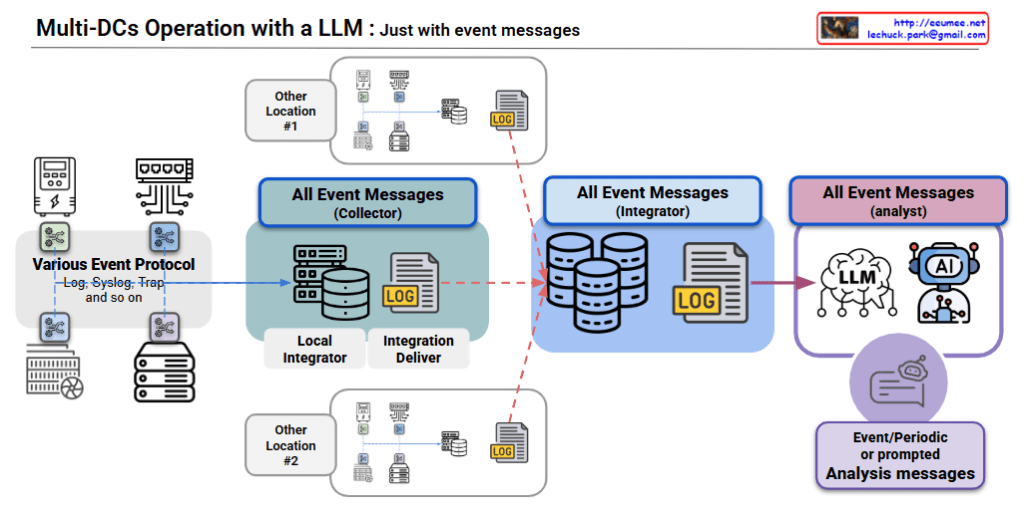

This diagram illustrates the system architecture for multi-data center operations with an LLM.

Overall Architecture Components

Left Side – Event Sources:

- Various systems that generate events over different protocols (Log, Syslog, Trap, etc.)

Middle – 3-Stage Processing Pipeline:

- Collector – Light Blue

  - Composed of Local Integrator and Integration Deliver

  - Collects and performs initial processing of all event messages

- Integrator – Dark Blue

  - Stores/manages event messages in databases and log files

  - Handles data integration and normalization

- Analyst – Purple

  - Utilizes LLM and AI for event analysis

  - Generates analysis messages, either periodically or immediately in response to events
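The three stages above can be sketched in a few lines of Python. This is only an illustration of the data flow, not the system shown in the diagram: the class names mirror the stage names, while the `Event` fields, the in-memory store, and the `fake_llm` placeholder are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Event:
    """A normalized event; the field names are illustrative assumptions."""
    source: str      # originating system, e.g. a hostname
    protocol: str    # "log", "syslog", "trap", ...
    message: str


class Collector:
    """Stage 1: accept raw events and do light initial processing."""

    def collect(self, source: str, protocol: str, raw: str) -> Event:
        return Event(source=source, protocol=protocol.lower(), message=raw.strip())


@dataclass
class Integrator:
    """Stage 2: store events (a list stands in for databases and log files)."""
    store: List[Event] = field(default_factory=list)

    def integrate(self, event: Event) -> None:
        self.store.append(event)


class Analyst:
    """Stage 3: hand stored events to an analysis function (an LLM call in practice)."""

    def __init__(self, analyze: Callable[[str], str]):
        self.analyze = analyze

    def report(self, events: List[Event]) -> str:
        lines = [f"[{e.protocol}] {e.source}: {e.message}" for e in events]
        return self.analyze("\n".join(lines))


def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call; it only counts the events it was given."""
    return f"{len(prompt.splitlines())} event(s) analyzed"


collector = Collector()
integrator = Integrator()
analyst = Analyst(fake_llm)

integrator.integrate(collector.collect("dc1-sw01", "Trap", " linkDown on port 12 \n"))
integrator.integrate(collector.collect("dc2-app03", "Syslog", "disk usage at 91%"))
summary = analyst.report(integrator.store)
print(summary)
```

Passing the analysis function into `Analyst` keeps the model call swappable, so the collection and storage stages can be exercised without any LLM attached.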

Core Efficiency of LLM Operations Integration (Bottom 4 Features)

- Already Installed: Leverages pre-analyzed logical results from existing alert/event systems, enabling immediate deployment without additional infrastructure

- Highly Reliable: Alert messages are highly deterministic data, which reduces the likelihood of LLM errors and keeps analysis results stable

- Easy Integration: Uses pre-structured alert messages, allowing simple integration with various systems without complex data preprocessing

- Nice LLM: Operates reliably based on verified alert data and provides an optimal strategy for rapidly applying advanced LLM technology
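To make the "Easy Integration" point concrete, here is a rough sketch of how pre-structured alerts might be passed to an LLM with almost no preprocessing. The alert schema (`severity`, `host`, `message`), the `build_prompt` helper, and the prompt wording are hypothetical and chosen only for illustration.

```python
import json
from typing import Dict, List

REQUIRED_FIELDS = ("severity", "host", "message")  # assumed alert schema


def build_prompt(alerts: List[Dict[str, str]]) -> str:
    """Turn already-structured alerts into a prompt with no extra parsing step."""
    for alert in alerts:
        missing = [f for f in REQUIRED_FIELDS if f not in alert]
        if missing:
            raise ValueError(f"alert is missing fields: {missing}")
    # sort_keys keeps the serialized form stable for identical input
    body = "\n".join(json.dumps(a, sort_keys=True) for a in alerts)
    return "Summarize the likely root cause of these alerts:\n" + body


alerts = [
    {"severity": "critical", "host": "dc1-db02", "message": "replication lag > 30s"},
    {"severity": "warning", "host": "dc1-db02", "message": "disk latency high"},
]
prompt = build_prompt(alerts)
print(prompt)
```

Because the alerts arrive as fixed fields, the only work before the model call is a schema check and a stable serialization, which is the property the list above relies on.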

Summary

This architecture enables rapid deployment of advanced LLM technology by leveraging existing alert infrastructure as high-quality, deterministic input data. The approach minimizes AI-related risks while maximizing operational intelligence, offering immediate deployment with proven reliability.

With Claude