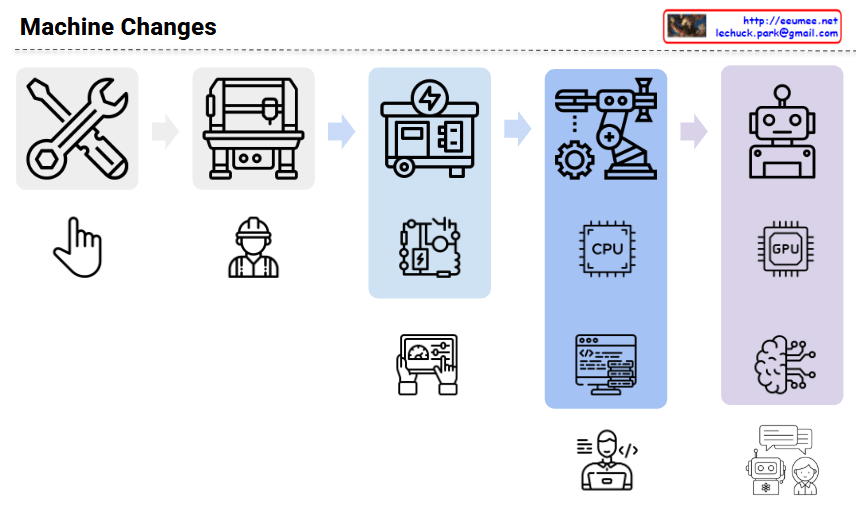

This image, titled “Machine Changes,” illustrates the evolution of technology and machinery across different eras.

The diagram progresses from left to right with arrows showing the developmental stages:

Stage 1 (Left): Manual Labor Era

- Tool icons (wrench, spanner)

- Hand icon

- Worker icon

These icons represent basic manual work using simple tools.

Stage 2: Mechanization Era

- Manufacturing equipment and machinery

- Power-driven machines

These depict the Industrial Revolution period, with its mechanized production.

Stage 3 (Blue section): Automation and Computer Era

- Power supply systems

- CPU/processor chips

- Computer systems

- Programming code

These represent automation through electronics and computer technology.

Stage 4 (Purple section): AI and Smart Technology Era

- Robots

- GPU processors

- Artificial brain/AI

- Interactive interfaces

These represent modern smart technology integrated with artificial intelligence and robotics.

Additional Insight: The transition from the CPU era to the GPU era marks a fundamental shift in what drives technological capability. In the CPU era, program logic was the critical factor – the sophistication of the algorithms and code determined system performance. In the GPU era, training data has become paramount – the quality, quantity, and diversity of the data used to train AI models now determine the intelligence and effectiveness of those systems. This is a shift from logic-driven computation to data-driven learning.
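To make the contrast concrete, here is a minimal Python sketch. It is not taken from the image; the temperature task, the function names, and the sample data are all hypothetical, chosen only for illustration. The first function encodes a rule the programmer wrote by hand, while the second derives its rule from labeled examples.

```python
# Logic-driven: the programmer decides the rule and hard-codes it.
def is_hot_rule(temp_c: float) -> bool:
    return temp_c >= 30.0  # threshold chosen by a person (hypothetical value)


# Data-driven: the threshold is derived from labeled examples.
def fit_threshold(samples: list[tuple[float, bool]]) -> float:
    """Return the candidate threshold that misclassifies the fewest samples."""
    candidates = sorted(temp for temp, _ in samples)

    def errors(threshold: float) -> int:
        # Count samples whose predicted label differs from the true label.
        return sum((temp >= threshold) != label for temp, label in samples)

    return min(candidates, key=errors)


# Hypothetical training data: (temperature in Celsius, "felt hot" label).
training_data = [
    (18.0, False), (22.0, False), (25.0, False),
    (27.0, True), (31.0, True), (35.0, True),
]

learned_threshold = fit_threshold(training_data)


def is_hot_learned(temp_c: float) -> bool:
    return temp_c >= learned_threshold


print(is_hot_rule(28.0), is_hot_learned(28.0))
```

In this toy setup the two approaches disagree at 28 °C: the hand-written rule is fixed by its author, whereas the learned rule changes whenever the training data changes. Real AI training is far more elaborate, but the dependency on data is the same in kind.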

Overall, this infographic captures humanity’s technological evolution: Manual Labor → Mechanization → Automation → AI/Robotics, highlighting how the foundation of technological advancement has evolved from human skill to mechanical power to programmed logic to data-driven intelligence.

With Claude