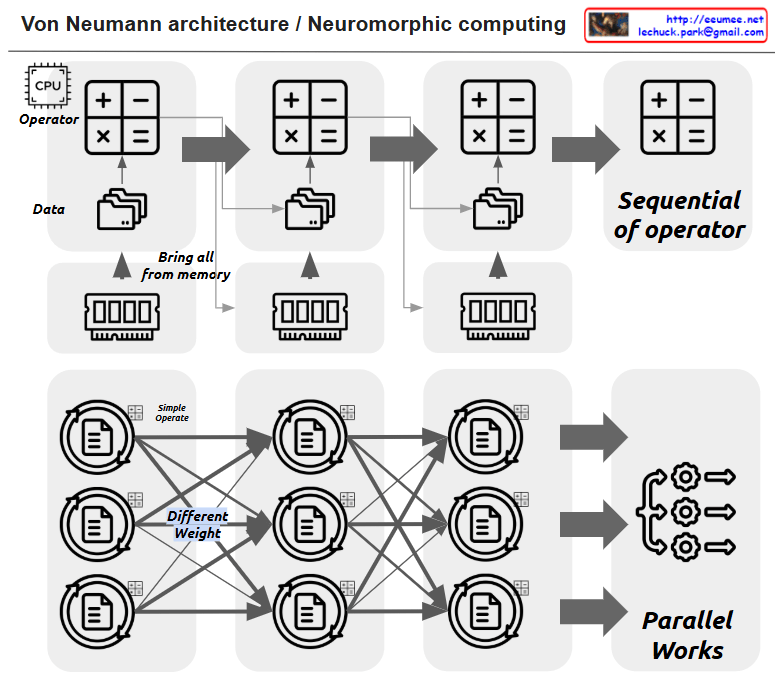

Digital Twin Concept

A Digital Twin is composed of three key elements:

- High Precision Data: Exact, structured numerical data

- Real 3D Model: Visual representation that is easy to comprehend

- History/Prediction Simulation: Temporal analysis capabilities
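
As a rough illustration, the three elements above can be sketched as a small data structure. Everything here is an assumption made for illustration: the `DigitalTwin` class, its field names, the `models/pump-01.glb` path, and the two-point linear extrapolation, which only stands in for a real simulation engine.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DigitalTwin:
    """Hypothetical container for the three elements of a Digital Twin."""

    asset_id: str
    # Real 3D Model: a reference to a model file (illustrative path only).
    model_path: str
    # High Precision Data: structured (timestamp, value) readings.
    history: list[tuple[float, float]] = field(default_factory=list)

    def record(self, timestamp: float, value: float) -> None:
        """Append one structured measurement to the history."""
        self.history.append((timestamp, value))

    def predict(self, timestamp: float) -> float:
        """History/Prediction: extrapolate linearly from the last two readings."""
        if len(self.history) < 2:
            raise ValueError("need at least two readings to extrapolate")
        (t0, v0), (t1, v1) = self.history[-2], self.history[-1]
        slope = (v1 - v0) / (t1 - t0)
        return v1 + slope * (timestamp - t1)


twin = DigitalTwin(asset_id="pump-01", model_path="models/pump-01.glb")
twin.record(0.0, 20.0)
twin.record(10.0, 22.0)
forecast = twin.predict(20.0)
print(forecast)
```

A production twin would replace `predict` with a physics-based or data-driven simulation. The point of the sketch is only that all three elements operate on exact, structured values.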

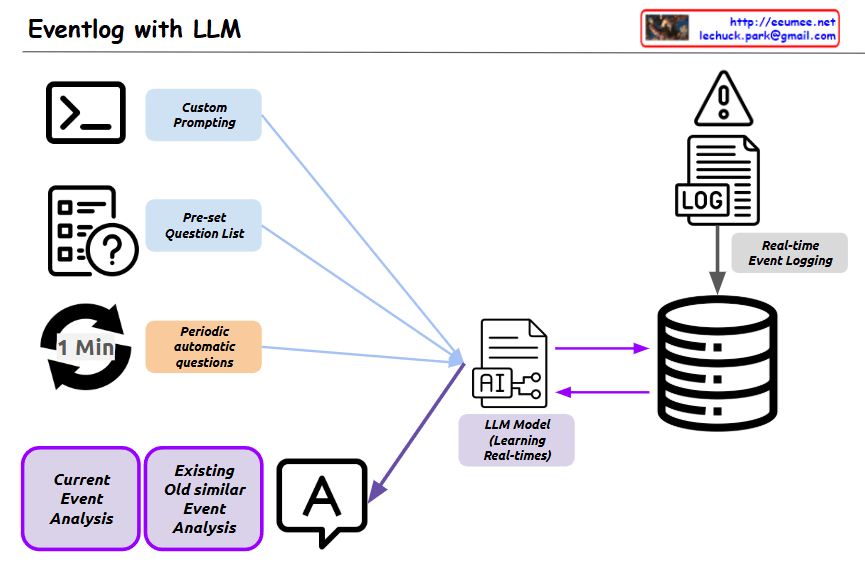

LLM Approach

Large Language Models expand on the Digital Twin concept with:

- Enormous Unstructured Data: The ability to incorporate and process large volumes of diverse, unstructured information

- Text-based Interface: Natural-language queries make analysis accessible without requiring visual interpretation of a 3D model

- Enhanced Simulation: Improved predictive capabilities leveraging more comprehensive datasets
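
One way to picture the text-based interface is a prompt that places structured readings and free-form notes side by side. The sketch below is a minimal illustration under stated assumptions: `build_prompt`, the sample readings, and the notes are all hypothetical, and no real LLM API is called. The resulting string would be handed to whichever model is in use.

```python
from __future__ import annotations


def build_prompt(readings: dict[str, float], notes: list[str], question: str) -> str:
    """Combine structured readings and unstructured notes into one text prompt."""
    lines = ["Structured readings:"]
    # Sort by name so the prompt is deterministic.
    for name, value in sorted(readings.items()):
        lines.append(f"- {name}: {value}")
    lines.append("Unstructured notes:")
    for note in notes:
        lines.append(f"- {note}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


prompt = build_prompt(
    readings={"temperature_c": 71.5, "vibration_mm_s": 4.2},
    notes=["Operator reported a rattling noise after the last restart."],
    question="Is this pump likely to need maintenance soon?",
)
print(prompt)
```

The design choice worth noting is that both kinds of data end up in the same textual form, so the free-form operator note needs no schema to be included.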

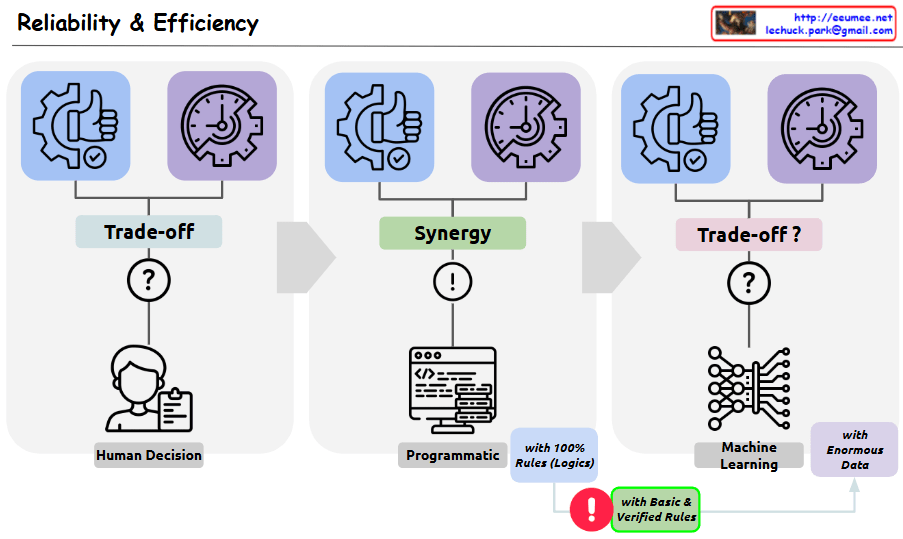

Key Advantages of LLM over Traditional Digital Twin

- Data Flexibility: LLMs can handle both structured and unstructured data, expanding beyond the limitations of traditional Digital Twins

- Accessibility: Text-based interfaces lower the barrier to understanding complex analyses

- Implementation Efficiency: Recent advances in LLM and GPU technologies can make these solutions more practical to implement than a full Digital Twin system

- Practical Application: LLMs offer a more approachable alternative while maintaining the core benefits of Digital Twin concepts

This comparison illustrates how LLMs can serve as an evolution of Digital Twin technology. They provide similar benefits through more accessible means, and their ability to process diverse data types may expand what such systems can do.

With Claude