With Claude's help

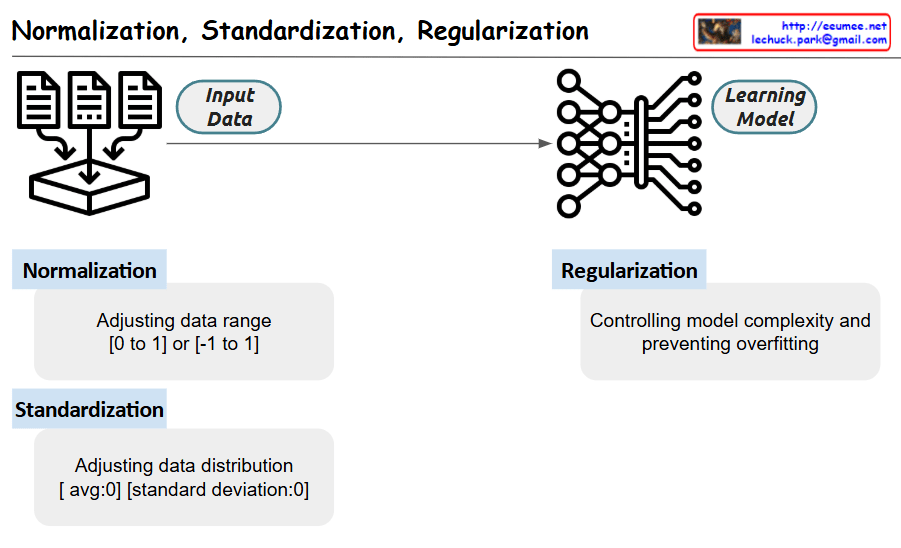

This image is a diagram explaining three important concepts in machine learning: Normalization, Standardization, and Regularization.

The diagram is structured as follows:

- On the left side, there are document icons representing Input Data, and on the right side, there is a neural network structure representing the Learning Model.

The diagram explains each concept:

- Normalization:

  - Adjusts the data range to [0, 1] or [-1, 1]

  - Scales every value to fit within that fixed range

- Standardization:

  - Recenters and rescales the data distribution

  - Transforms data to have a mean of 0 and a standard deviation of 1

- Regularization:

  - Controls model complexity and prevents overfitting

  - Keeps the model from fitting the training data too closely
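To make the two scaling steps concrete, here is a minimal sketch using only the Python standard library. The function names (`min_max_normalize`, `standardize`) and the sample heights are illustrative assumptions, not something taken from the diagram, and the standardization uses the population standard deviation, which is one common convention among several.

```python
from statistics import fmean, pstdev


def min_max_normalize(values, low=0.0, high=1.0):
    """Rescale values linearly so the smallest maps to low and the largest to high."""
    v_min, v_max = min(values), max(values)
    span = v_max - v_min
    if span == 0:
        # All values are identical; map them to the lower bound to avoid dividing by zero.
        return [low for _ in values]
    return [low + (v - v_min) * (high - low) / span for v in values]


def standardize(values):
    """Shift and scale values to mean 0 and population standard deviation 1."""
    mean = fmean(values)
    std = pstdev(values)
    if std == 0:
        # Constant input has no spread; every z-score is defined here as 0.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]  # made-up sample data

scaled = min_max_normalize(heights_cm)  # [0.0, 0.25, 0.5, 0.75, 1.0]
z_scores = standardize(heights_cm)      # mean 0, standard deviation 1
```

Passing `low=-1.0, high=1.0` to `min_max_normalize` gives the [-1, 1] variant. Note that normalization pins the output to a fixed range, while standardization leaves the range open and fixes the mean and spread instead.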

Normalization and standardization are preprocessing steps applied to the input data, while regularization acts on the model during training. Together they improve machine learning model performance and keep learning stable.
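Regularization is the training-time technique of the three. The diagram does not say which form it has in mind, so the sketch below assumes one common choice, an L2 (ridge) penalty, on a one-weight linear model with no intercept. The function name `ridge_weight` and the data points are hypothetical and chosen only for illustration.

```python
def ridge_weight(xs, ys, penalty):
    """Closed-form weight w for the model y = w * x.

    Minimizes sum((y - w * x) ** 2) + penalty * w ** 2.
    Setting the derivative to zero gives w = sum(x * y) / (sum(x * x) + penalty).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # made-up data, roughly y = 2x

# A larger penalty pulls the weight toward zero, trading fit for simplicity.
weights = {penalty: ridge_weight(xs, ys, penalty) for penalty in (0.0, 1.0, 10.0)}

for penalty, w in weights.items():
    print(f"penalty={penalty:>4}: w={w:.4f}")
```

With a penalty of 0 this reduces to ordinary least squares. As the penalty grows, the fitted weight shrinks, which is the sense in which regularization limits model complexity.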

These techniques are fundamental in machine learning as they help in:

- Enhancing overall model performance

- Making data consistent and comparable

- Improving model training efficiency

- Preventing model overfitting