From Claude + ChatGPT with some prompting

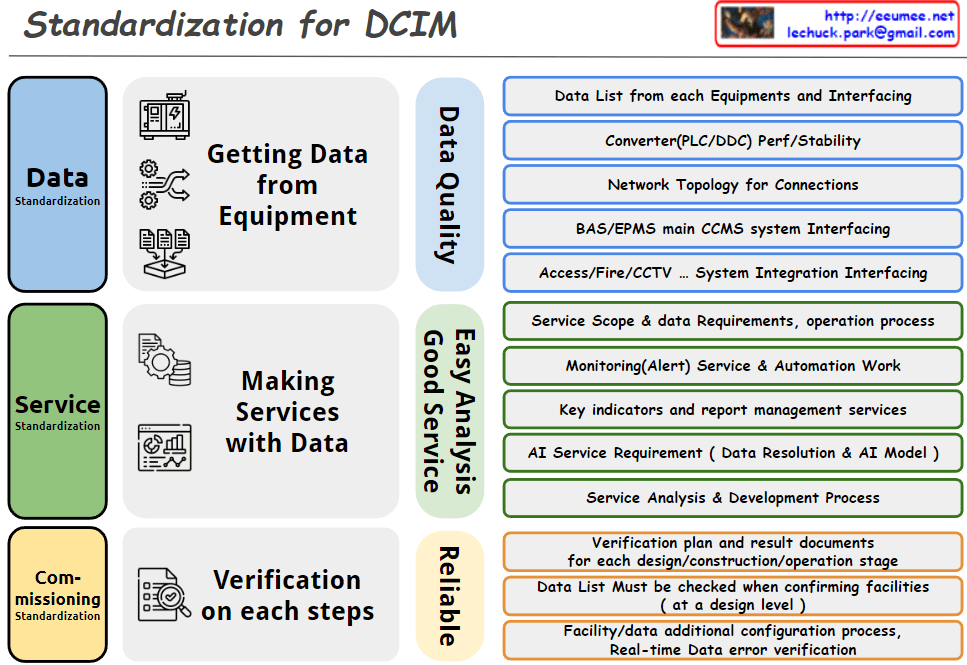

The image you provided shows a standardization process aimed at delivering high-quality data and consistent services. Here’s a breakdown of the structure based on the image:

Key Areas:

- [Data]
  - Facility: Represents physical systems or infrastructure.
  - Auto Control: Automatic controls used to manage the system.
- [Service]
  - Mgt. System: Management system that controls and monitors operations.
  - Process: Processes to maintain efficiency and quality.

Optimization Paths:

- Legacy Optimization:
  - a) Configure List-Up: Listing and organizing the configurations for the existing system.
  - b) Configure Optimization (Standardization): Optimizing and standardizing the existing system to improve performance.
  - Outcome: Enhances the existing system by improving its efficiency and consistency.
- New Setup:
  - a) Configure List-Up: Listing and organizing configurations for the new system.
  - b) Configure Optimization (Standardization): Optimizing and standardizing the configuration for the new system.
  - c) Configuration Requirement: Defining the specific requirements for setting up the new system.
  - d) Verification (on Installation): Verifying that the system operates correctly after installation.
  - Outcome: Builds a completely new system that provides high-quality data and consistent services.
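
The steps above can be sketched in code. This is only an illustration under stated assumptions: the image does not say how the standard is chosen or how verification is done, so the "most common value wins" rule, the function names, and the unit and setting names below are all hypothetical.

```python
from collections import Counter

def list_up(units):
    """Step a) Configure List-Up: collect every value seen for each setting."""
    inventory = {}
    for config in units.values():
        for key, value in config.items():
            inventory.setdefault(key, []).append(value)
    return inventory

def standardize(inventory):
    """Step b) Configure Optimization (Standardization).

    Assumed rule: the most common value of each setting becomes the standard.
    """
    return {
        key: Counter(values).most_common(1)[0][0]
        for key, values in inventory.items()
    }

def verify(installed, requirement):
    """Step d) Verification (on Installation): report deviating settings.

    Returns {setting: (installed_value, required_value)} for each mismatch.
    """
    return {
        key: (installed.get(key), required)
        for key, required in requirement.items()
        if installed.get(key) != required
    }

# Hypothetical existing configurations (names and values are made up).
units = {
    "unit-a": {"setpoint_c": 7, "poll_interval_s": 60},
    "unit-b": {"setpoint_c": 7, "poll_interval_s": 30},
    "unit-c": {"setpoint_c": 9, "poll_interval_s": 60},
}

# Legacy Optimization path: steps a) and b).
standard = standardize(list_up(units))

# New Setup path: the standard serves as step c) Configuration Requirement,
# and a newly installed unit is checked against it in step d).
new_unit = {"setpoint_c": 7, "poll_interval_s": 30}
deviations = verify(new_unit, requirement=standard)
```

In this sketch the legacy path stops once the standard exists, while the new-setup path reuses that standard as its requirement and adds the verification step. A real process would likely choose the standard by engineering review rather than by frequency.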

Outcome:

Both paths aim to deliver high-quality data and consistent service through standardization, either by optimizing a legacy system or by building an entirely new setup.

This structured approach helps improve efficiency, consistency, and system performance.