With Claude

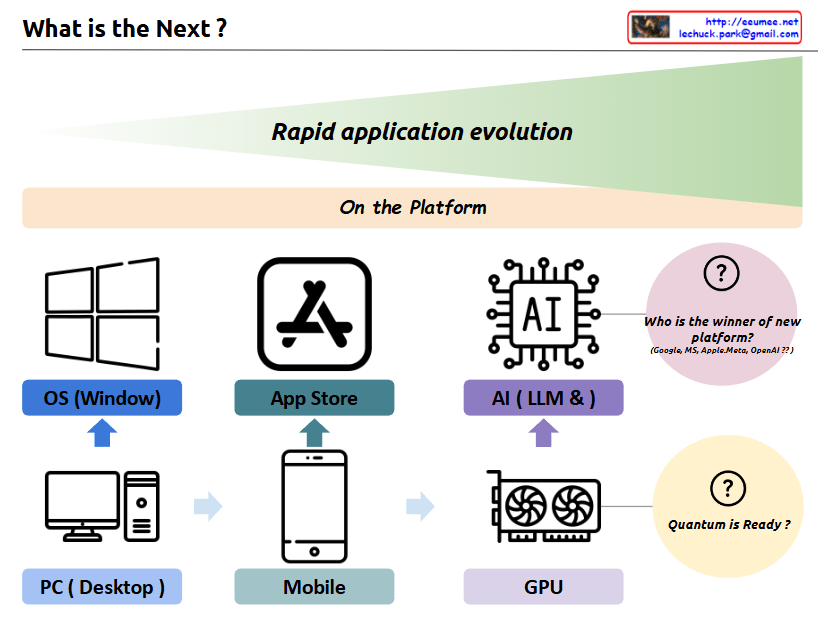

A comprehensive interpretation of the image and its concept of “Rapid application evolution”:

The diagram illustrates the parallel evolution of hardware infrastructure and software platforms, which together have driven rapid change in applications and user experiences:

- Hardware Infrastructure Evolution:
  - PC/Desktop → Mobile Devices → GPU
  - Represents the progression of core computing power platforms
  - Each transition brought fundamental changes in how users interact with technology

- Software Platform Evolution:
  - Windows OS → App Store → AI/LLM
  - Shows the evolution of application ecosystems
  - Each platform created new possibilities for user applications

The symbiotic relationship between these two axes:

- PC Era: Integration of PC hardware with Windows OS

- Mobile Era: Combination of mobile devices with app store ecosystems

- AI Era: Marriage of GPU infrastructure with LLM/AI platforms

Each transition has greatly expanded application capabilities and user experiences, with hardware and software platforms developing in parallel and reinforcing each other.

Future Outlook:

- “Who is the winner of new platform?”
  - Current competition between Google, MS, Apple/Meta, OpenAI
  - Platform leadership in the AI era remains undecided
  - Possibility for new players to emerge

- “Quantum is Ready?”
  - Suggests quantum computing as the next potential hardware revolution
  - Implies the possibility of new software platforms emerging to leverage quantum capabilities
  - Continues the pattern of hardware-software co-evolution

This cyclical pattern of hardware-software evolution suggests that we’ll continue to see new infrastructure innovations driving platform development, and vice versa. Each cycle has dramatically expanded the possibilities for applications and user experiences, and this trend is likely to continue with future technological breakthroughs.

The key insight is that major technological leaps happen when both hardware infrastructure and software platforms evolve together, creating new opportunities for application development and user experiences that weren’t previously possible.