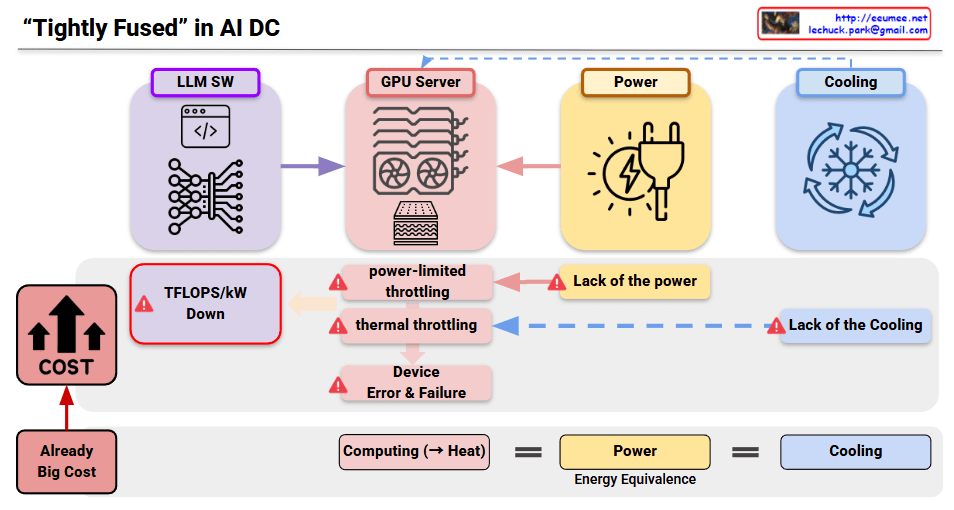

This diagram illustrates a “Tightly Fused” AI datacenter architecture showing the interdependencies between system components and their failure points.

System Components

- LLM SW: Large Language Model Software

- GPU Server: Computing infrastructure with cooling fans

- Power: Electrical power supply system

- Cooling: Thermal management system

Critical Issues

1. Power Constraints

- Lack of power leads to power-limited throttling in GPU servers

- Results in decreased TFLOPS/kW (compute throughput per kilowatt of power drawn)
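
To make the TFLOPS/kW drop concrete, here is a minimal sketch of a toy server model. It assumes throughput scales linearly with the GPU power budget while a fixed overhead (CPUs, fans, memory) is drawn regardless of the cap. The function name and every number are hypothetical, chosen only for illustration; real GPUs follow nonlinear power/performance curves.

```python
def server_tflops_per_kw(gpu_power_w, overhead_w=2000.0,
                         peak_gpu_power_w=8000.0, peak_tflops=8000.0):
    """Toy model: server-level TFLOPS/kW under a GPU power cap.

    Assumptions (illustrative, not measured):
    - throughput scales linearly with the GPU power budget
    - overhead_w (CPUs, fans, memory) is drawn regardless of the cap
    """
    # Clamp the GPU budget to the physically meaningful range.
    capped_w = max(0.0, min(gpu_power_w, peak_gpu_power_w))
    tflops = peak_tflops * capped_w / peak_gpu_power_w
    total_kw = (capped_w + overhead_w) / 1000.0
    return tflops / total_kw


full = server_tflops_per_kw(8000.0)    # unconstrained power budget
capped = server_tflops_per_kw(4000.0)  # only half the GPU power is available
print(f"full: {full:.0f} TFLOPS/kW, capped: {capped:.0f} TFLOPS/kW")
```

With these made-up numbers, efficiency falls from 800 to about 667 TFLOPS/kW, because the fixed overhead is spread over less useful work.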

2. Cooling Limitations

- Insufficient cooling causes thermal throttling

- Increases risk of device errors and failures
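
Thermal throttling can be pictured as a clock derating rule. The sketch below assumes a simple linear ramp between two temperature thresholds. The function name, thresholds, and clock values are hypothetical; actual GPUs apply vendor-specific firmware policies.

```python
def throttled_clock_mhz(temp_c, base_clock_mhz=1800.0, min_clock_mhz=600.0,
                        throttle_start_c=85.0, throttle_max_c=95.0):
    """Toy thermal-throttling rule (all thresholds are illustrative).

    Below throttle_start_c the device runs at its base clock; above
    throttle_max_c it is pinned to min_clock_mhz; in between, the clock
    is reduced linearly.
    """
    if temp_c <= throttle_start_c:
        return base_clock_mhz
    if temp_c >= throttle_max_c:
        return min_clock_mhz
    fraction = (temp_c - throttle_start_c) / (throttle_max_c - throttle_start_c)
    return base_clock_mhz - fraction * (base_clock_mhz - min_clock_mhz)


for temp in (70.0, 90.0, 100.0):
    print(temp, throttled_clock_mhz(temp))
```

Under this assumed rule, a device held at 90 °C by insufficient cooling would run at 1200 MHz instead of 1800 MHz.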

3. Cost Escalation

- Already high baseline costs

- System bottlenecks drive costs even higher

Core Principle

The bottom equation demonstrates the fundamental relationship: Computing (→ Heat) = Power = Cooling

This shows that nearly all the electrical power a computational workload draws is released as heat, so both power supply and cooling capacity must be sized to match the computing load to sustain full performance.
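
One way to read the equation is as a "weakest link" rule: the load a facility can sustain is bounded by the smallest of the three quantities. The following is a minimal sketch under the simplifying assumption that every kilowatt of IT power becomes one kilowatt of heat to remove, and it ignores the power the cooling plant itself consumes. The function name and figures are hypothetical.

```python
def sustainable_load_kw(compute_demand_kw, power_capacity_kw, cooling_capacity_kw):
    """Return (sustainable IT load in kW, name of the limiting resource).

    Assumes IT power in equals heat out, so all three quantities can be
    compared directly in kilowatts.
    """
    limits = {
        "computing": compute_demand_kw,
        "power": power_capacity_kw,
        "cooling": cooling_capacity_kw,
    }
    # The smallest capacity determines what the facility can sustain.
    bottleneck = min(limits, key=limits.get)
    return limits[bottleneck], bottleneck


load, limit = sustainable_load_kw(1000.0, 900.0, 750.0)
print(load, limit)  # 750.0 cooling
```

In this example the workload wants 1000 kW, but cooling caps the sustainable load at 750 kW, so the remaining demand shows up as throttling.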

Summary

This diagram highlights how AI datacenters depend on a close balance among computing, power, and cooling systems: a bottleneck in any one of them cascades into performance degradation and higher costs across the entire infrastructure.

With Claude