From Claude with some prompting

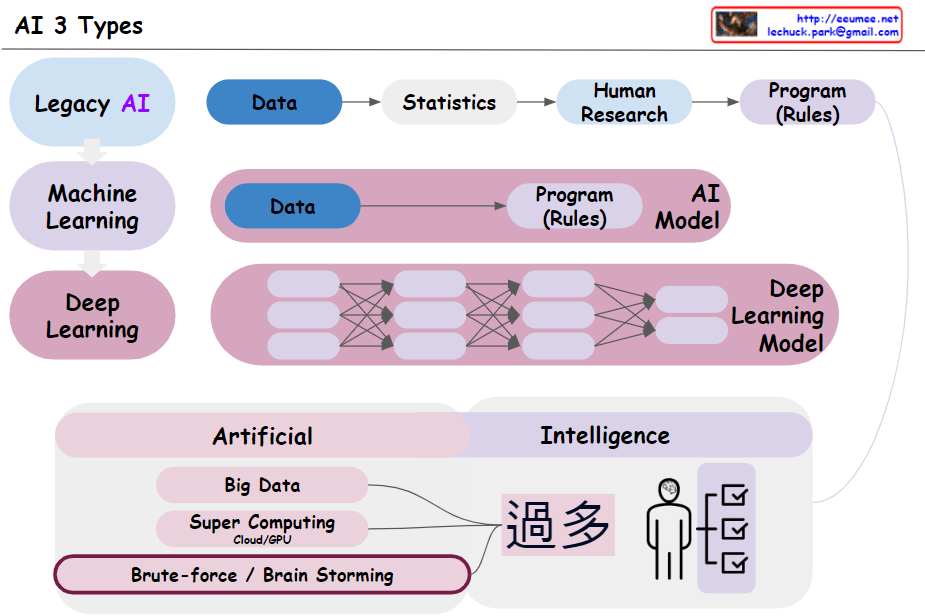

This image illustrates a three-stage path toward “Human-Like AI”: “Big Data -> Learning -> Good AI.”

The first stage is “Big Data,” which serves as the foundation for AI training. It requires collecting vast amounts of accurate and reliable data from various sources.
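As a rough illustration of what "accurate and reliable" can mean in practice, here is a minimal sketch of a filtering step applied to collected records. The `Record` fields, the `keep_reliable` function, and the three checks (empty text, unknown label, duplicates) are assumptions made for this example; the image does not specify any of them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    source: str            # where the record came from (hypothetical field)
    text: str              # the raw content
    label: Optional[str]   # None means the label is missing


def keep_reliable(records, allowed_labels):
    """Keep records with non-empty text, a known label,
    and no duplicate of an earlier record (ignoring case)."""
    seen = set()
    kept = []
    for rec in records:
        text = rec.text.strip()
        if not text:
            continue                      # empty content
        if rec.label not in allowed_labels:
            continue                      # missing or unknown label
        key = (text.lower(), rec.label)
        if key in seen:
            continue                      # same item collected from another source
        seen.add(key)
        kept.append(rec)
    return kept


raw = [
    Record("crawl", "A cat sitting on a mat", "cat"),
    Record("forum", "a cat sitting on a mat", "cat"),   # duplicate, different case
    Record("crawl", "   ", "dog"),                      # empty text
    Record("upload", "A dog in the park", None),        # missing label
    Record("upload", "A dog chasing a ball", "dog"),
]

clean = keep_reliable(raw, allowed_labels={"cat", "dog"})
print(len(raw), "->", len(clean))  # 5 -> 2
```

Real pipelines usually need far more than this (source vetting, label audits, near-duplicate detection), but the idea is the same: volume alone is not enough, and records are checked before they reach training.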

The second stage is “Learning,” where the big data is fed into deep learning algorithms and neural network models for training. This process requires immense computing power and optimized AI models.
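To make the idea of "learning from data" concrete, here is a minimal sketch of a single artificial neuron (a perceptron) trained on four labeled examples. It is a stand-in chosen for brevity, not the deep networks the image refers to; the function names, the AND-style dataset, and the hyperparameters are all assumptions made for this example.

```python
def predict(weights, bias, x):
    """Output 1 if the weighted sum of the inputs is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def train(samples, epochs=20, lr=1):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy dataset: the output is 1 only when both inputs are 1 (logical AND).
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(data)
predictions = [predict(weights, bias, x) for x, _ in data]
print(predictions)  # [0, 0, 0, 1]
```

Deep learning stacks very many such units and trains them with gradient-based methods on far larger datasets, which is where the demand for computing power comes from.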

The third stage is the result of that learning: a “Good AI” capable of tasks such as classifying and processing data.

However, the image suggests that the goal goes beyond an AI that merely handles “Many Numbers” and performs “Classification.” The ultimate aim is an AI that reaches “Human-Like” levels of intelligence and capability.

Crucially, the entire process is underpinned by the availability of “Accurate & Reliable DATA.” No matter how advanced the algorithms and computing power, if the data itself lacks quality and trustworthiness, achieving a truly “Human-Like AI” will be extremely challenging.
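The point can be illustrated with a toy experiment: the same algorithm is trained twice on the same values, once with correct labels and once with mostly wrong ones. This is a minimal sketch under invented assumptions; the nearest-centroid classifier, the numbers, and the deliberately extreme noise level are chosen only for illustration and do not come from the image.

```python
def fit_centroids(values, labels):
    """Average the training values of each class."""
    sums, counts = {}, {}
    for v, y in zip(values, labels):
        sums[y] = sums.get(y, 0.0) + v
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def classify(centroids, v):
    """Assign the class whose centroid is closest to v."""
    return min(centroids, key=lambda y: abs(centroids[y] - v))


def accuracy(centroids, values, labels):
    hits = sum(classify(centroids, v) == y for v, y in zip(values, labels))
    return hits / len(values)


train_values = [1, 2, 3, 7, 8, 9]
clean_labels = [0, 0, 0, 1, 1, 1]   # small values are class 0, large are class 1
noisy_labels = [1, 1, 0, 1, 0, 0]   # four of the six labels are wrong

test_values = [2.5, 4, 6, 7.5]
test_labels = [0, 0, 1, 1]

clean_acc = accuracy(fit_centroids(train_values, clean_labels), test_values, test_labels)
noisy_acc = accuracy(fit_centroids(train_values, noisy_labels), test_values, test_labels)
print(clean_acc, noisy_acc)  # 1.0 0.0
```

The algorithm and the compute are identical in both runs; only the labels differ. With unreliable labels the model faithfully learns the wrong mapping, which is the sense in which data quality sets the ceiling.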

The key message of the image, then, is that the quality and reliability of data will be the critical factor in how competitive future AI systems are. It presents securing accurate, trustworthy data as the fundamental requirement for realizing human-level artificial intelligence.