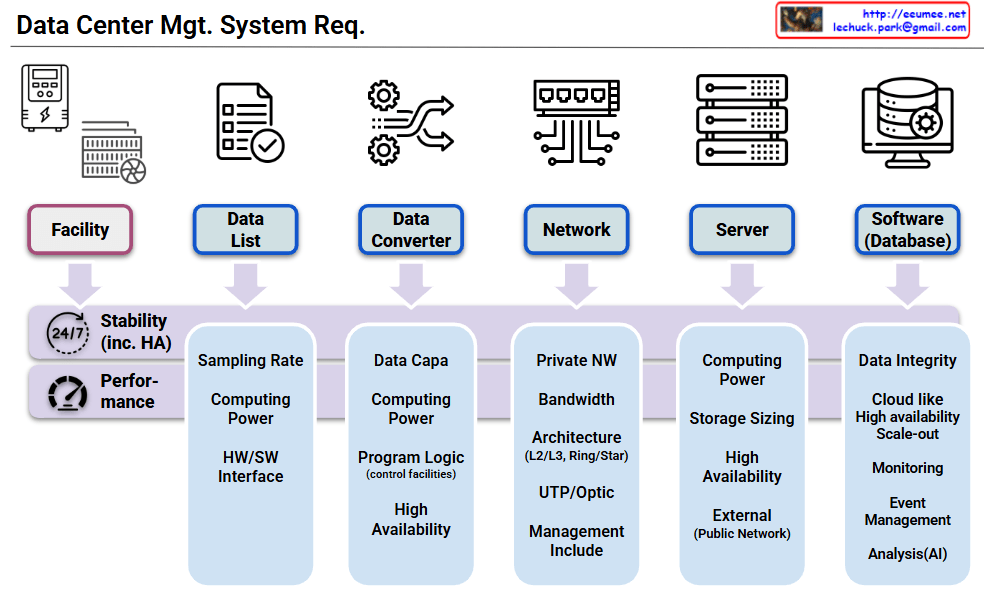

System Components (Top Level)

Six core components:

- Facility: Data center physical infrastructure

- Data List: Data management and cataloging

- Data Converter: Data format conversion

- Network: Network infrastructure

- Server: Server hardware

- Software (Database): Applications and database systems

Universal Mandatory Requirements

Fundamental requirements applied to ALL components:

- Stability (24/7 HA): High availability around the clock – all systems must operate continuously without interruption

- Performance: Optimal performance assurance – All components must meet required performance levels
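
To make the 24/7 HA requirement more concrete, an availability target can be translated into a yearly downtime budget. The sketch below is only illustrative: the source gives no numeric target, so the function name `allowed_downtime_minutes` and the percentages in the usage note are assumptions.

```python
# Minutes in a non-leap year: 365 days * 24 hours * 60 minutes.
MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target.

    The target is an assumed input; the source only says "24/7 HA".
    """
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    # Hypothetical targets, chosen only to show how the budget shrinks.
    for target in (99.0, 99.9, 99.99):
        print(f"{target}% -> {allowed_downtime_minutes(target):.1f} min/year")
```

A target of 99.9% leaves roughly 526 minutes (under nine hours) of downtime per year, which shows why "without interruption" usually has to be backed by redundancy in every component.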

Component-Specific Additional Requirements

1. Data List

- Sampling Rate, Computing Power, HW/SW Interface

2. Data Converter

- Data Capacity, Computing Power, Program Logic (control facilities), High Availability

3. Network

- Private network, Bandwidth, Architecture (L2/L3, Ring/Star), UTP/Optical cabling, Management included

4. Server

- Computing Power, Storage Sizing, High Availability, External (Public Network)

5. Software/Database

- Data Integrity, Cloud-like High Availability & Scale-out, Monitoring, Event Management, Analysis (AI)
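
The layering described above (two universal requirements plus component-specific additions) can be sketched as a small lookup structure. This is a minimal illustration, not part of the original diagram: the names `UNIVERSAL_REQUIREMENTS`, `COMPONENT_REQUIREMENTS`, and `requirements_for` are hypothetical, and Facility is given an empty tuple because the list above names no additional requirements for it.

```python
# Requirements that apply to every component.
UNIVERSAL_REQUIREMENTS: tuple[str, ...] = ("Stability (24/7 HA)", "Performance")

# Component-specific additions, copied from the lists above.
# Facility has no additional requirements listed in the source.
COMPONENT_REQUIREMENTS: dict[str, tuple[str, ...]] = {
    "Facility": (),
    "Data List": ("Sampling Rate", "Computing Power", "HW/SW Interface"),
    "Data Converter": (
        "Data Capacity",
        "Computing Power",
        "Program Logic (control facilities)",
        "High Availability",
    ),
    "Network": (
        "Private NW",
        "Bandwidth",
        "Architecture (L2/L3, Ring/Star)",
        "UTP/Optic",
        "Management",
    ),
    "Server": (
        "Computing Power",
        "Storage Sizing",
        "High Availability",
        "External (Public Network)",
    ),
    "Software (Database)": (
        "Data Integrity",
        "Cloud-like High Availability & Scale-out",
        "Monitoring",
        "Event Management",
        "Analysis (AI)",
    ),
}


def requirements_for(component: str) -> tuple[str, ...]:
    """Return the universal requirements followed by the component's own."""
    if component not in COMPONENT_REQUIREMENTS:
        raise KeyError(f"unknown component: {component}")
    return UNIVERSAL_REQUIREMENTS + COMPONENT_REQUIREMENTS[component]


if __name__ == "__main__":
    for name in COMPONENT_REQUIREMENTS:
        print(f"{name}: {', '.join(requirements_for(name))}")
```

Keeping the universal requirements in one place means a change to the baseline applies to all six components without touching their individual lists.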

This architecture treats stability and performance as prerequisites for data center operations: every component must meet both, and each component's specific requirements are built on top of these two foundations.

With Claude