From Perplexity with some prompting

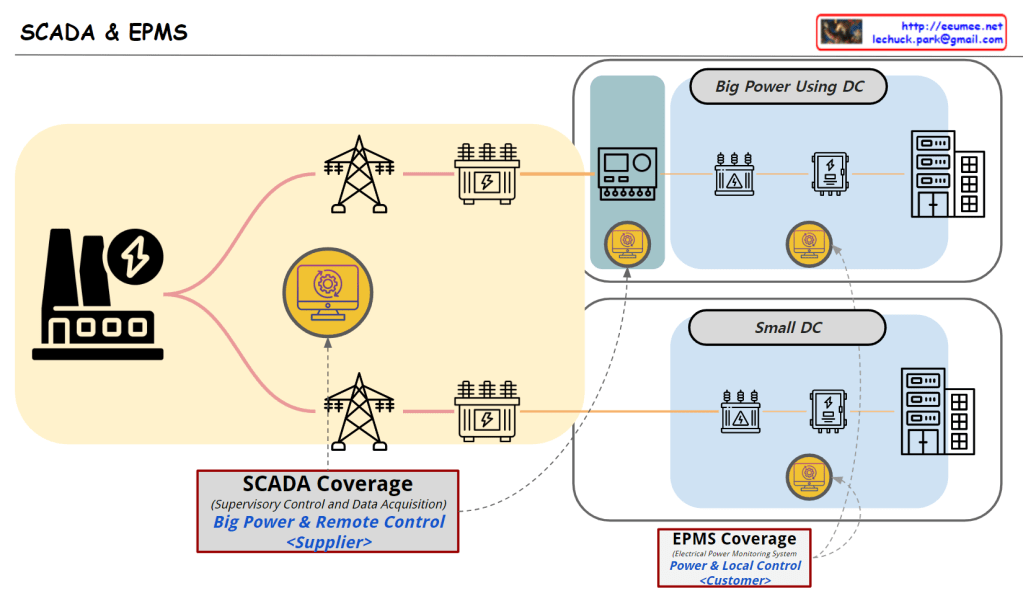

The image illustrates the roles and coverage of SCADA (Supervisory Control and Data Acquisition) and EPMS (Electrical Power Monitoring System) in power management for data centers.

SCADA System

- Target: Power suppliers and large power consumers (data centers with high power demand)

- Role:

  - Power Suppliers: Remotely monitor and control infrastructure such as power plants and substations to keep large-scale power grids stable.

  - Large Data Centers: Use a subset of SCADA functionality to manage complex power infrastructure and keep the power supply stable.

- Coverage: Large-scale power management and remote control

EPMS System

- Target: Small data centers

- Role:

  - Monitor and manage power usage within the data center to optimize energy efficiency.

  - Perform detailed local control of power management.

- Coverage: Power monitoring and local control

Key Distinctions

- SCADA focuses on large-scale power management and remote control, suitable for power suppliers and large consumers.

- EPMS focuses on power monitoring and local control, and is used primarily in small data centers to optimize energy consumption.

In conclusion, large data centers benefit from using both SCADA and EPMS to manage complex power infrastructure, while small data centers typically rely on EPMS alone for efficient energy management.
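The mapping described above can be written down as a small lookup table. The sketch below is only an illustration of the summary, not a model of any real SCADA or EPMS product: the names `Operator`, `PowerSystem`, `SYSTEMS_BY_OPERATOR`, and `systems_for` are all hypothetical, and the three operator categories are assumed to be the only ones that matter.

```python
from dataclasses import dataclass
from enum import Enum


class Operator(Enum):
    """Hypothetical operator categories taken from the summary above."""
    POWER_SUPPLIER = "power supplier"
    LARGE_DATA_CENTER = "large data center"
    SMALL_DATA_CENTER = "small data center"


@dataclass(frozen=True)
class PowerSystem:
    """A power management system and the coverage the summary assigns to it."""
    name: str
    coverage: str


SCADA = PowerSystem("SCADA", "large-scale power management and remote control")
EPMS = PowerSystem("EPMS", "power monitoring and local control")

# Assumed mapping: which systems each kind of operator typically relies on.
SYSTEMS_BY_OPERATOR = {
    Operator.POWER_SUPPLIER: (SCADA,),
    Operator.LARGE_DATA_CENTER: (SCADA, EPMS),
    Operator.SMALL_DATA_CENTER: (EPMS,),
}


def systems_for(operator: Operator) -> tuple[PowerSystem, ...]:
    """Return the systems the summary associates with the given operator."""
    return SYSTEMS_BY_OPERATOR[operator]


if __name__ == "__main__":
    for operator in Operator:
        names = ", ".join(system.name for system in systems_for(operator))
        print(f"{operator.value}: {names}")
```

Keeping the mapping in a table rather than in branching logic makes the overlap easy to see: large data centers are the only category that appears under both systems.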