One Summer day

The Computing for the Fair Human Life.

From Claude with some prompting

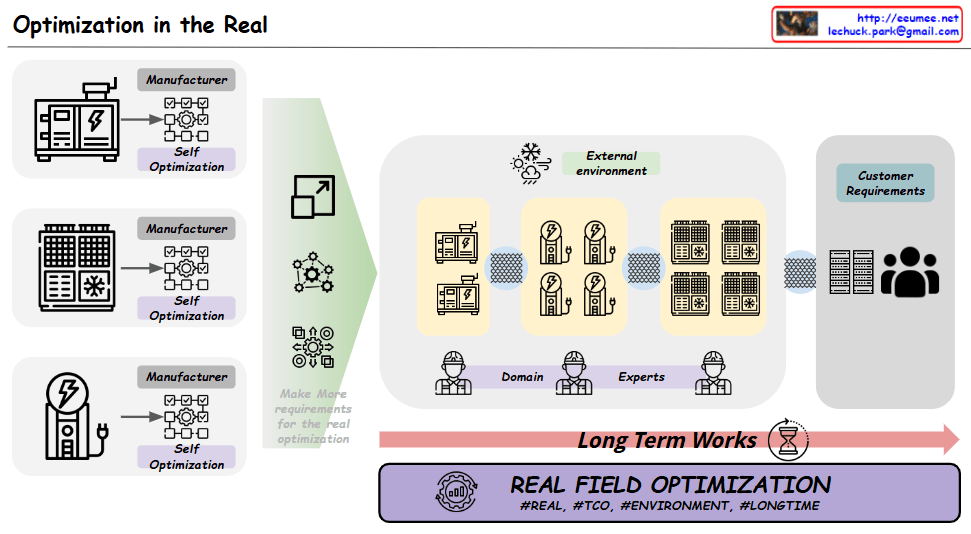

The Real Field Optimization diagram and its extended implications:

This comprehensive optimization approach goes beyond the efficiency of individual equipment, aiming for sustainable operation and value creation across the entire system. It is achieved through continuous improvement based on data from the real operating environment. This is the true meaning of “Real Field Optimization,” summarized by its hashtags #REAL, #TCO, #ENVIRONMENT, and #LONGTIME.

The diagram illustrates that while equipment-level optimization is fundamental, the real challenge and opportunity lie in optimizing the entire operational ecosystem over time, taking into account all stakeholders, environmental factors, and long-term sustainability. Reaching these broader goals therefore depends on data-driven optimization carried out in the real operating environment.
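To make the #TCO and #LONGTIME point concrete, here is a minimal, undiscounted total-cost-of-ownership comparison. The two options, their costs, and the `Option`/`tco`/`cheaper` names are hypothetical illustrations, not figures from the diagram; a real TCO model would usually also include discounting, downtime, and end-of-life costs.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    capex: float        # up-front purchase cost (hypothetical units)
    annual_opex: float  # yearly energy + maintenance cost (hypothetical units)


def tco(option: Option, years: int) -> float:
    """Undiscounted total cost of ownership over the given number of years."""
    return option.capex + option.annual_opex * years


def cheaper(a: Option, b: Option, years: int) -> str:
    """Name of the option with the lower TCO over `years` (ties go to `a`)."""
    return a.name if tco(a, years) <= tco(b, years) else b.name


# Hypothetical options: cheap to buy vs. efficient to run.
low_capex = Option("low-capex", capex=100.0, annual_opex=30.0)
efficient = Option("high-efficiency", capex=160.0, annual_opex=15.0)

for years in (1, 3, 5, 10):
    print(years, tco(low_capex, years), tco(efficient, years),
          cheaper(low_capex, efficient, years))
```

With these assumed numbers the cheaper-to-buy option wins over short horizons, while the efficient option is cheaper from year five onward, which is why judging equipment only by its purchase price or a single snapshot can mislead.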

From Claude with some prompting

This distributed system architecture can be broadly divided into five core areas:

1. CAP Theorem-Based System Structure

2. Data Replication Strategies

3. Scalability Patterns

4. Partition Tolerance

5. Fault Tolerance Mechanisms

Trade-off Management:

Service-Specific Approach:

Data Management:

System Stability:

In distributed system design, these elements should be implemented in an integrated way that takes their interconnections into account. Finding the right balance for the specific business requirements is essential.
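One common way to see the consistency/availability trade-off in data replication is quorum reads and writes, as used in Dynamo-style stores (a technique chosen here for illustration, not one named in the diagram). With N replicas, a write waits for W acknowledgments and a read queries R replicas. If R + W > N, every read set overlaps every write set, so at least one queried replica holds the latest acknowledged write; smaller quorums tolerate more unavailable replicas but may return stale data. The function names below are hypothetical.

```python
from itertools import combinations


def quorums_always_overlap(n: int, r: int, w: int) -> bool:
    """Brute-force check: does every R-replica read set intersect every W-replica write set?"""
    replicas = range(n)
    return all(
        set(write_set) & set(read_set)  # non-empty intersection is truthy
        for write_set in combinations(replicas, w)
        for read_set in combinations(replicas, r)
    )


def describe(n: int, r: int, w: int) -> str:
    """Summarize consistency and fault tolerance for one quorum setting."""
    overlap = quorums_always_overlap(n, r, w)
    return (f"N={n} R={r} W={w}: "
            f"{'overlapping' if overlap else 'may read stale data'}, "
            f"reads survive {n - r} down, writes survive {n - w} down")


# Three replicas with a few common settings.
for r, w in [(1, 1), (2, 2), (1, 3), (3, 1)]:
    print(describe(3, r, w))
```

The brute-force check agrees with the R + W > N rule, and the output makes the trade-off visible: R=2, W=2 keeps reads consistent while still tolerating one failed replica for both reads and writes.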

From Claude with some prompting

The key points from the diagram:

This structure suggests that while base LLM technology might be dominated by a few players, enterprises can maintain a competitive advantage through their unique private data assets and specialized implementations built on technologies such as RAG (Retrieval-Augmented Generation).

This creates a market where companies can differentiate themselves even while using the same foundation models, by leveraging their proprietary data and specific use-case implementations.
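The RAG idea can be sketched in a few lines: retrieve the private documents most relevant to a question and place them in the prompt sent to a shared foundation model. This is a toy sketch under stated assumptions: it ranks documents by bag-of-words cosine similarity with a tiny stop-word list instead of a learned embedding model and vector database, it only builds the prompt (no model is called), and the documents and function names are hypothetical.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"the", "a", "an", "of", "on", "at", "for", "does", "how", "is"}


def vectorize(text: str) -> Counter:
    """Term-frequency vector of lowercase alphanumeric tokens, minus stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(t for t in tokens if t not in STOP_WORDS)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the question with retrieved private context before it reaches the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


private_docs = [  # hypothetical proprietary knowledge base
    "Our warranty covers pump model X200 for three years.",
    "The cafeteria opens at 8 am on weekdays.",
    "Model X200 pumps require a filter change every six months.",
]
print(build_prompt("How often does the X200 filter need changing?", private_docs))
```

The foundation model stays the same for every company; what differs is the private corpus and how well the retrieval step selects from it, which is where the differentiation described above comes from.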

From Claude with some prompting

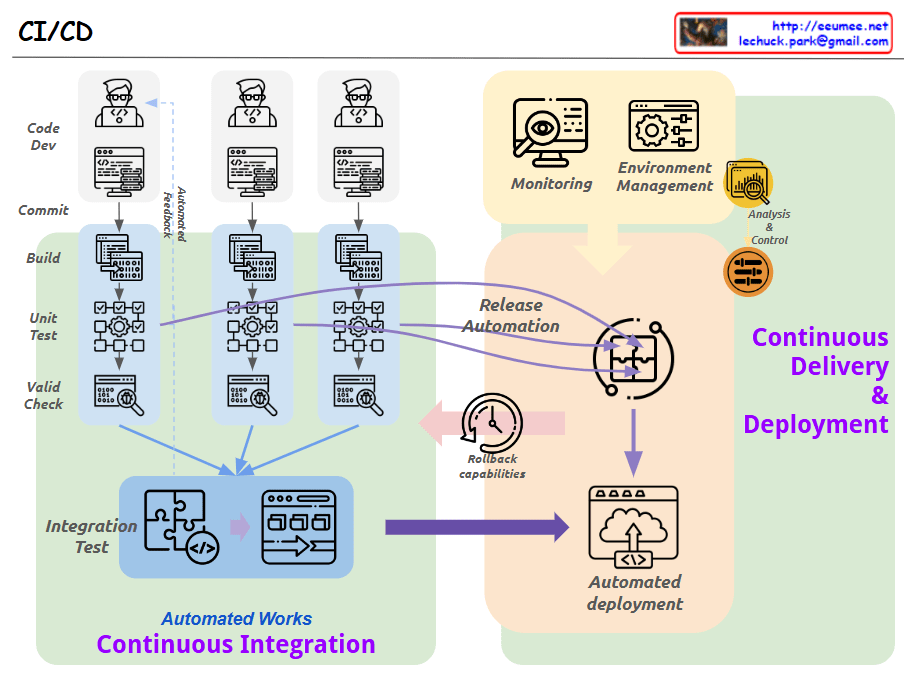

Let me explain this CI/CD (Continuous Integration/Continuous Delivery & Deployment) pipeline diagram:

This diagram illustrates the automated workflow in modern software development, from code creation to deployment. Each stage is automated, improving the efficiency and reliability of the development process.

Key highlights:

The flow shows three parallel development streams that converge into integration testing, followed by release automation and deployment. The entire process is monitored and controlled with proper environment management.
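The flow just described (parallel streams that converge into integration testing, then release and deployment) can be modeled as a small fail-fast orchestrator. This is a minimal sketch, not a real CI system: the component names and the stage callables are hypothetical placeholders for actual build, test, release, and deploy jobs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_pipeline(
    components: list[str],
    build_and_test: Callable[[str], bool],
    integrate: Callable[[list[str]], bool],
    release: Callable[[], bool],
    deploy: Callable[[], bool],
) -> list[str]:
    """Run per-component CI in parallel, then integration, release, and deploy.

    Stops at the first failing stage and returns a log of stage results.
    """
    log: list[str] = []
    # Parallel streams: one build/unit-test job per component (pool.map keeps input order).
    with ThreadPoolExecutor(max_workers=len(components)) as pool:
        results = dict(zip(components, pool.map(build_and_test, components)))
    for name, ok in results.items():
        log.append(f"ci:{name}:{'ok' if ok else 'FAILED'}")
    if not all(results.values()):
        log.append("pipeline stopped before integration")
        return log
    # Sequential stages after the streams converge.
    stages = [
        ("integration", lambda: integrate(components)),
        ("release", release),
        ("deploy", deploy),
    ]
    for stage, run in stages:
        ok = run()
        log.append(f"{stage}:{'ok' if ok else 'FAILED'}")
        if not ok:
            break
    return log


# Hypothetical streams with always-passing placeholder jobs.
components = ["frontend", "backend", "database"]
print(run_pipeline(components, lambda c: True, lambda cs: True, lambda: True, lambda: True))
```

Gating integration on every stream passing is what keeps a broken component from reaching release automation, which mirrors the quality control the pipeline is meant to enforce.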

This CI/CD pipeline is crucial in modern DevOps practices, helping organizations:

The pipeline emphasizes automation at every stage, making software development more efficient and reliable while maintaining quality control throughout the process.

From Claude with some prompting

An explanation of this RAID 0 (Striping) diagram:

RAID 0 is best suited for situations where high performance is crucial but data safety is less critical, such as temporary work files, cache storage, or environments where data can be easily recreated or restored from another source.
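Striping can be sketched as round-robin placement of fixed-size chunks across disks, which is why reads and writes can proceed on all disks in parallel. The model below is a simplified illustration: in-memory byte arrays stand in for disks, the chunk (stripe unit) size is chosen by the caller, and `stripe`, `unstripe`, and `locate` are hypothetical names rather than any real RAID API.

```python
def locate(chunk_index: int, num_disks: int) -> tuple[int, int]:
    """Map a logical chunk to (disk index, chunk offset on that disk) under RAID 0."""
    return chunk_index % num_disks, chunk_index // num_disks


def stripe(data: bytes, num_disks: int, chunk: int) -> list[bytearray]:
    """Split data into chunks and deal them round-robin across the disks."""
    disks = [bytearray() for _ in range(num_disks)]
    for start in range(0, len(data), chunk):
        disk, _ = locate(start // chunk, num_disks)
        disks[disk] += data[start:start + chunk]
    return disks


def unstripe(disks: list[bytearray], chunk: int) -> bytes:
    """Reassemble the original data by reading chunks back in round-robin order."""
    out = bytearray()
    offsets = [0] * len(disks)
    d = 0
    while offsets[d] < len(disks[d]):
        out += disks[d][offsets[d]:offsets[d] + chunk]
        offsets[d] += chunk
        d = (d + 1) % len(disks)
    return bytes(out)


disks = stripe(b"ABCDEFGHIJKL", num_disks=3, chunk=2)
print([bytes(d) for d in disks])  # [b'ABGH', b'CDIJ', b'EFKL']
print(unstripe(disks, chunk=2))   # b'ABCDEFGHIJKL'
```

Because there is no parity or mirror copy, losing any one of these disks removes every third chunk of the data, so the whole volume becomes unrecoverable, which matches the guidance to use RAID 0 only for data that can be recreated.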