With a Claude

Here is an overview of the Software Defined Power Distribution (SDPD) system, including the standards and protocols shown in the image:

- SDN Similarity

  - Just as Software-Defined Networking (SDN) manages network traffic through a centralized software controller that is separated from the forwarding hardware, SDPD applies the same software-defined principle to power distribution

- Key Components

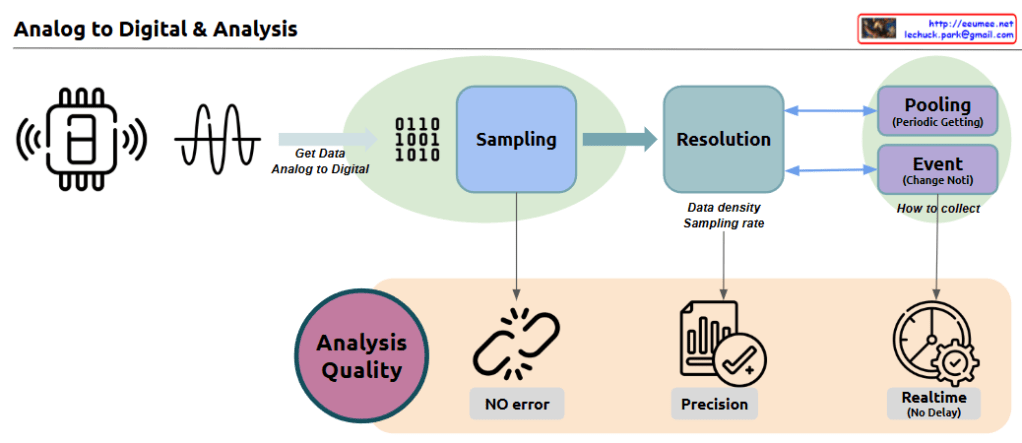

- Real-time Monitoring: Power consumption and system status analysis using IoT sensors and AI

- Centralized Control: Power distribution optimization through an integrated platform

- Flexibility/Scalability: Software-based upgrades and expansion

- Energy Efficiency: Data center power optimization and rapid fault response

- Standards and Protocols

  - IEC 61850: communication standard for substation automation

  - IEEE 2030.5: Smart Energy Profile (SEP 2.0) application protocol standard

  - Modbus/DNP3: industrial communication protocols widely used in SCADA systems

  - OpenADR: Open Automated Demand Response standard
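Of the protocols listed, Modbus is the simplest to show concretely. The sketch below builds a Modbus TCP "Read Holding Registers" request (function code 0x03) and decodes the matching response using only Python's `struct` module; no network I/O is performed. The function names, unit ID, and register addresses are hypothetical: which registers hold voltage, current, or breaker state on a given meter or PDU is defined by the device vendor's register map, not by Modbus itself.

```python
import struct

READ_HOLDING_REGISTERS = 0x03  # Modbus function code for "Read Holding Registers"


def build_read_request(transaction_id: int, unit_id: int, start_addr: int, count: int) -> bytes:
    """Build a Modbus TCP frame (MBAP header + PDU) that reads `count` holding registers."""
    if not 1 <= count <= 125:
        raise ValueError("a single read may request 1 to 125 registers")
    # PDU: function code (1 byte), starting address (2 bytes), register count (2 bytes)
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_addr, count)
    # MBAP header: transaction id, protocol id (always 0 for Modbus),
    # length (number of bytes that follow: unit id + PDU), unit id
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_response(frame: bytes) -> list[int]:
    """Decode the 16-bit register values from a Read Holding Registers response."""
    if len(frame) < 9:
        raise ValueError("frame too short")
    _tid, proto, length, _unit = struct.unpack(">HHHB", frame[:7])
    if proto != 0 or length != len(frame) - 6:
        raise ValueError("malformed MBAP header")
    function, second = frame[7], frame[8]
    if function & 0x80:  # exception responses set the high bit of the function code
        raise ValueError(f"device returned Modbus exception code {second}")
    if function != READ_HOLDING_REGISTERS:
        raise ValueError(f"unexpected function code {function:#04x}")
    data = frame[9:]
    if len(data) != second or second % 2:  # for a normal reply, `second` is the byte count
        raise ValueError("byte count does not match payload")
    return list(struct.unpack(f">{second // 2}H", data))
```

For example, `build_read_request(1, 1, 0, 2).hex()` produces `000100000006010300000002`, and decoding the response frame `00010000000701030400e60032` returns `[230, 50]`. Keeping frame encoding separate from transport makes it easy to unit-test before wiring it to a socket or an existing Modbus client library.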

Final Summary: Why Software Defined X (SDx) is necessary for power distribution

- Modern power systems face increasing complexity and require real-time response capabilities

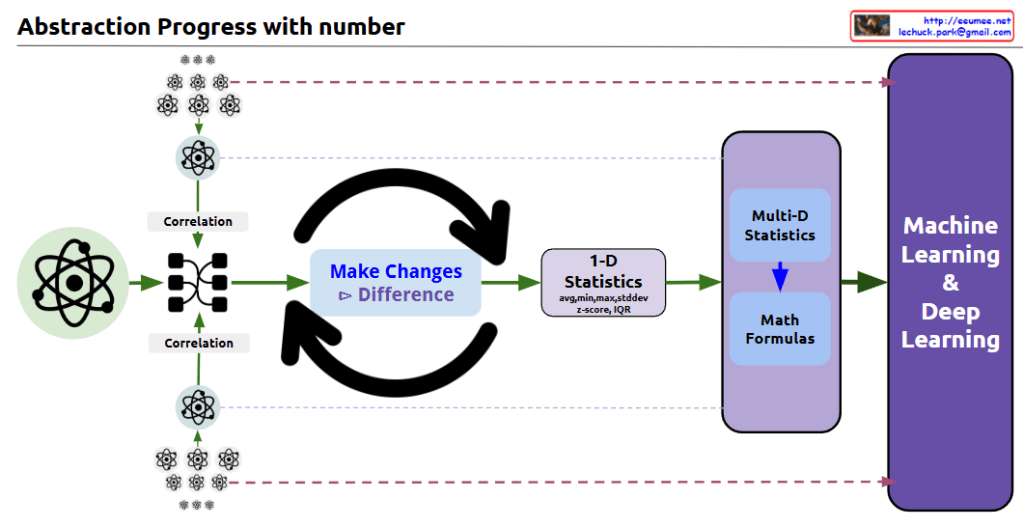

- Data-driven decision making and automated control are essential

- Software Defined approach (SDPD) provides:

- Real-time data collection/analysis for optimized power flow

- Rapid response and efficient management through centralized control

- Flexible system expansion and upgrades through software-based architecture

- Achievement of improved energy efficiency and reduced operational costs
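The "rapid response through centralized control" point can be made concrete with a small sketch. Because a central controller holds one software view of every feeder, it can re-home the loads of a faulted feeder in a single pass. Everything here is an illustrative assumption: the `Feeder` and `CentralController` names, the kW figures, and the greedy rule (move the largest load first, to the healthy feeder with the most headroom) are not taken from any SDPD product or standard.

```python
from dataclasses import dataclass, field


@dataclass
class Feeder:
    """Hypothetical feeder model: rated capacity plus the loads it currently serves."""
    name: str
    capacity_kw: float
    healthy: bool = True
    loads: dict[str, float] = field(default_factory=dict)  # load name -> kW

    @property
    def used_kw(self) -> float:
        return sum(self.loads.values())

    @property
    def headroom_kw(self) -> float:
        # A faulted feeder offers no spare capacity
        return self.capacity_kw - self.used_kw if self.healthy else 0.0


class CentralController:
    """Hypothetical SDPD-style controller with a single view of all feeders."""

    def __init__(self, feeders: list[Feeder]):
        self.feeders = {f.name: f for f in feeders}

    def report_fault(self, name: str) -> list[str]:
        """Mark a feeder as faulted and move its loads to healthy feeders.

        Returns the names of loads that could not be re-homed and must be shed.
        """
        failed = self.feeders[name]
        failed.healthy = False
        shed = []
        # Greedy heuristic: largest loads first, each to the feeder with most headroom
        for load, kw in sorted(failed.loads.items(), key=lambda item: -item[1]):
            target = max(
                (f for f in self.feeders.values() if f.healthy),
                key=lambda f: f.headroom_kw,
                default=None,
            )
            if target is not None and target.headroom_kw >= kw:
                target.loads[load] = kw
            else:
                shed.append(load)
        failed.loads.clear()
        return shed
```

For instance, with feeder A (100 kW) serving `rack1` at 40 kW and `rack2` at 35 kW, feeder B (80 kW) serving 50 kW, and feeder C (60 kW) serving 20 kW, a fault on A moves `rack1` to C and reports `rack2` for shedding, since B has only 30 kW of headroom. A production controller would also respect protection settings and switching constraints, which this sketch ignores.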

The software-defined approach has become essential in the power sector, just as it has in networking, because it enables:

- Intelligent resource allocation

- Improved system visibility

- Enhanced operational efficiency

- Better fault tolerance and recovery

- Cost-effective scaling and updates
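"Intelligent resource allocation" can take many forms; one simple, hedged illustration is a priority-based allocator. When available capacity drops (for example, during a demand-response event of the kind OpenADR is designed to signal), the controller grants power to the most critical loads first and curtails the rest. The `Request` type, the priority convention (lower number means more critical), and the load names are hypothetical; a real system would also enforce contractual, safety, and minimum-load constraints.

```python
from typing import NamedTuple


class Request(NamedTuple):
    name: str
    priority: int     # assumed convention: lower number = more critical
    demand_kw: float


def allocate(requests: list[Request], available_kw: float) -> dict[str, float]:
    """Grant power in priority order; lower-priority loads are curtailed first."""
    grants: dict[str, float] = {}
    remaining = available_kw
    # sorted() is stable, so equal-priority requests keep their input order
    for req in sorted(requests, key=lambda r: r.priority):
        granted = min(req.demand_kw, remaining)
        grants[req.name] = granted
        remaining -= granted
    return grants
```

With 100 kW available and requests for cooling (priority 0, 30 kW), servers (priority 1, 60 kW), and lighting (priority 2, 20 kW), `allocate` grants 30, 60, and 10 kW respectively: only the least critical load is curtailed. Strict priority is the easiest policy to reason about; proportional or max-min fair sharing are common alternatives when loads of equal importance compete.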

This demonstrates why a data-centric, software-defined approach is crucial for modern power systems to achieve efficiency, reliability, and scalability.