From Claude with some prompting

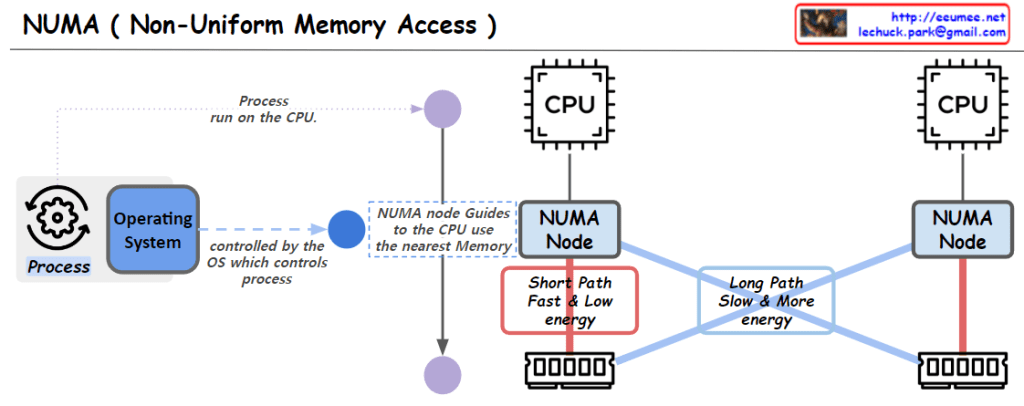

The image explains how memory is managed and accessed in computing systems. Any memory management approach, whether in hardware or software, needs a defined unit of operation.

At the hardware level, the physical Memory Access Unit is the machine word, whose size follows the CPU's bit width: 4 bytes on a 32-bit CPU and 8 bytes on a 64-bit CPU.
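As a rough illustration of the word as a unit, the sketch below reads the native pointer size and rounds an address up to a word boundary. It assumes pointer width matches the word width, which holds on common 32-bit and 64-bit platforms but is not guaranteed everywhere. The names `word_size_bytes` and `align_up` are made up for this example.

```python
import struct


def word_size_bytes() -> int:
    # "P" is the struct format code for a native pointer (void *).
    # Assumption: pointer size equals the machine word size.
    return struct.calcsize("P")


def align_up(addr: int, unit: int) -> int:
    # Round addr up to the next multiple of unit.
    # Only valid when unit is a power of two.
    return (addr + unit - 1) & ~(unit - 1)


if __name__ == "__main__":
    print("word size in bytes:", word_size_bytes())
    print("13 aligned to 8:", align_up(13, 8))
```

An address that is already aligned comes back unchanged, so `align_up(16, 8)` stays at 16.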

At the software/operating system level, the Paging Unit is the page, typically 4KB, which is the granularity at which the paging mechanism maps virtual memory to physical memory.
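To make the page unit concrete, here is a minimal sketch of the arithmetic behind paging, assuming a 4KB page. It splits an address into a page number and an offset, and counts how many pages a request of a given size would occupy. `split_address` and `pages_needed` are hypothetical helper names, not part of any operating system API.

```python
PAGE_SIZE = 4096                          # assumed 4KB page
PAGE_SHIFT = PAGE_SIZE.bit_length() - 1   # 12 bits of offset for 4KB


def split_address(vaddr: int) -> tuple[int, int]:
    # High bits select the page, low bits are the offset inside it.
    return vaddr >> PAGE_SHIFT, vaddr & (PAGE_SIZE - 1)


def pages_needed(nbytes: int) -> int:
    # Round a byte count up to whole pages.
    return (nbytes + PAGE_SIZE - 1) // PAGE_SIZE


if __name__ == "__main__":
    page, offset = split_address(0x12345)
    print(hex(page), hex(offset))   # 0x12 0x345
    print(pages_needed(10000))      # 3
```

Even a 1-byte request costs a full page at this level, which is why finer-grained allocators sit on top of it.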

Building upon these foundational units, additional memory management techniques are employed to handle memory regions of varying sizes:

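- Smaller units: Byte-addressable memory and bit operations, which work below the word.

- Larger units: the Buddy System, which manages power-of-two blocks of pages, and SLAB allocation, which builds caches of fixed-size objects on top of those pages.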

Essentially, well-defined units at the hardware and software layers are a prerequisite for comprehensive and scalable memory management. They serve as the basis for memory control mechanisms across different levels of abstraction and size requirements.