Visual Analysis: RNN vs Transformer

Visual Structure Comparison

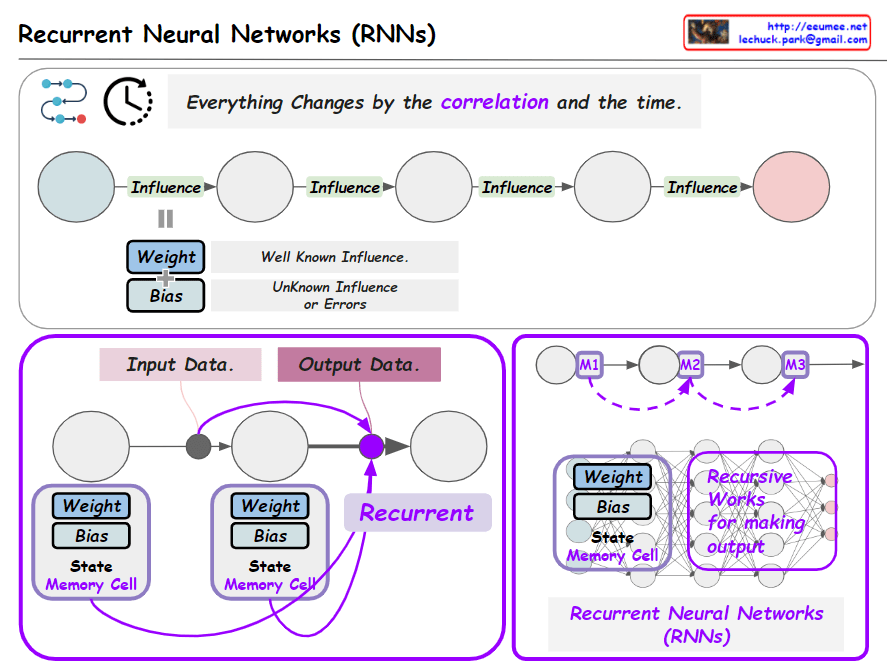

RNN (Top): Sequential Chain

- Linear flow: Circular nodes connected left-to-right

- Hidden states: Each node processes sequentially

- Attention weights: Numbers (2,5,11,4,2) show token importance

- Bottleneck: Must process one token at a time

Transformer (Bottom): Parallel Grid

- Matrix layout: 5×5 grid of interconnected nodes

- Self-attention: All tokens connect to all others simultaneously

- Multi-head: 5 parallel attention heads working together

- Position encoding: Separate blue boxes handle sequence order

Key Visual Insights

Processing Pattern

- RNN: Linear chain → Sequential dependency

- Transformer: Interconnected grid → Parallel freedom

Information Flow

- RNN: Single path with accumulating states

- Transformer: Multiple simultaneous pathways

Attention Mechanism

- RNN: Weights applied to existing sequence

- Transformer: Direct connections between all elements

Design Effectiveness

The diagram succeeds by using:

- Contrasting layouts to show architectural differences

- Color coding to highlight attention mechanisms

- Clear labels (“Sequential” vs “Parallel Processing”)

- Visual metaphors that make complex concepts intuitive

The grid vs chain visualization immediately conveys why Transformers enable faster, more scalable processing than RNNs.

Summary

This diagram effectively illustrates the fundamental shift from sequential to parallel processing in neural architecture. The visual contrast between RNN’s linear chain and Transformer’s interconnected grid clearly demonstrates why Transformers revolutionized AI by enabling massive parallelization and better long-range dependencies.

With Claude