Change-Based Monitoring System Analysis

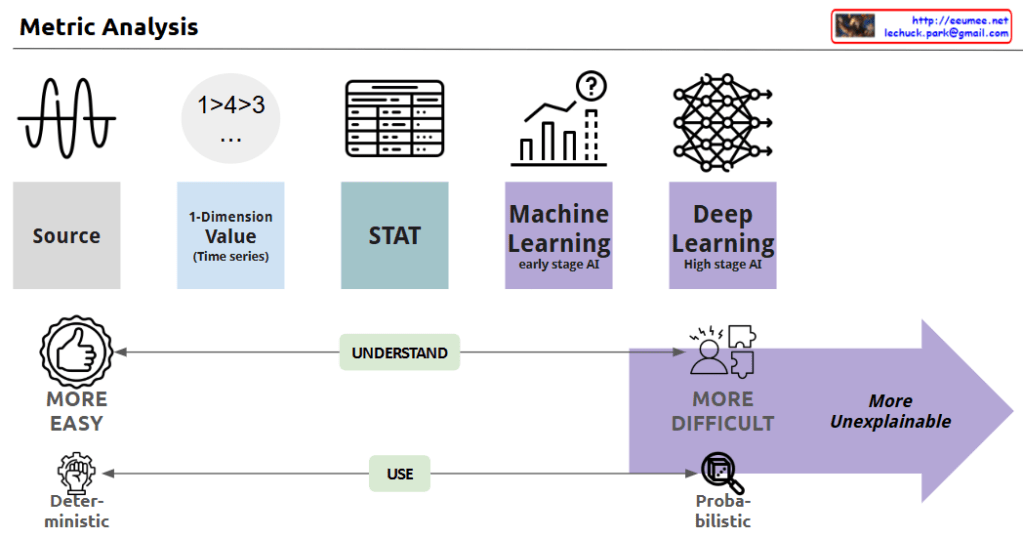

This diagram illustrates a systematic framework summarized as “Monitoring is from changes”: monitoring starts by detecting changes in data. The framework is hierarchical, beginning with simple methods whose results are certain and progressing toward increasingly complex analytical techniques.

Flow of Major Analysis Stages:

- Single-Change Detection:

  - The most fundamental level: detecting an individual change in a value, such as a reading moving from 5 to 7.

  - This stage captures immediate, unambiguous variations.

- Trend Analysis:

  - Recognizes patterns in data over time.

  - Moves beyond individual changes to capture the direction and rate of change across a series of values.

- Statistical Analysis:

  - Applies more rigorous mathematical methods to interpret data.

  - Uses means, variances, correlations, and other statistical measures to characterize what is normal and how variables relate to one another.

- Deep Learning:

  - The most sophisticated stage, using neural network models to discover hidden patterns.

  - Capable of learning complex relationships from large volumes of data.
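The first three stages can be illustrated with a minimal Python sketch, assuming a metric arrives as a plain list of numbers. The function names (`single_change`, `trend_slope`, `z_score`) are hypothetical; the least-squares slope is just one way to quantify a trend, and the z-score is one of many possible statistical measures. The deep-learning stage is omitted because it requires a trained model.

```python
import math
from statistics import mean, pstdev
from typing import Optional, Sequence


def single_change(prev: float, curr: float) -> Optional[float]:
    """Stage 1: return the delta if the value changed, else None."""
    delta = curr - prev
    return delta if delta != 0 else None


def trend_slope(values: Sequence[float]) -> float:
    """Stage 2: least-squares slope of the values against their sample index."""
    n = len(values)
    if n < 2:
        raise ValueError("a trend needs at least two samples")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def z_score(history: Sequence[float], value: float) -> float:
    """Stage 3: distance of `value` from the history's mean, in standard deviations."""
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        # A flat history has no spread, so any departure is infinitely far.
        return 0.0 if value == mu else math.copysign(math.inf, value - mu)
    return (value - mu) / sigma


readings = [5, 5, 6, 7, 8]
print(single_change(5, 7))    # 2
print(trend_slope(readings))  # about 0.8 units per sample
print(z_score(readings, 12))  # well above 3
```

Each function looks only at what its stage needs: one pair of values, then a series, then the distribution of a series.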

Progression of Detection Stages:

- Change Detection:

  - The initial stage, detecting basic changes in the system.

  - Identifies numerical variations that deviate from baseline values (e.g., 5→7).

  - Serves as the starting point of the monitoring process and the foundation for more complex analyses.

- Anomaly Detection:

  - A more advanced stage than change detection, identifying data points that deviate from general patterns or expected ranges.

  - Marked in the diagram with a warning icon, representing early signs of potential issues.

  - Uses statistical analysis and trend data to detect values outside the normal range.

- Abnormal (Error) Detection:

  - The most severe level, identifying actual errors or failures in the system.

  - Marked in the diagram with an X, signifying critical issues that require immediate action.

  - An anomaly may be escalated to a failure when it persists or exceeds a defined threshold.
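One way the escalation from change to anomaly to error might be encoded is sketched below in Python. The rules are assumptions for illustration, not taken from the diagram: a sample is an anomaly when its z-score against recent normal samples exceeds `z_limit`, and it becomes an error when anomalies persist for `persist` consecutive samples or the value crosses `hard_limit`. The names `Level` and `classify` and all default values are hypothetical.

```python
from enum import Enum
from statistics import mean, pstdev
from typing import List, Sequence


class Level(Enum):
    NORMAL = 0   # no change from the previous sample
    CHANGE = 1   # value differs from the previous sample
    ANOMALY = 2  # warning: outside the expected statistical range
    ERROR = 3    # failure: anomaly persisted, or a hard limit was crossed


def classify(values: Sequence[float], window: int = 10, z_limit: float = 3.0,
             persist: int = 3, hard_limit: float = float("inf")) -> List[Level]:
    """Label each sample with the most severe level it reaches (illustrative rules)."""
    levels: List[Level] = []
    baseline: List[float] = []  # recent samples judged normal
    streak = 0                  # consecutive anomalous samples
    for i, v in enumerate(values):
        history = baseline[-window:]
        anomalous = False
        if len(history) >= 2:
            mu, sigma = mean(history), pstdev(history)
            # A flat history (sigma == 0) gives no z-score, so in this sketch
            # such a deviation is reported only as a change.
            anomalous = sigma > 0 and abs(v - mu) / sigma > z_limit
        if anomalous or v > hard_limit:
            streak += 1
            if v > hard_limit or streak >= persist:
                levels.append(Level.ERROR)
            else:
                levels.append(Level.ANOMALY)
        else:
            streak = 0
            baseline.append(v)  # only normal samples update the baseline
            changed = i > 0 and v != values[i - 1]
            levels.append(Level.CHANGE if changed else Level.NORMAL)
    return levels


print([lvl.name for lvl in classify([5, 6, 5, 6, 5, 6, 20, 21, 22, 5])])
# ['NORMAL', 'CHANGE', 'CHANGE', 'CHANGE', 'CHANGE', 'CHANGE',
#  'ANOMALY', 'ANOMALY', 'ERROR', 'CHANGE']
```

Here the jumps to 20 and 21 are flagged as anomalies and the third consecutive outlier, 22, as an error. Anomalous samples are kept out of the baseline so that a sustained fault does not inflate the variance and hide itself.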

Supporting Functions:

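- Adding New Relative Data: Continuously incorporating new, related data sources to improve analytical accuracy.

- Higher Resolution: Collecting data at finer granularity (for example, shorter sampling intervals) so that short-lived changes are not averaged away, improving analytical precision.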
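Both supporting functions can be illustrated with a short Python sketch, assuming evenly sampled readings. `downsample` (hypothetical) averages fixed-size buckets to mimic coarser resolution, and `pearson` (hypothetical) computes a Pearson correlation as one possible way to judge whether a newly added metric is related to the monitored one. The CPU and latency series are made-up example data.

```python
from statistics import mean
from typing import List, Sequence


def downsample(values: Sequence[float], factor: int) -> List[float]:
    """Average consecutive buckets of `factor` samples to simulate coarser resolution."""
    return [mean(values[i:i + factor]) for i in range(0, len(values), factor)]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between a monitored metric and a candidate related metric."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two samples")
    x_bar, y_bar = mean(xs), mean(ys)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    var_x = sum((x - x_bar) ** 2 for x in xs)
    var_y = sum((y - y_bar) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (var_x * var_y) ** 0.5


fine = [5] * 7 + [20] + [5] * 4
print(max(fine))                 # 20: the spike is visible at full resolution
print(max(downsample(fine, 4)))  # 8.75: averaging four samples dilutes it

cpu = [10, 20, 30, 40]           # hypothetical monitored metric
latency = [100, 150, 210, 260]   # hypothetical related metric
print(pearson(cpu, latency))     # close to 1: strongly related
```

With an alert threshold of, say, 10, the spike would trigger only at full resolution, which is the kind of detail that finer granularity preserves.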

This framework progresses logically from simple, certain methods to increasingly complex analyses. The hierarchical detection process, from change detection through anomaly detection to error detection, shows how a monitoring system identifies and responds to increasingly serious issues.

With Claude