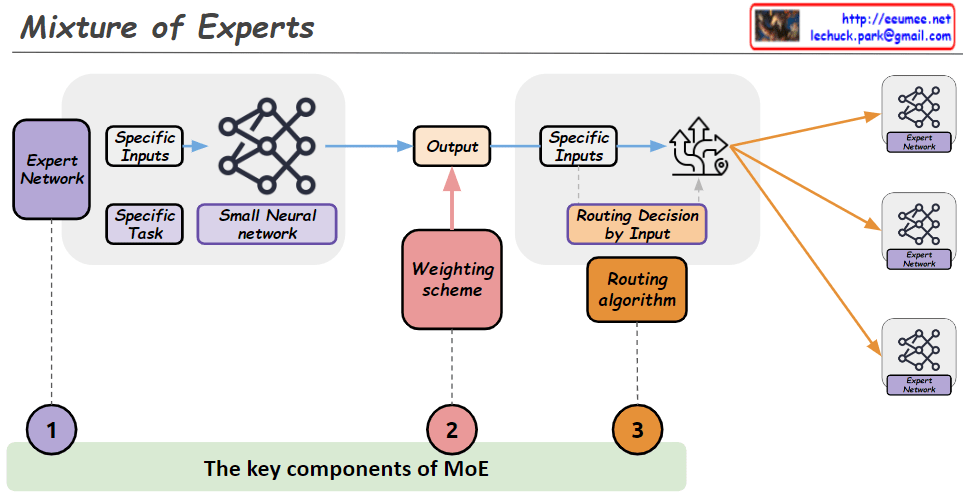

This image is a conceptual diagram of an “MoE (Mixture of Experts)” system. It illustrates the parallels between how human experts collaborate and how AI models with a Mixture-of-Experts architecture are organized.

The key points of the diagram are:

- The upper section shows a traditional model of human expert collaboration:
    - A user presents a complex problem (“Please analyze the problem now”).
    - An intermediary agent passes the problem to the appropriate experts (A, B, C Experts).
    - Each expert analyzes the problem and proposes solutions from their own specialized domain.
- The lower section shows how the same structure is implemented in AI:
    - A user enters a question or command.
    - The LLM Foundation Expert Model processes the input.
    - The Routing Expert Model distributes tasks to the appropriate specialized models (A, B, C Expert Models).
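The routing step in the lower section can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than the system in the diagram: it assumes a linear router (one weight vector per expert), softmax gating, and top-k selection, a combination commonly used in neural MoE layers. The class name `MixtureOfExperts`, the gate weights, and the three toy experts are invented for the example. Note also that in neural MoE models the router usually scores each token's hidden representation inside a layer, rather than sending a whole question to a separate model.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


class MixtureOfExperts:
    """Toy MoE layer (hypothetical): a linear router picks the top-k experts
    for an input and mixes their outputs with renormalized gate weights.
    Assumes every expert returns a vector the same length as its input."""

    def __init__(self, gate_weights: List[Vector],
                 experts: List[Callable[[Vector], Vector]], top_k: int = 2):
        if len(gate_weights) != len(experts):
            raise ValueError("need one gate weight vector per expert")
        if not 1 <= top_k <= len(experts):
            raise ValueError("top_k must be between 1 and the number of experts")
        self.gate_weights = gate_weights
        self.experts = experts
        self.top_k = top_k

    def route(self, x: Vector) -> List[Tuple[int, float]]:
        """Score every expert, keep the top-k, and renormalize their weights."""
        probs = softmax([dot(w, x) for w in self.gate_weights])
        ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        chosen = ranked[: self.top_k]
        norm = sum(probs[i] for i in chosen)
        return [(i, probs[i] / norm) for i in chosen]

    def __call__(self, x: Vector) -> Vector:
        """Run only the selected experts and return their weighted sum."""
        out = [0.0] * len(x)
        for i, weight in self.route(x):
            y = self.experts[i](x)
            out = [o + weight * v for o, v in zip(out, y)]
        return out


# Three hypothetical experts (A, B, C) acting on a 2-dimensional input.
experts = [
    lambda x: [2 * v for v in x],  # Expert A: doubles the input
    lambda x: [v + 1 for v in x],  # Expert B: shifts the input by 1
    lambda x: [-v for v in x],     # Expert C: negates the input
]
gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
moe = MixtureOfExperts(gates, experts, top_k=2)

x = [3.0, 1.0]
print(moe.route(x))  # (expert index, weight) pairs for the chosen experts
print(moe(x))        # weighted combination of the chosen experts' outputs
```

With this sample input the router selects Experts A and B and never calls Expert C. Running only the selected experts is what lets MoE models grow their total parameter count without a proportional increase in the compute spent on each input.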

This diagram emphasizes that human expert systems and AI MoE architectures share the same basic structure. Drawing on several experts' knowledge to solve complex problems has long been common practice in human organizations, and the MoE architecture applies this collaborative model to AI systems. The core message is that specialized AI models are taking on the roles that human experts have traditionally filled.

This perspective suggests that mimicking human problem-solving approaches can be effective in AI system design.

With Claude