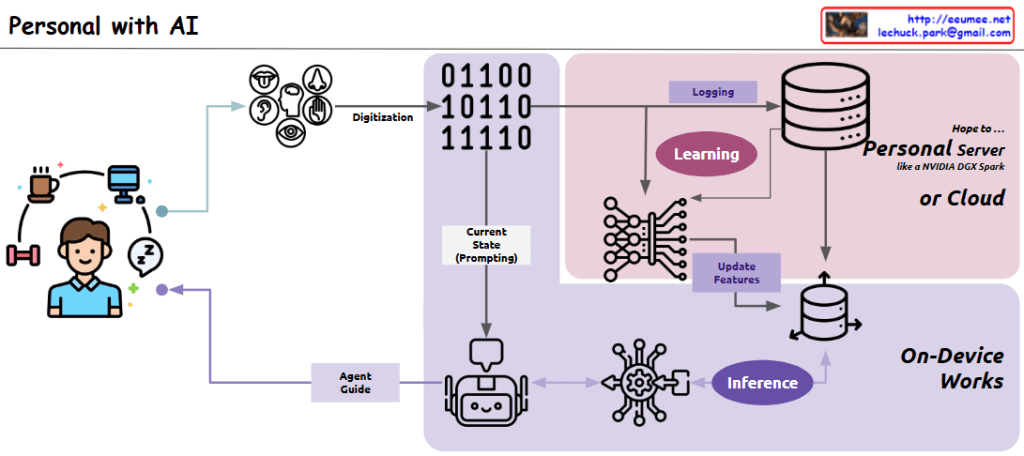

This diagram illustrates a “Personal Agent” system architecture that shows how everyday life is digitized to create an AI-based personal assistant:

Left side: The user’s daily activities (coffee, computer, exercise, sleep) serve as the raw source for digitization.

Center-left: Sensors for five modalities (visual, auditory, tactile, olfactory, gustatory) capture these activities and convert them into data through the “Digitization” process.

Center: The “Current State (Prompting)” component holds a digitized snapshot of the user’s current state and supplies it to the AI agent as prompt context.
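One way to picture the digitization and “Current State (Prompting)” steps is as a small record that keeps the latest reading per sensor modality and renders it as text the agent can use as prompt context. The diagram does not specify a data format. `SensorReading`, `CurrentState`, and `to_prompt` are therefore hypothetical names, and each reading is assumed to arrive already summarized as a short text description.

```python
from dataclasses import dataclass, field
from datetime import datetime

# The five sensor modalities named in the diagram.
MODALITIES = ("visual", "auditory", "tactile", "olfactory", "gustatory")


@dataclass
class SensorReading:
    modality: str      # one of MODALITIES
    description: str   # assumed: already-digitized text summary
    timestamp: datetime


@dataclass
class CurrentState:
    # Latest reading per modality; a newer reading overwrites the older one.
    latest: dict = field(default_factory=dict)

    def update(self, reading: SensorReading) -> None:
        if reading.modality not in MODALITIES:
            raise ValueError(f"unknown modality: {reading.modality}")
        self.latest[reading.modality] = reading

    def to_prompt(self) -> str:
        # Render the snapshot as plain-text context, in a fixed modality order.
        lines = ["Current user state:"]
        for modality in MODALITIES:
            reading = self.latest.get(modality)
            if reading is not None:
                lines.append(
                    f"- {modality} ({reading.timestamp:%H:%M}): {reading.description}"
                )
        return "\n".join(lines)


# Usage: two readings arrive, then the snapshot is turned into prompt text.
state = CurrentState()
state.update(SensorReading("visual", "user is typing at a computer", datetime(2025, 1, 1, 9, 30)))
state.update(SensorReading("auditory", "quiet room", datetime(2025, 1, 1, 9, 31)))
print(state.to_prompt())
```

Keeping only the latest reading per modality matches the idea of a *current* state. Longer-term history belongs to the logging side of the system.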

Upper right (pink area): Two key processes take place:

- “Learning”: Processing the collected user data with ML/LLM techniques

- “Logging”: Continuously collecting data to update the vector database

This area runs on a “Personal Server or Cloud”: preferably a personal GPU server such as NVIDIA DGX Spark, or alternatively a cloud environment.
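The “Logging” step can be pictured as appending each digitized observation, together with its embedding, to a store that can later be searched by similarity. This is a minimal sketch under several assumptions. A toy hashed bag-of-words embedding stands in for a real embedding model, a plain in-memory list stands in for a real vector database, and `embed`, `VectorLog`, and `DIM` are hypothetical names. The “Learning” step is not shown.

```python
import hashlib
import math
from dataclasses import dataclass, field

DIM = 256  # toy embedding size (assumption)


def embed(text: str) -> list[float]:
    """Toy hashed bag-of-words embedding, L2-normalized.

    Stand-in for a real embedding model; for illustration only.
    """
    vec = [0.0] * DIM
    for token in text.lower().split():
        # md5 gives a stable hash, so buckets are the same on every run.
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


@dataclass
class VectorLog:
    entries: list = field(default_factory=list)  # (text, vector) pairs

    def log(self, text: str) -> None:
        # "Logging": store each new observation alongside its embedding.
        self.entries.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[str]:
        # With normalized vectors, cosine similarity is just a dot product.
        q = embed(query)
        scored = [(sum(a * b for a, b in zip(q, v)), t) for t, v in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [t for _, t in scored[:k]]


# Usage: log a few daily events, then retrieve the closest match.
store = VectorLog()
for event in ["drank coffee at 9am", "ran 5 km in the evening", "slept 6 hours"]:
    store.log(event)
print(store.search("slept 6 hours", k=1))
```

A production setup would replace `embed` with a learned model and `VectorLog` with an actual vector database. The interface stays the same either way: write on every observation, query by similarity.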

Lower right: The “On-Device Works” area hosts the “Inference” process. Using the current state data, the AI agent infers what guidance the user needs, and this step runs directly on the user’s personal device.
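The on-device inference step can be sketched as combining the current state with relevant history and passing the result to a local model. This is a minimal sketch under stated assumptions. The model is represented only as a hypothetical `OnDeviceModel` callable (prompt in, text out), `stub_model` is a keyword-based placeholder rather than a real LLM, and the memories are assumed to have already been synced from the server-side vector database.

```python
from typing import Callable

# Hypothetical interface for a small on-device model: prompt in, text out.
OnDeviceModel = Callable[[str], str]


def build_inference_prompt(current_state: dict[str, str], memories: list[str]) -> str:
    """Combine the current state with memories synced from the server."""
    state_lines = [f"- {key}: {value}" for key, value in sorted(current_state.items())]
    memory_lines = [f"- {m}" for m in memories] or ["- (none)"]
    parts = [
        "You are a personal agent. Suggest one piece of guidance for the user.",
        "Current state:",
        *state_lines,
        "Relevant history:",
        *memory_lines,
    ]
    return "\n".join(parts)


def infer_guidance(current_state: dict[str, str], memories: list[str],
                   model: OnDeviceModel) -> str:
    # Prompt assembly and the model call both happen locally in this sketch.
    prompt = build_inference_prompt(current_state, memories)
    return model(prompt).strip()


def stub_model(prompt: str) -> str:
    # Placeholder for a real on-device LLM; reacts to one keyword only.
    if "sleep: 5 hours" in prompt:
        return " Consider an earlier bedtime tonight. "
    return " No suggestion right now. "


# Usage: short sleep in the current state triggers the stub's suggestion.
guidance = infer_guidance(
    {"sleep": "5 hours", "activity": "at computer"},
    ["usually sleeps 7 hours"],
    stub_model,
)
print(guidance)
```

Keeping the model behind a plain callable makes it easy to swap the stub for whatever local runtime the device actually uses, without touching the prompt-building logic.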

Center bottom: The cute robot icon represents the AI agent, which provides personalized guidance to the user through the “Agent Guide” component.

Overall, the system forms a cycle. It digitizes the user’s daily life, learns from that data to continuously update a personalized vector database, and feeds the current state to the AI agent, which runs inference on-device to deliver customized guidance.

with Claude