From DALL-E, with some prompting

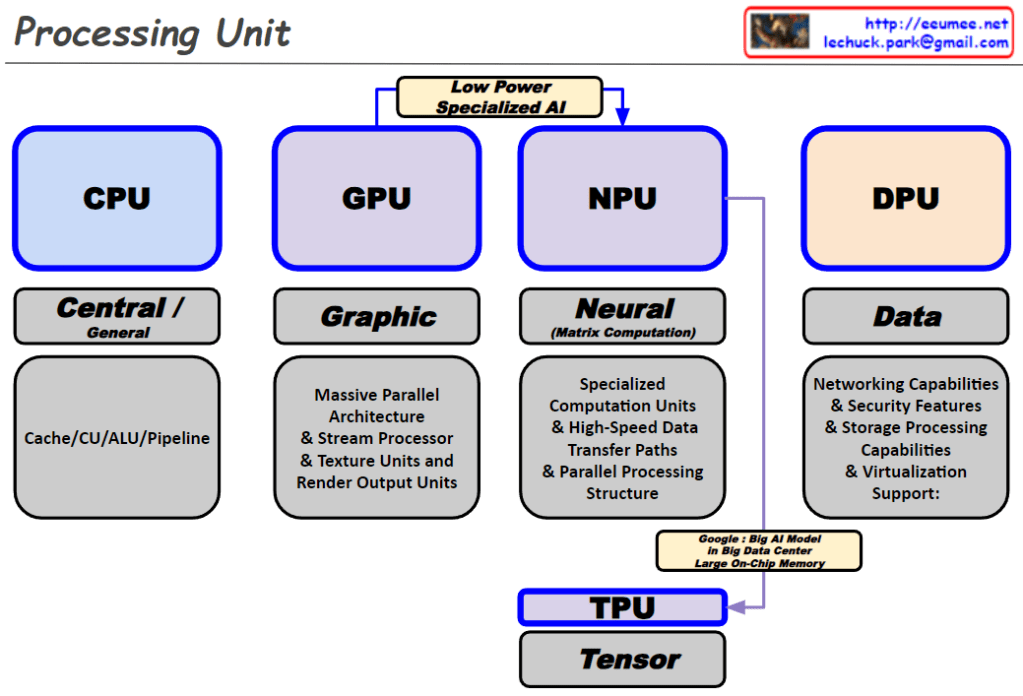

Processing Unit

- CPU (Central Processing Unit): Central / General
  - Cache, Control Unit (CU), Arithmetic Logic Unit (ALU), Pipeline

- GPU (Graphics Processing Unit): Graphics
  - Massively Parallel Architecture
  - Stream Processors, Texture Units, and Render Output Units

- NPU (Neural Processing Unit): Neural (Matrix Computation)
  - Specialized Computation Units
  - High-Speed Data Transfer Paths
  - Parallel Processing Structure

- DPU (Data Processing Unit): Data
  - Networking Capabilities & Security Features
  - Storage Processing Capabilities
  - Virtualization Support

- TPU (Tensor Processing Unit): Tensor
  - Tensor Cores
  - Large On-Chip Memory
  - Parallel Data Paths

Additional Information:

- The NPU and TPU are set apart from the other units by their low power consumption and their specialized AI purpose.

- The TPU was developed by Google for large AI models in big data centers and features large on-chip memory.

The diagram emphasizes the specialized nature of the NPU and TPU for AI tasks, highlighting their low power consumption and their dedicated hardware for neural and tensor computations. It contrasts these with the general-purpose design of the CPU and the graphics-processing orientation of the GPU. The DPU is presented as specialized for data-centric tasks involving networking, security, and storage in virtualized environments.
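To make the "Neural (Matrix Computation)" label more concrete, here is a minimal sketch of the kind of work an NPU or TPU is built to accelerate: a single dense neural-network layer, y = Wx + b. It is plain Python and runs on the CPU; nothing here talks to real accelerator hardware. The names `dense_layer` and `count_macs` are made up for illustration and do not come from the diagram.

```python
# Illustrative sketch only: pure Python, no accelerator involved.
# dense_layer and count_macs are hypothetical helper names.

def dense_layer(weights, inputs, bias):
    """Compute y = W x + b for one input vector.

    Each output element depends on only one row of W, so on parallel
    hardware all rows could be evaluated at the same time.
    """
    outputs = []
    for row, b in zip(weights, bias):
        acc = b
        for w, x in zip(row, inputs):
            acc += w * x  # one multiply-accumulate (MAC) per weight
        outputs.append(acc)
    return outputs


def count_macs(n_outputs, n_inputs):
    """Number of multiply-accumulate operations in one dense layer."""
    return n_outputs * n_inputs


W = [[1, 2, 3],
     [4, 5, 6]]
x = [1, 0, -1]
b = [0, 10]

print(dense_layer(W, x, b))      # [-2, 8]
print(count_macs(4096, 4096))    # 16777216
```

The inner loop is the same multiply-accumulate repeated over and over, and the rows do not depend on each other. That regularity is presumably why the diagram lists parallel structures and fast data paths for the NPU and TPU: a layer with 4096 inputs and 4096 outputs already needs about 16.8 million MACs for a single input vector.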